The Scariest People in the World: Maniacs, Misanthropes, and Silicon Valley Pro-Extinctionists

On how emerging technologies are dissolving the social contract, and enabling malicious actors to wreak civilizational havoc. (3,200 words.)

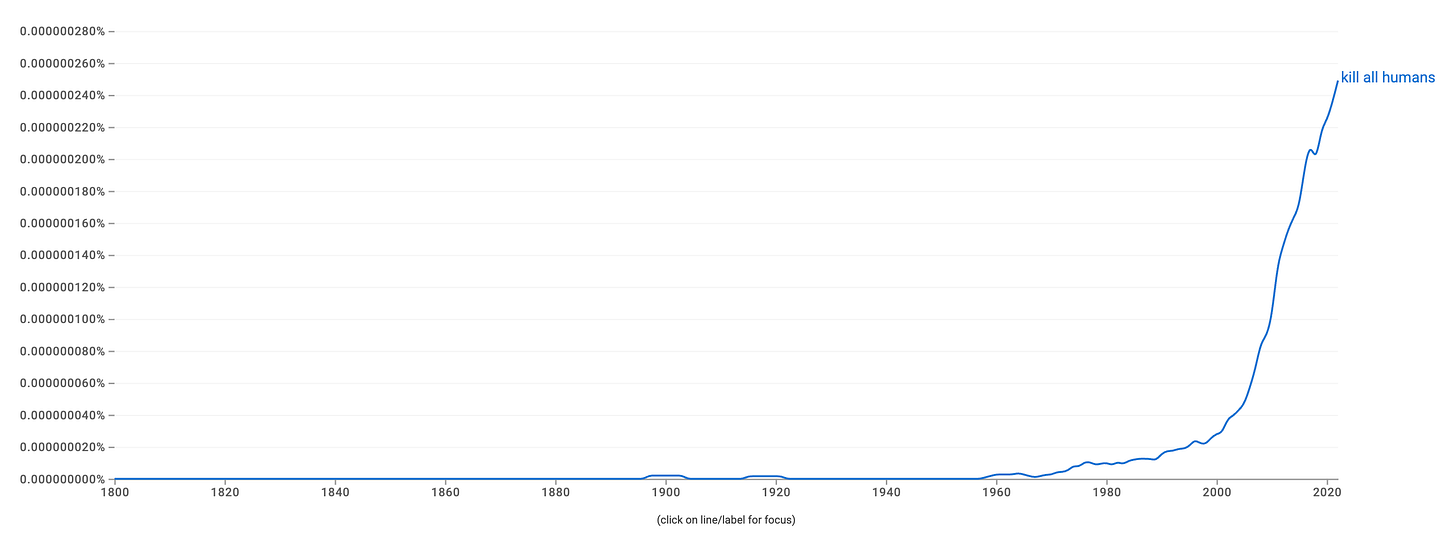

Starting off with a bit of humor:

On to the main subject of this article! Content warning: I discuss some psychologically disturbed individuals below who fantasized about destroying the world. There are a few quotes that are somewhat graphic.

Who exactly would destroy the world, if they could? This deceptively complex question was completely irrelevant for most of human history, because the condition “if they could” was not satisfiable. No one had the technological power to unilaterally end human history — even if they harbored a death wish for humanity.

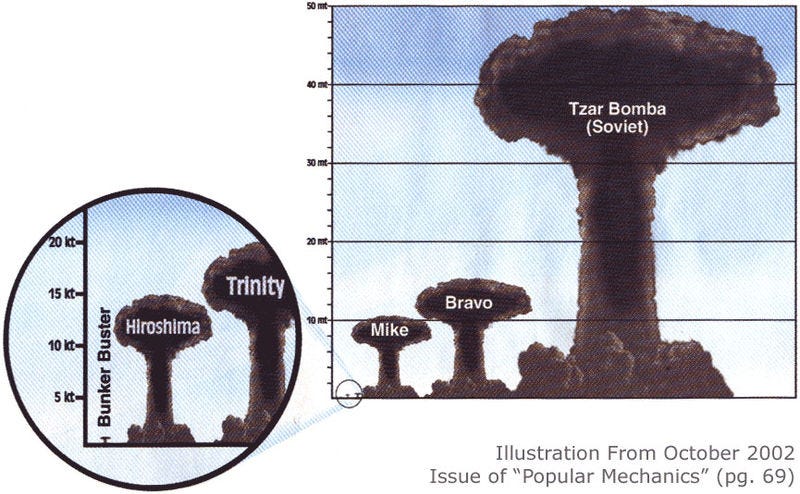

That changed in the 1950s, when the US and Soviet Union built enough thermonuclear weapons to induce a global “nuclear winter” that could have potentially killed everyone on Earth. As the great cosmologist Carl Sagan wrote in 1983, shortly after a team of scientists, including him, published an article on the nuclear winter hypothesis (dubbed the “TTAPS” paper):

There is little question that our global civilization would be destroyed. The human population would be reduced to prehistoric levels, or less. Life for any survivors would be extremely hard. And there seems to be a real possibility of the extinction of the human species.

The power to destroy the world was in the hands of political leaders on both sides of the Iron Curtain. For the first time in history, the “if they could” condition could be satisfied, which consequently foregrounds the other part of the question: what kind of person would intentionally push a “doomsday button” if one were within finger’s reach? Who would do such a thing, and why?

Omniviolence: The Democratization of Destructive Capabilities

These questions become even more pressing when one recognizes the growing power and accessibility of dual-use emerging technologies. CRISPR-Cas9, digital-to-biological converters, base editing, gene drives, USB-powered DNA sequencers, SILEX (separation of isotopes by laser excitation), cyberweapons, LLM-based AI, and perhaps even advanced molecular nanotechnology are enabling — or could enable — small groups and even lone wolves to wreak civilizational havoc.

Two centuries ago, a single individual or group could harm only a relatively small number of people in a single attack. Today, CRISPR-Cas9 makes it possible for rogue scientists (think Bruce Ivins, who worked right down the road from where I spent my childhood) or terrorist organizations to synthesize designer pathogens that could theoretically combine the lethality of rabies, the incurability of Ebola, the contagiousness of the common cold, and the long incubation period of HIV. The result?

An infectious disease that spreads around the globe undetected, hopping from one asymptomatic person to the next, until suddenly hospitals are overflowing with dying patients. But no help is available, because medical experts have never seen anything like the disease and, well, most of them are also dying or already dead.

It’s not just biotechnology that poses a dire threat. I mentioned SILEX above, which could make it much easier for state and nonstate actors alike to produce weapons-grade enriched uranium. Similar dangers concern lethal autonomous weapons, such as drones. As the computer scientist Stuart Russell warned years ago:

A very, very small quadcopter, one inch in diameter can carry a one- or two-gram shaped charge. You can order them from a drone manufacturer in China. You can program the code to say: “Here are thousands of photographs of the kinds of things I want to target.” A one-gram shaped charge can punch a hole in nine millimeters of steel, so presumably you can also punch a hole in someone’s head. You can fit about three million of those in a semi-tractor-trailer. You can drive up I-95 with three trucks and have 10 million weapons attacking New York City. They don’t have to be very effective, only 5 or 10% of them have to find the target.

This scenario, which inspired the short film below, could be scaled up arbitrarily. Maybe there are 10 trucks spewing out such insect-sized drones — or maybe 100 trucks strategically placed in 10 major cities around the world. The political scientist Daniel Deudney calls the novel possibility of catastrophic attacks enabled by the democratization of dual-use science and technology “omniviolence.”

Another way to put this is that the k/k ratio — that is, the ratio of killers to those killed — is dramatically falling. One lone wolf, or a single group of malicious individuals, can harm more people than ever before. At the extreme, they could potentially harm a sizable fraction of the human population and perhaps even precipitate the extinction of our species. I’m not convinced that such claims are hyperbolic (but you are more than welcome to argue that I’m wrong below! After all, I might be and hope that I am!).

This is worrisome because it suggests that the number of people who could unilaterally destroy the world is rapidly increasing. And that, in turn, means that the probability of a global catastrophe could significantly rise in the future (without, say, mass global surveillance, which no sane person wants). To quote an MIT Technology Review article about a fascinating academic paper by John Sotos:

The results [of Sotos’ calculations] are sobering. If there is a one in 100 chance that somebody with the technology will release it, and there are a few hundred individuals like this, then our civilization is doomed on a timescale of 100 years or so. If there are 100,000 individuals with this technology, then the probability of them releasing it needs to be less than one in 10⁹ for our civilization to last 1,000 years.

Years earlier, I provided similar calculations (which Sotos didn’t know about when he wrote his paper, as I confirmed through personal communication). Here’s a slightly edited excerpt of what I wrote in 2017:

Consider a hypothetical situation involving 10 billion individuals. Assume that only 500 of them — that is, a mere 0.000005 percent of the global population — have access to world-destroying technologies, and the probability of each destroying the world is only 0.01 per 100-year period. What’s the likelihood that humanity would survive a single century? The answer is a mere 1% — i.e., there would be a staggering 99% chance of annihilation. Alternatively, consider a case in which all 10 billion people have access to a “doomsday button.” How likely is this civilization to survive the century if each of its citizens has a negligible 0.0000000004 chance of pressing a button per 100 years? Again, the odds are overwhelmingly against civilization enduring, with a 99 percent chance of self-destruction.
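The arithmetic behind figures like these can be checked with a short sketch. It assumes, as a simplification, that each person decides independently and with the same probability, so the chance that nobody destroys the world in a given century is (1 − p)ⁿ. The function name `survival_probability` is hypothetical and chosen here only for illustration.

```python
import math


def survival_probability(n_agents: int, p_destroy: float) -> float:
    """Chance that none of n_agents independent actors destroys the world.

    Assumes every actor has the same per-century probability p_destroy
    and that their choices are independent (a simplifying assumption).
    """
    # (1 - p)^n, computed via log1p so that tiny p values keep precision.
    return math.exp(n_agents * math.log1p(-p_destroy))


# Scenario 1: 500 capable individuals, each with a 0.01 chance per century.
few = survival_probability(500, 0.01)

# Scenario 2: 10 billion people, each with a 0.0000000004 chance per century.
many = survival_probability(10_000_000_000, 4e-10)

print(f"500 agents, p = 0.01:         survival = {few:.4f}")
print(f"10 billion agents, p = 4e-10: survival = {many:.4f}")
```

Under these assumptions the survival odds come out at roughly 0.7 percent in the first scenario and roughly 1.8 percent in the second, in line with the rounded figures in the excerpt. The result is sensitive to the independence assumption: if would-be destroyers were deterred or detected in correlated ways, the numbers would change.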

A friend of mine, a journalist, quips that we seem to be heading in the direction of a world in which every individual has the capacity to blow up the entire planet by pushing a button on his or her cell phone. … How long do you think the world would last if five billion individuals each had the capacity to blow the whole thing up? No one could plausibly defend an answer of anything more than a second. Expected life span would hardly be longer if only one million people had these cell-phones, and even if there were 10,000 you’d have to think that an eventual global holocaust would be pretty likely. Ten thousand is only two millionths of five billion. — Stanford political scientist James Fearon

Implications for the Social Contract

Here’s an interesting implication of omniviolence. Consider the social contract, an idea that goes back to Classical Athens, when Socrates chose to accept his death sentence because of the social contract he “signed” with the city-state in which he lived. It was, however, Thomas Hobbes who offered the first systematic account of social contract theory (or contractarianism), arguing that the contract consists in citizens like you and me giving up certain freedoms in exchange for security from the state (which he called the “Leviathan”). States essentially act as neutral referees, with a license to intervene in affairs — using force and even lethal violence when necessary — to ensure the security of their citizens. If Joe cheats Alice out of money, the state swoops down to force Joe to repay Alice, perhaps throwing him in prison as punishment.

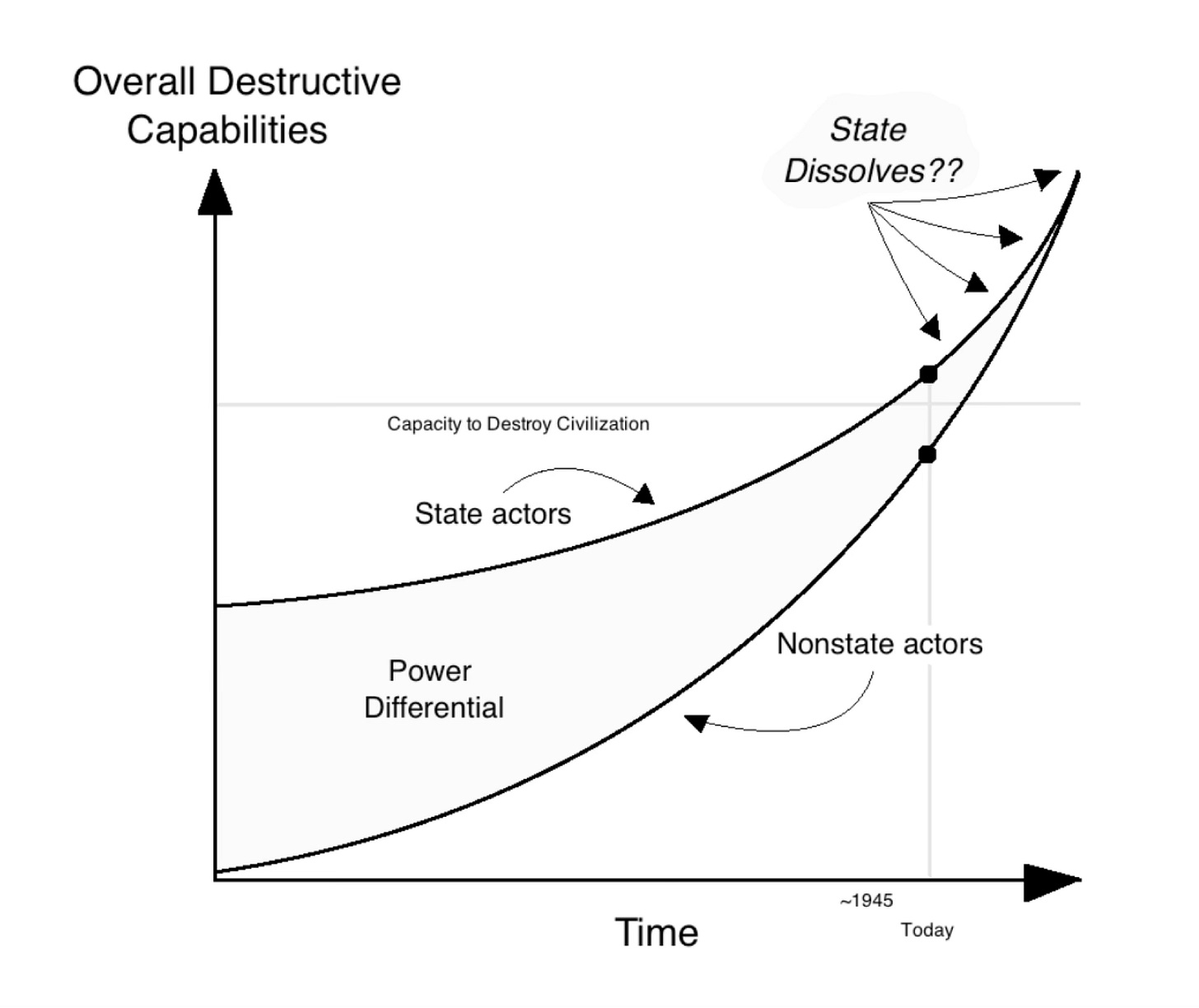

The point: this social contract is predicated on there being a power differential between states and citizens. If Joe has his own 1-million-soldier-strong military, his own nuclear arsenal, etc., then good luck to the state trying to enforce those anti-theft laws! It’s precisely because you and I have much less power than states that such top-down refereeing works.

Enter omniviolence: what if individuals or small groups acquire extreme destructive capabilities? What if they have the same capacities for force and lethal violence as states? It seems like the social contract collapses, as the power differential diminishes. The modern state system cannot exist in such circumstances — or so it seems to me. (But what do you think?) Here’s a little diagram representing this from a paper I published several years ago:

Now that People Are Able, Who Is Willing?

Let’s return to the main question: given that a growing multiplicity of people will be able to destroy the world due to emerging technologies, who exactly would do this? In other words, who would be both able and willing?

First, it’s worth disambiguating the phrase “destroy the world.” In a 2023 article, I argued that it could denote at least four scenarios:

1. Kill a large portion of the world’s population.
2. Obliterate modern civilization.
3. Trigger a global conflict or apocalyptic war.
4. Bring about the extinction of humanity.

You may be surprised — or not! — to know that there are a lot of people out there who explicitly want to bring about one or more of these outcomes (such outcomes aren’t mutually exclusive, of course, although some people only explicitly advocate for one outcome, while others want a particular outcome while avoiding others).

By “a lot of people,” I don’t mean that the percentage of wannabe world-destroyers is large relative to the global population. I’m saying that, in absolute terms, the world is full of people fantasizing about destroying it. In some cases, these very same people have gone on to commit atrocities like rampage shootings, mass murder, etc.

In a series of papers published in 2018 (here and here), I outlined four primary categories of people who would destroy the world if they could. These are:

—> (1) Apocalyptic terrorists, many of whom embrace an “active eschatology” according to which they are divinely mandated to catalyze the end of the world. What lies on the other side of the apocalypse is utopia, so the sooner we get the apocalypse, the sooner they get utopia. Put differently, the world must be destroyed to be saved.

Examples include groups like the Islamic State (ISIS), Aum Shinrikyo, Christian Identity, etc. Of all the categories, this is the most crowded, as history is replete with movements that would have almost certainly pushed a doomsday button if one were available, to accelerate the onset of paradise. (I wrote about this a bit in my “clash of eschatology” essay.) Indeed, a laptop belonging to an ISIS member, found circa 2014, “contained a 19-page manual to learn how to turn the bubonic plague into a weapon of war.” The manual observes that “there are many methods to spread the biological or chemical agents in a way to impact the biggest number of people. Air, main water supplies, food. The most dangerous is through the air.” Yikes.

—> (2) Radical environmentalists/ecoterrorists who argue that our species is a “cancer” on the biosphere that must be excised through force and violence. For example, the Gaia Liberation Front says that the entire population must be exterminated because “if any Humans survive, they may start the whole thing over again.” They add that “bioengineering” is “the specific technology for doing the job right — and it’s something that could be done by just one person with the necessary expertise and access to the necessary equipment.” This idea was earlier expressed by an anonymous author in the Earth First! Journal, writing that “contributions are urgently solicited for scientific research on a species specific virus that will eliminate Homo shiticus from the planet.”

Or consider the Finnish “eco-fascist” Pentti Linkola, who declared in 1994 that “if there were a button I could press, I would sacrifice myself without hesitating, if it meant millions of people who would die.” Linkola wants to avoid an “ecocatastrophe” by significantly reducing the total number of people on Earth. On another occasion, he said that another world war would be “a happy occasion for the planet” only if it destroys “the actual breeding potential” of our species: “young females as well as children, of which a half is girls. If this doesn’t happen, waging war is mostly [a] waste of time or even harmful.”

Also in this category are some anarcho-primitivists and neo-Luddites, including the infamous Ted Kaczynski, discussed more below.

—> (3) Negative utilitarians, philosophical pessimists, and others motivated by “ethical” concerns. According to negative utilitarianism (NU), our sole moral obligation in the universe is to eliminate suffering. Since eliminating that which suffers (sentient life) would eliminate suffering, we should strive to become “world-exploders” (R. N. Smart’s colorful term from 1958) who annihilate everything.

Indeed, the philosopher David Pearce describes himself as a “negative utilitarian who wouldn’t hesitate to initiate a vacuum phase transition ... if he got the chance.” The “vacuum phase transition” term references the possibility that our universe is in a “false vacuum” or “metastable” state. Hence, it could potentially flip into a “true vacuum” state if a “vacuum bubble” were nucleated by, e.g., a high-powered particle accelerator. This bubble would expand in all directions at nearly the speed of light, decimating everything it comes into contact with. Our entire future light cone would be obliterated!

The contemporary Efilist movement — where “Efil” is “life” spelled backwards — embraces NU and philosophical pessimism, arguing that our goal should be to destroy all life on Earth and in the cosmos. As the leading Efilist Amanda Sukenick puts it:

If you could end suffering tomorrow, probably anything is justifiable; inflicting just about anything is probably justifiable … by any means necessary. If I found out tomorrow that the only way that sentient extinction could possibly happen was skinning all the living things alive slowly, I’d hate it, but I would say that it’s what we have to do.

As it happens, an Efilist recently killed himself upon detonating a bomb that tore through a fertility clinic in Palm Springs, California. I’m sure this isn’t the last we’ll hear of Efilism.

—> (4) The last category is a catch-all consisting of individuals or groups who are motivated to destroy the world for idiosyncratic reasons. There are a ton of examples, many of which involve individuals who both fantasized about destroying the world and committed heinous acts of violence.

For example, one of the perpetrators of the 1999 Columbine massacre, Eric Harris, repeatedly wrote about killing everyone on Earth: “I think I would want us to go extinct,” he said. “I just wish I could actually DO this instead of just DREAM about it all.” He also proclaimed, in the most horrific way imaginable, that “if you recall your history the Nazis came up with a ‘final solution’ to the Jewish problem. Kill them all. Well, in case you haven’t figured it out yet, I say ‘KILL MANKIND’ no one should survive.”

The Matrix of Doom

There are many more examples like these — in fact, they’re all over the Internet. On Debate.org someone asked: “if you could push a button and destroy all human life. [sic] Would you? All other life would survive as is; plus evidence of mankind too.” Many people answered “yes,” with one writing that “my view is that Mankind is a plague … I vote to destroy mankind and let nature start over.” Another said, “in the short time we’ve been on this planet, humans have already destroyed so much … The world would be better off without humans as a whole.”

As alluded to above, the taxonomy of types of doomsday agents cuts across the different goals that one might have. Most apocalyptic terrorists don’t explicitly want to bring about human extinction — indeed, they would say that human extinction isn’t even possible, given a religious eschatology according to which humanity will survive the cataclysmic paroxysms of the end times (the eschaton!).[1] Rather, in many cases they’ve wanted to trigger a global war — Armageddon — in hopes of reaching paradise within their lifetimes. This is precisely what both ISIS and Aum Shinrikyo aimed for, as I’ve discussed elsewhere.

Some environmentalists want to kill all humans, while others only want to involuntarily reduce the human population. As Linkola put it, human extinction would be “an extremely bad thing.” Kaczynski also didn’t advocate for our extinction: he wanted the megatechnics of modern industrial civilization to collapse so we can live in local communities with small-scale technologies, which he believed are most conducive to human freedom. The same goes for anarcho-primitivists who want to return to nature (“Back to the Pleistocene”!): their goal isn’t human extinction, but a return to the hunter-gatherer lifeways of our Paleolithic ancestors.

Negative utilitarians, however, tend to want complete human extinction. (There are some interesting exceptions, but this is the obvious and straightforward implication of their ethical theory.) Efilists would say that fewer people would be better, but no people would be best.

Similarly, many idiosyncratic actors have expressed a desire to annihilate everyone on Earth, although not all such actors. For example, Elliot Rodger, the raging misogynist behind the 2014 Isla Vista massacre, railed against women who had rejected his “sexual advances.” He declared: “Women’s rejection of me is a declaration of war, and if it’s war they want, then war they shall have. It will be a war that will result in their complete and utter annihilation. I will deliver a blow to my enemies that will be so catastrophic it will redefine the very essence of human nature.”

But he didn’t advocate the elimination of our species. Yes, “humanity is a disgusting, wretched, depraved species,” he declares. “If I had it in my power, I would stop at nothing to reduce every single one of you to mountains of skulls and rivers of blood.” But he also explicitly fantasized about keeping a small group of women imprisoned “for the sake of reproduction,” thus enabling humanity to persist.

A New Type of Doomsday Agent: Silicon Valley Pro-Extinctionists

Surprisingly, virtually no scholars have explored this topic. To my knowledge, I’m the only one who’s ever published peer-reviewed articles on it!

Yet, I realized only recently that I had utterly failed to anticipate the emergence of a new type of doomsday agent: Silicon Valley pro-extinctionists, as I’m calling them. These are people who explicitly advocate for the extinction of our species in the near future through replacement with one or more “posthuman” species, usually in the form of superintelligent AGI. In previous articles for this newsletter, I’ve written about Michael Druggan, who literally argues that it’s “selfish” to want your children to survive into adulthood. If they are slaughtered and replaced by AGI, that’s a good thing, as the “cosmic destiny is more important” than your puny little human desires — like wanting your children to live.

There are lots of other examples, some of which I highlight in my 3-part series on Silicon Valley pro-extinctionism. I also have a forthcoming academic paper in The Journal of Value Inquiry titled, “Should Humanity Go Extinct? Exploring the Arguments for Traditional and Silicon Valley Pro-Extinctionism,” which goes into detail about this issue (see sections 4 and 5), as well as another one in the works that elaborates the ideas adumbrated in part 3 of my newsletter series. These people really do want our species to die out in the near future, though unlike negative utilitarians, Efilists, radical environmentalists, etc., their aim is not final extinction (we disappear and leave no successors behind) but terminal extinction without final extinction (we disappear but leave behind some successors to take our place).

Whereas dual-use emerging technologies may be the weapons of choice among those who aim for (1) mass death, (2) civilizational collapse, (3) global conflict, and (4) human extinction (in the specific sense of final extinction), these new pro-extinctionists are actively trying to develop AGI to supplant us. Druggan even worked at xAI, which aims to build superintelligent machines.

Given the extraordinary power and influence of people in Silicon Valley, I am now much more worried about this new category of doomsday agent than I am about, say, the Efilists and whoever might still align with the Gaia Liberation Front. I doubt that Silicon Valley pro-extinctionists will succeed, in the coming years, in building AGI that replaces us, but my god they’re working on it! And who knows — maybe they will succeed in achieving this goal on timescales relevant to the lives of you, me, and whatever children we might have.

Two Anxieties

In conclusion, I’m anxious about two things: first, the democratization of science and technology that could enable individuals or small groups to wield unprecedented destructive power. This could make humanity vulnerable to global-scale catastrophes unlike anything we’ve previously experienced, as well as dissolve the social contract that constitutes the foundations of our modern state system.

Second, I’m anxious about this new category of doomsday agent, which I completely failed to anticipate years ago: people in and around Silicon Valley who want to replace us with posthumanity — from Michael Druggan to Larry Page, Richard Sutton, Hans Moravec, Peter Thiel, and Eliezer Yudkowsky, among many others. Perhaps I need to write an updated article with a new, expanded typology!

What do you think? What am I missing? What have I overlooked? Please tell me why you’re more optimistic than I am — or confirm my cautious pessimism! :-)

Thanks for reading and I’ll see you on the other side!

[1] See my book Human Extinction for detailed discussion.

The number of people who express misanthropic sentiments like this seems scary. But also I think almost everyone (save for spree killers and suicide bombers) who claims these beliefs would renounce every word of it if they had a loaded gun pointed at their head for about five seconds.

Honestly Émile I have so much to say I think I’ll save it for the next subscriber Zoom Call. Very good read even if at the same time it was hard to see just how many people are pro-extinctionist and what their “justifications” are.

Also that image at the beginning was actually really funny. Personally I’d fill it with coffee or hot chocolate so I can stay warm in the winter.