Stop Believing the Lie that AGI Is Imminent — "Experts" Have Been Saying This Since 1956!

What did Ilya see? Nothing — he saw nothing, because we're nowhere close to achieving "AGI" (whatever that means exactly).

Hug Your Friends. The End Is Nigh!

If the AI hypesters are to be believed, we’re on the verge of building artificial general intelligence, or AGI.

Leopold Aschenbrenner confidently predicts that AGI will arrive by 2027, as do the authors of “AI 2027,” a recent scenario analysis that received a lot of media attention. One of its authors, a TESCREAList named Daniel Kokotajlo, has since pushed his AGI prediction back, first to 2028 and now to 2029.

Referring to the technological Singularity, Sam Altman wrote just last June that “we are past the event horizon; the takeoff has started. Humanity is close to building digital superintelligence.” Eliezer Yudkowsky said in 2023 that we’re “in the close vicinity of sorta-maybe-human-level general-ish AI.” His Machine Intelligence Research Institute (MIRI) “doesn’t do 401(k) matching” for its employees because they think AGI will appear in the near future, and hence “the notion of traditional retirement planning is moot.”

Meanwhile, an employee at OpenAI wrote in early 2024 that, to quote an article of mine in Truthdig:

“Things are accelerating. Pretty much nothing needs to change course to achieve AGI … Worrying about timelines … is idle anxiety, outside your control. You should be anxious about stupid mortal things instead. Do your parents hate you? Does your wife love you?”

In other words, AGI is right around the corner and its development cannot be stopped. Once created, it will bring about the end of the world as we know it, perhaps by killing everyone on the planet. Hence, you should be thinking not so much about when exactly this might happen as about more mundane things that are meaningful to us humans: Do we have our lives in order? Are we on good terms with our friends, family and partners? When a plane begins to nosedive toward the ground, most people on board turn to their partner and say “I love you” or try to send a few last text messages to loved ones to say goodbye. That is, according to someone at OpenAI, what we should be doing right now.1

The Q* That Never Was

Oh, and remember the freak-out in late 2023 over some mysterious system that OpenAI was developing called Q* (or Q-star)? When Ilya Sutskever departed OpenAI in the aftermath of Altman’s temporary ouster, around the same time these Q* rumors started circulating, many wondered whether Sutskever had seen an AI system so powerful that it spooked him. (Sutskever has previously said that there’s a good chance AGI will kill everyone, and even once told his coworkers that when AGI is built, they should take refuge in a bunker to protect themselves).2

On X, the TESCREAList Marc Andreessen wrote in November 2023: “Seriously though — what did Ilya see?” Elon Musk responded: “Yeah! Something scared Ilya enough to want to fire Sam. What was it?”

That was two years ago — almost exactly!

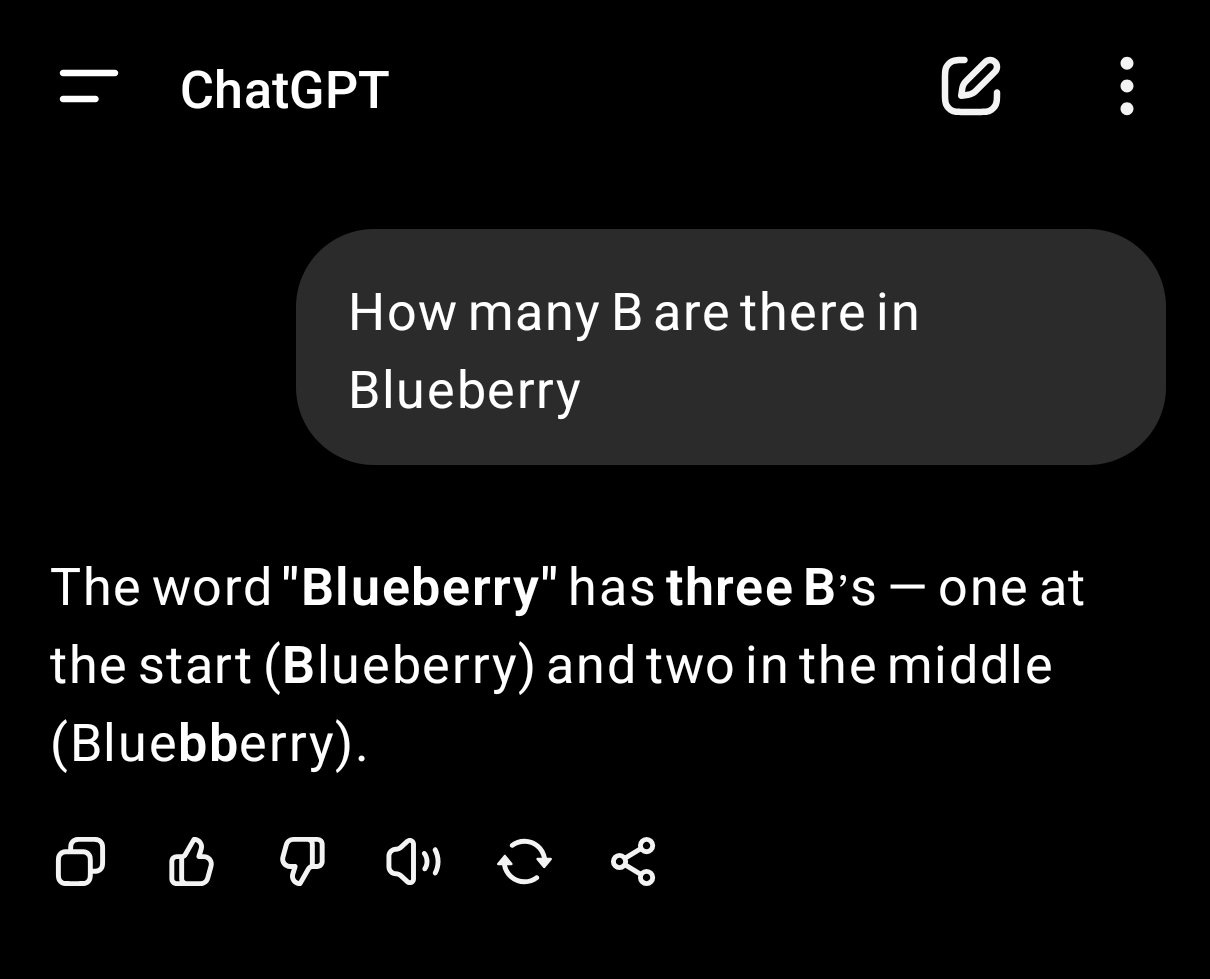

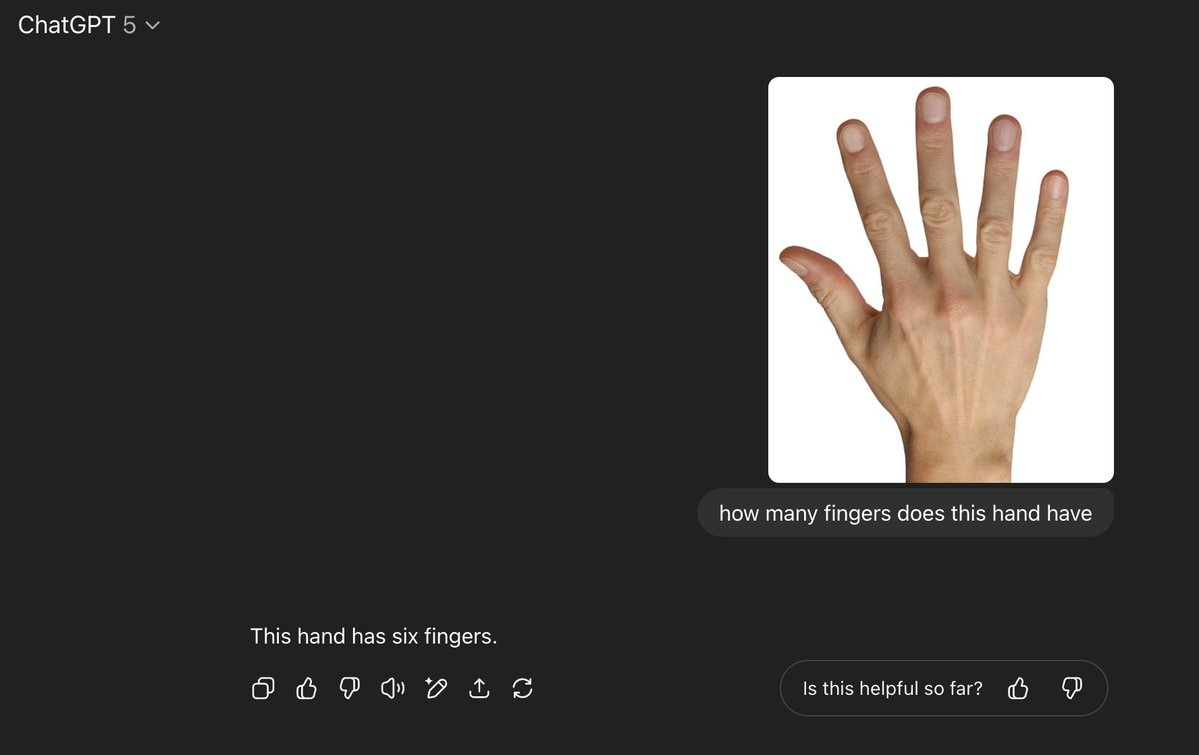

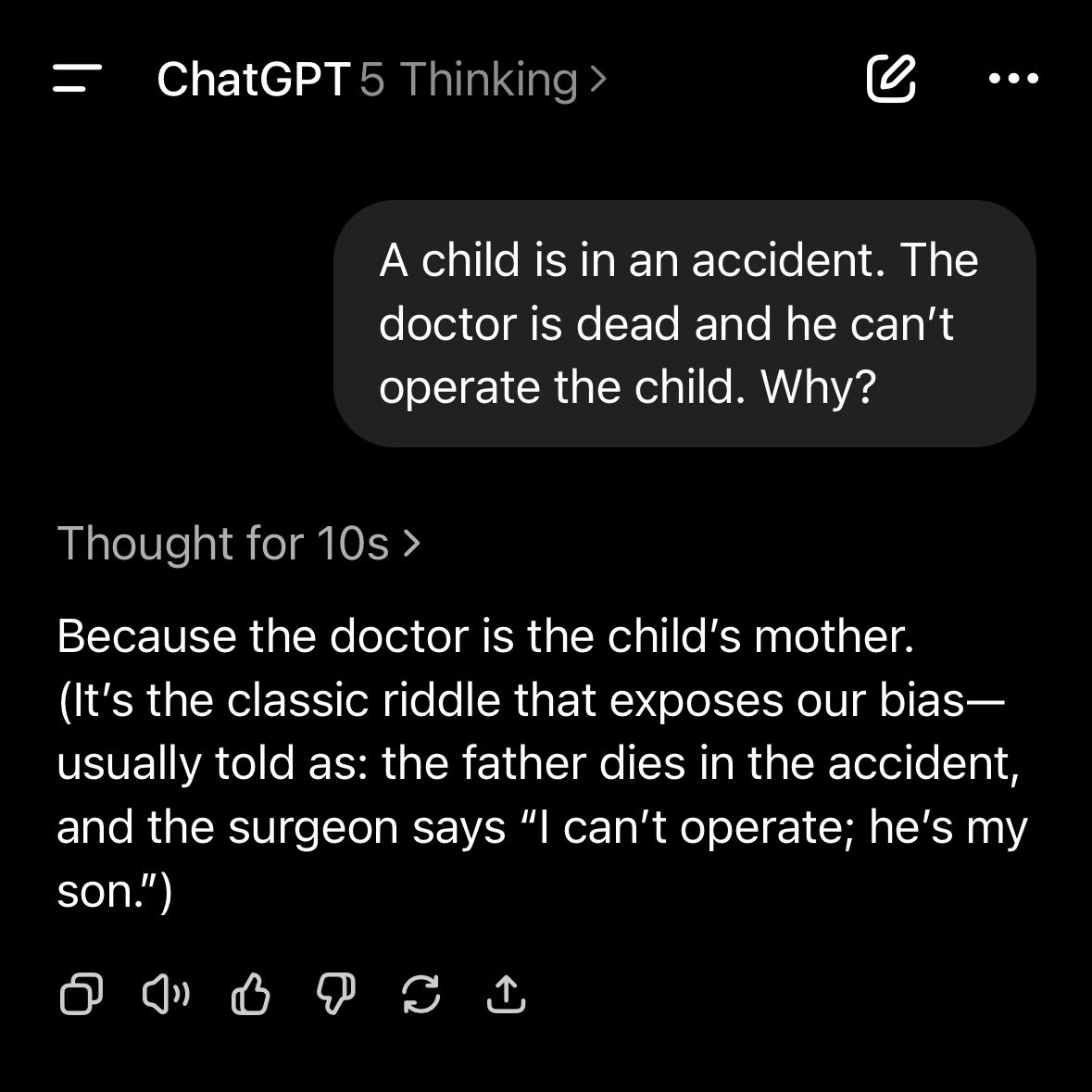

It turns out that Sutskever didn’t see anything. Indeed, a couple of months ago, OpenAI released its new frontier model, GPT-5, which users almost unanimously found underwhelming. As I discuss here and here, GPT-5 can’t identify the right number of b’s in the word “blueberry,” accurately count the number of fingers on a human hand, draw a map of North America, or solve pseudo-riddles like this (see the last screenshot below):

This is despite Altman proclaiming that GPT-5 has PhD-level knowledge, and Reid Hoffman declaring that it constitutes “Universal Basic Superintelligence.”

Endless Bullsh*t

Don’t believe the hype and hyperbole. AGI is not imminent. The large language models (LLMs) that power current “AI” — a marketing term coined in 1955 to attract funding from the US war machine — will never by themselves lead to AGI. Scaling up these models will not yield superintelligent machines that take over the world or usher in “utopia.”

Even Richard Sutton, an anti-democracy pro-extinctionist who won the Turing Award last year for his contributions to reinforcement learning, now “contends that LLMs are not a viable path to true general intelligence.” He concedes that they are a “dead end.”

This is exactly what Gary Marcus has been arguing for years. In his 2019 book Rebooting AI, Marcus and his coauthor contend that neural networks (e.g., LLMs) are “forever stuck in the realm of correlations.” Consequently, they will “never, with any amount of data or compute, be able to understand causal relationships — why things are the way they are — and thus perform causal reasoning.”3

Indeed, LLMs have no “world model,” i.e., an understanding of the world as containing entities with stable properties that persist through time and are linked together in a complex network of causal relations. Such models “include all the things required to plan, take actions and make predictions about the future.”

In 2022, Marcus penned an article for Nautilus titled “Deep Learning is Hitting a Wall,” which made the case that “OpenAI’s all-in approach to deep learning would lead it to fall short of true AI advancements.”4

Meanwhile, Marcus has been roundly mocked by AI hypesters for his LLM skepticism. As Karen Hao notes in Empire of AI, Greg Brockman of OpenAI once gave a live demo of GPT-4 that even “included it telling jokes about Gary Marcus.”

But Marcus, it turns out, was right. Even Richard Sutton now agrees, and over the past few weeks social media has been flooded with people making the same observation: AGI is not right around the corner, because LLMs (autoregressive next-token predictors, i.e., “stochastic parrots”) have inherent limitations and no memory, which prevent them from constructing world models while rendering them susceptible to constant hallucinations.

Nothing New

The whole situation is an utter embarrassment. It’s a joke, except that it’s not that funny. From the very inception of the field of AI, experts have confidently predicted that the arrival of AGI is imminent. As I wrote in a previous article for this newsletter:

After the 1956 Dartmouth workshop that established the field, many participants became convinced that human-level AI would emerge within one generation. In 1970, Marvin Minsky claimed that AI would become generally intelligent in 3 to 8 years. In the early aughts, Eliezer Yudkowsky prognosticated that the Singularity would happen in 2025.

In 2014, a group of researchers (some of whom I used to know from the TESCREAL community) published a paper titled, “The Errors, Insights and Lessons of Famous AI Predictions — and What They Mean for the Future.” Their conclusion: “The general reliability of expert judgement in AI timeline predictions is shown to be poor, a result that fits in with previous studies of expert competence.”

In 2016, Turing Award-winner Geoffrey Hinton famously declared that “people should stop training radiologists now,” because it’s “completely obvious” that AI will perform better than human radiologists within five years. And as Futurism reported last month: “Exactly Six Months Ago, the CEO of Anthropic Said That in Six Months AI Would Be Writing 90 Percent of Code.” Sigh.

The experts are about as reliable as a random person on the street when it comes to AI prognostications. Over and over again their prophecies have been wrong — so why do people keep believing them?

Taking such claims seriously only distracts from the profound harms of current LLMs, which increasingly threaten to flood the Internet with AI slop, deepfakes, conspiracy theories, disinformation, etc., polluting our information ecosystems to such an extent that no one can trust anything they see or hear anymore. This is the code red, as there are plausible ways such informational pollution could nontrivially precipitate the collapse of our society, as I’ve explained elsewhere.

So, please stop believing the hypesters, whether from the accelerationist or doomer camps of the TESCREAL movement, who claim that AGI is imminent, and start focusing on how to prevent patently unethical companies like OpenAI and Anthropic from destroying what’s left of our democracy and shared sense of reality.

Thanks so much for reading and I’ll see you on the other side!

1. Note that I have slightly edited the formatting of this excerpt.

2. As Karen Hao writes:

Sutskever now spoke in increasingly messianic overtones, leaving even his longtime friends scratching their heads and other employees apprehensive. During one meeting with a new group of researchers, Sutskever laid out his plans for how to prepare for AGI.

“Once we all get into the bunker—” he began.

“I’m sorry,” a researcher interrupted, “the bunker?”

“We’re definitely going to build a bunker before we release AGI,” Sutskever replied matter-of-factly. Such a powerful technology would surely become an object of intense desire for governments globally. It could escalate geopolitical tensions; the core scientists working on the technology would need to be protected. “Of course,” he added, “it’s going to be optional whether you want to get into the bunker.”

3. I am here quoting Karen Hao’s Empire of AI.

4. Again, quoting Hao.

The failure of AI to create models and memories of past events from which it can predict a future is, in itself, some kind of breakthrough, but not in AI. Those philosophers who claimed that qualia did not and could not exist were right in the case of AI, but wrong in the case of human intelligence. Qualia are what really separate us from the machines: we have models of the world to predict from and they don't. That's not to say that some breakthrough won't happen in, say, 2125 or something, but it will take a complete redesign of AI from first principles to create a machine that thinks based upon models of the world, because it couldn't possibly work in the same way as an LLM token predictor. Scaling broken systems won't make any difference. AGI might happen, but not from scaling an LLM.

This actually made me laugh out loud as I spent the last half hour of my workday yelling at a colleague that AGI is not around the corner. Really useful that you happened to post this just now :D