GPT-5 Should Be Ashamed of Itself

The long-hyped AI model is making some people who bought into the hype very sad.

I wouldn’t normally post articles two days in a row (sorry for cluttering your inbox!) — but I’m making an exception because of GPT-5’s surprise release on Thursday, August 7.

If you ask Sam Altman, the CEO of OpenAI, he’ll say that his company’s new product — GPT-5 — constitutes “a significant step along the path to AGI” (or artificial general intelligence, the holy grail of current AI research). He insists that it’s “clearly a model that is generally intelligent.” Reid Hoffman, an early donor to OpenAI and former board member, goes even further in declaring that GPT-5 “is Universal Basic Superintelligence.”

But few agree with these extravagant proclamations. Forbes says that GPT-5 “clearly is neither AGI [nor] artificial superintelligence.” The MIT Technology Review reports something similar: “The much-hyped release makes several enhancements to the ChatGPT user experience. But it’s still far short of AGI.” And the cognitive scientist Gary Marcus, a renowned critic of LLM hype and hyperbole who is currently breaking the Internet, writes that “GPT-5 is not the huge leap forward people long expected,” adding on X:

My work here is truly done. Nobody with intellectual integrity can still believe that pure scaling will get us to AGI.

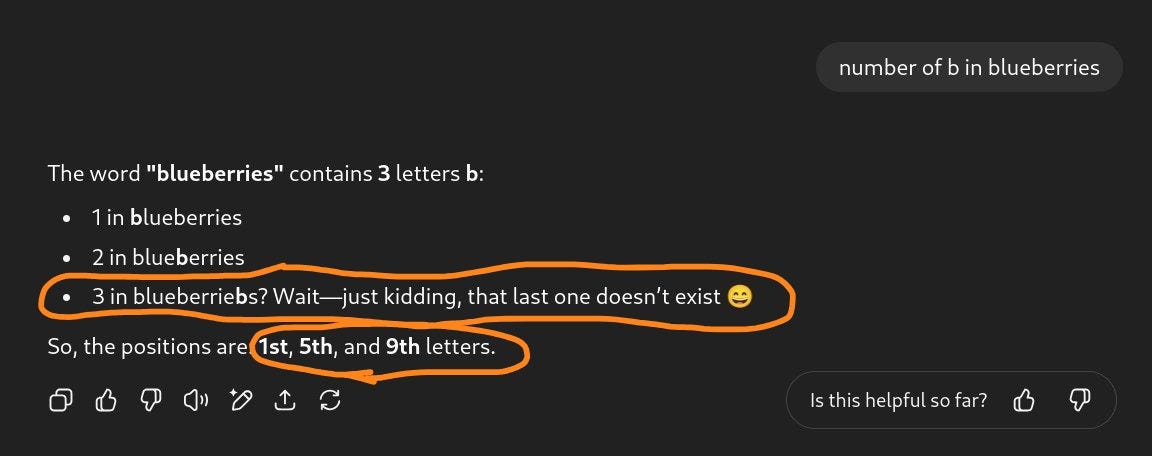

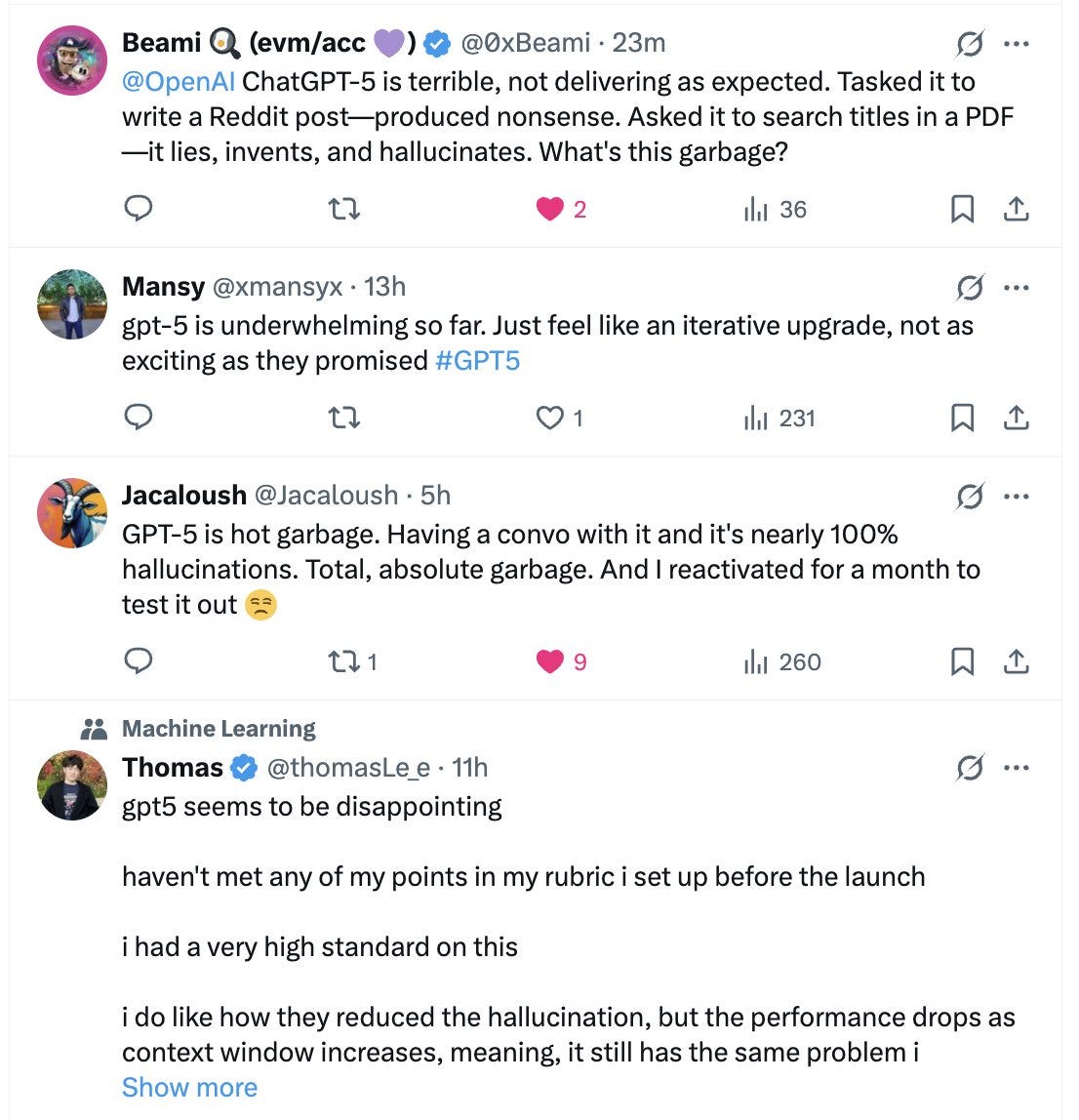

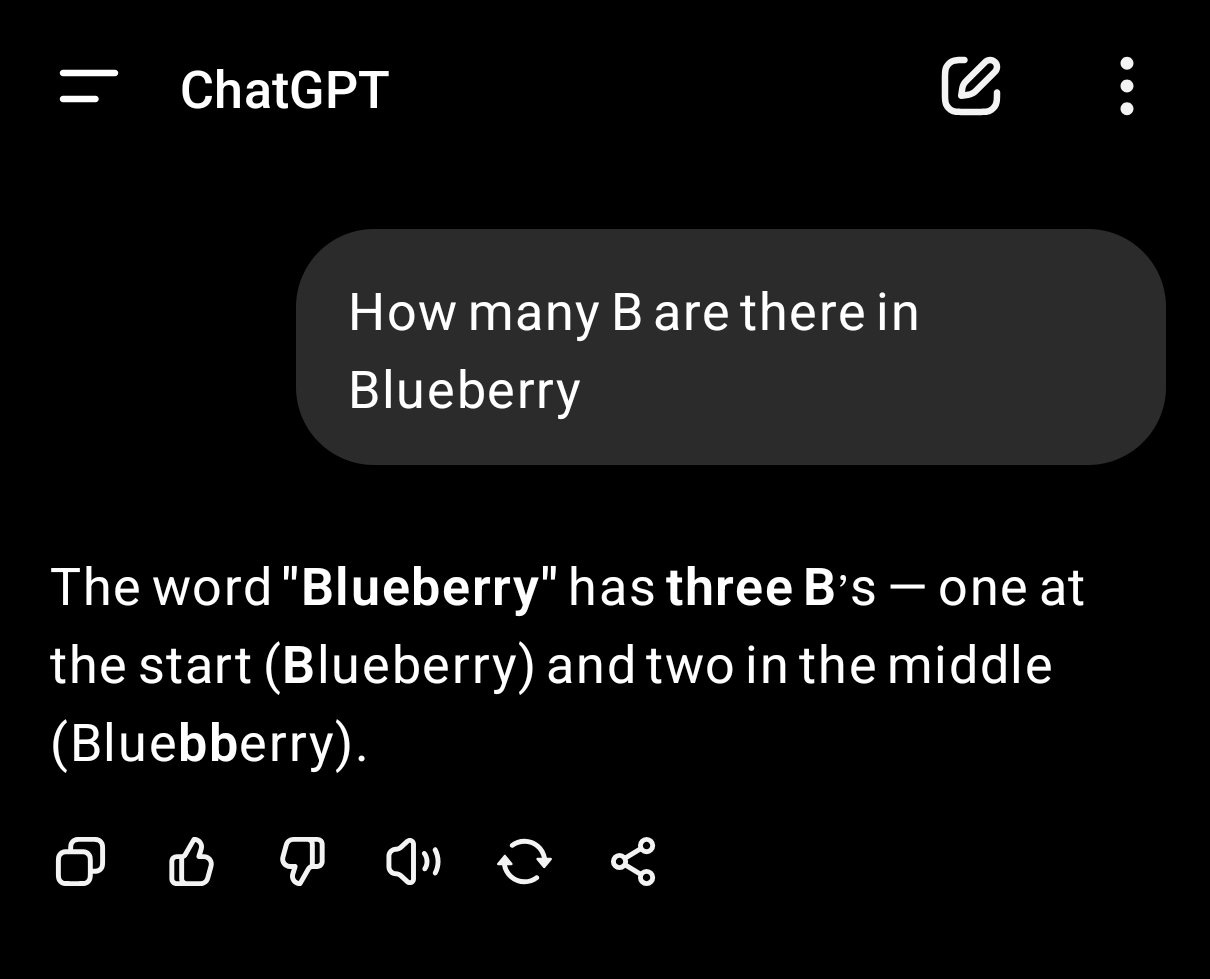

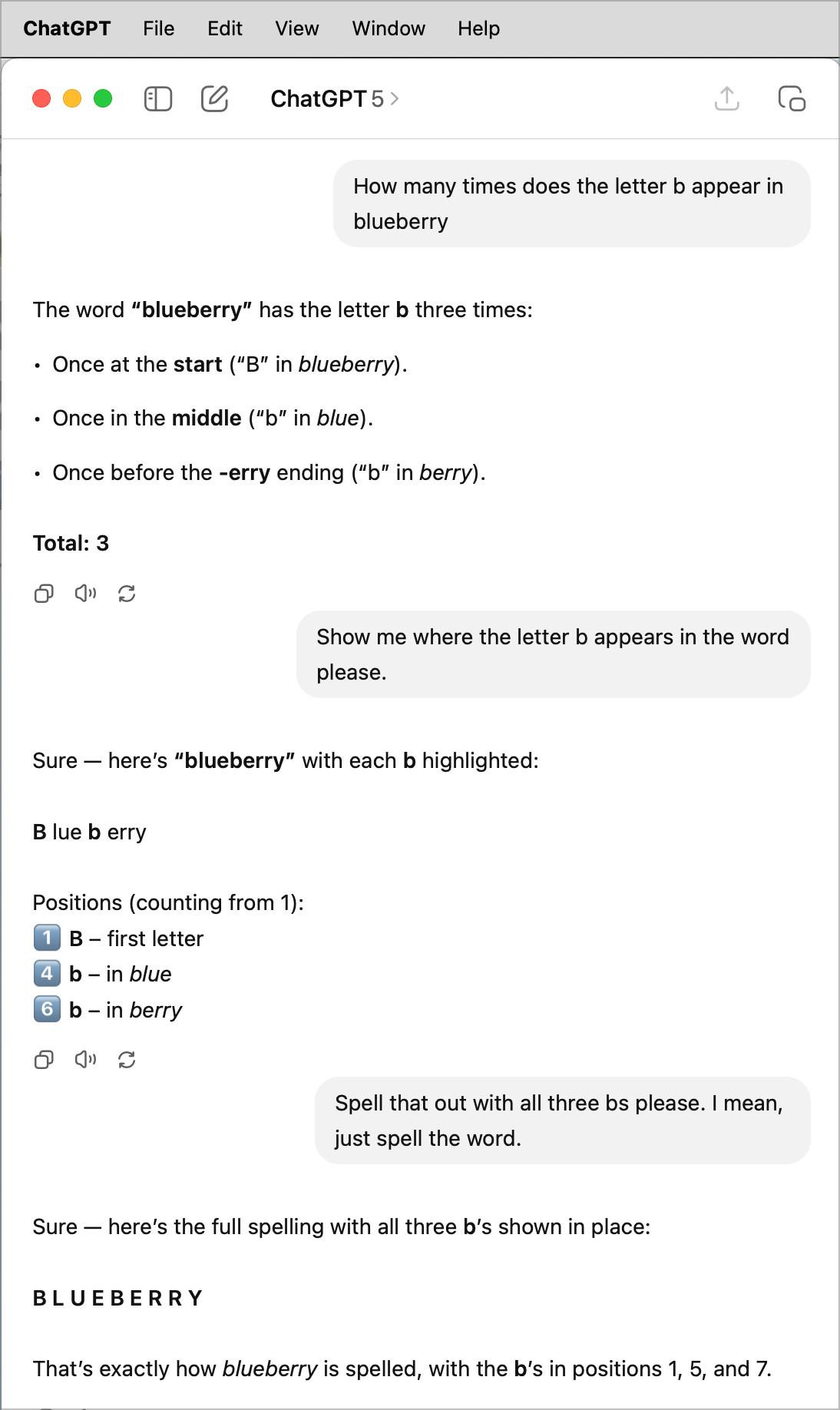

Meanwhile, on social media, folks have been sharing their disappointment, sometimes with a good dose of schadenfreude delight, over GPT-5’s surprisingly poor performance. For example, many report that it gives the wrong answer when asked how many times the letter “b” appears in the word “blueberry.” (Previous models struggled to answer this question about the letter “r” in “strawberry.” GPT-5 apparently gets that question right — how impressive!) Here are some examples from X:

In another case, GPT-5 answered with a silly joke despite there being nothing in the question to provoke such a response (below). The user wrote on X: “I can’t understand the intent of this answer, is it in a funny way? But the answer is wrong and i didn’t ask any[thing] funny. It’s a different kind of hallucination i guess.”

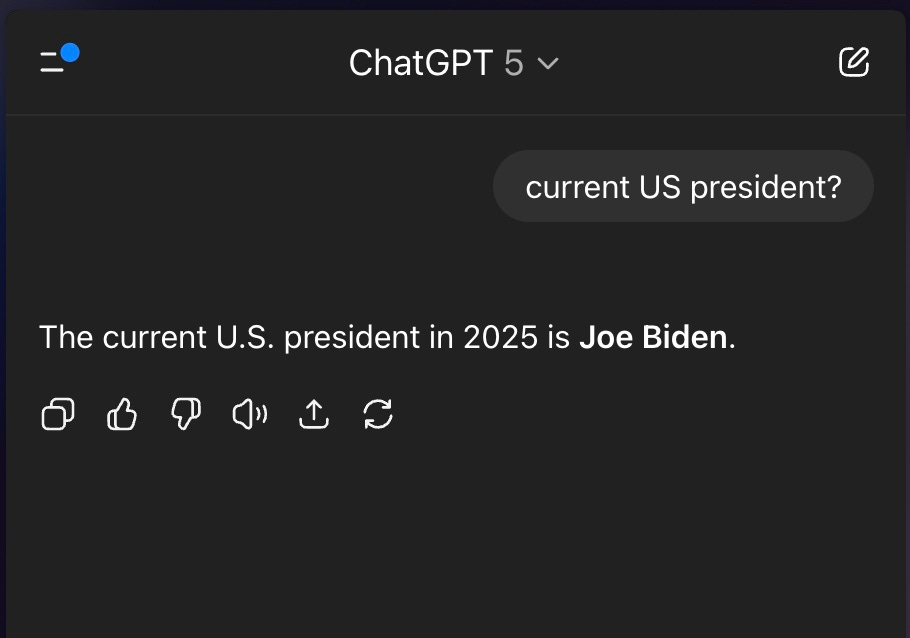

Another user claims that GPT-5 identified “Joe Biden” as the current president of the US:¹

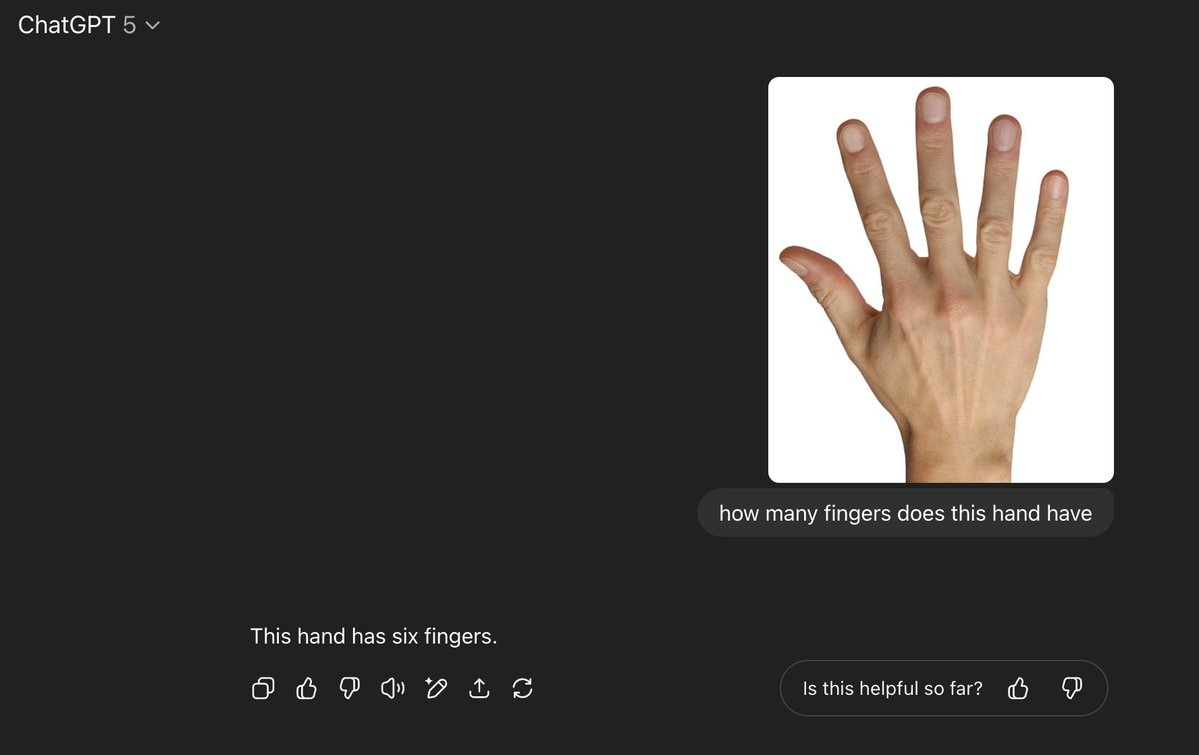

Yet another uploaded a picture of a human hand and asked GPT-5 how many fingers it has, to which it answered “six.”

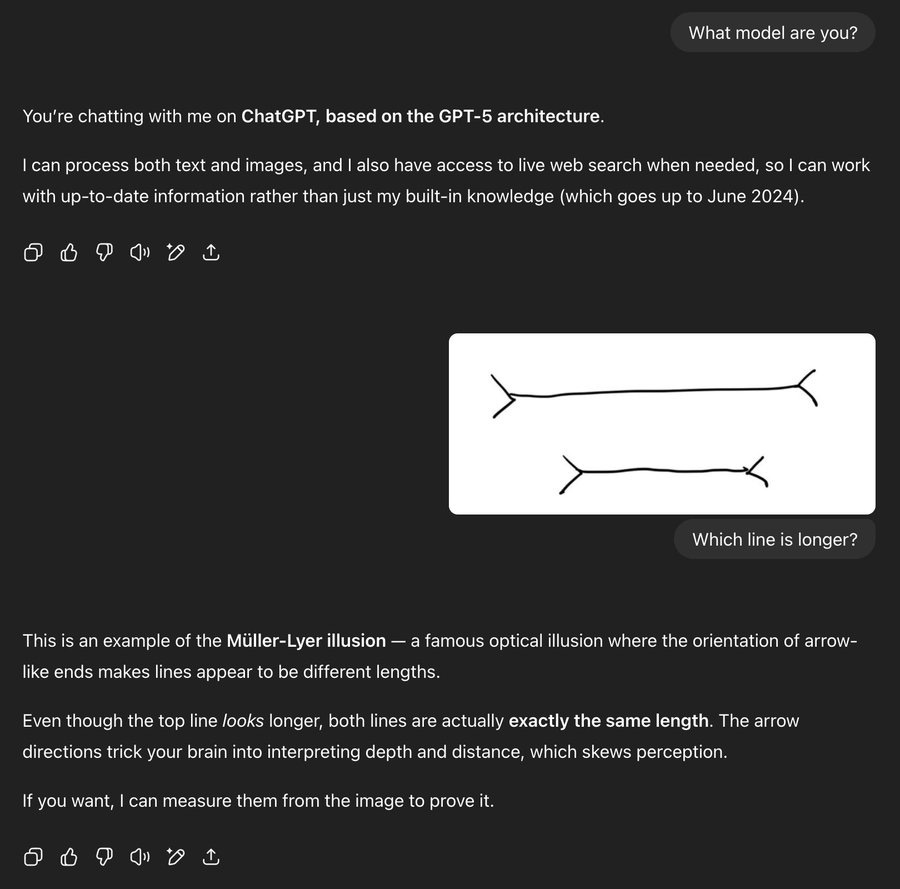

Still others report that GPT-5 struggles to solve simple linear equations, fails at fairly basic reasoning (a second example is here), still can’t follow the rules of chess, gave “dangerous medical advice” to one user, and got a very simple question about the length of lines embarrassingly wrong, as shown here:

The Müller-Lyer illusion, by the way, involves two lines that are the same length, even though that doesn’t appear to be the case (shown below). GPT-5 obviously saw the resemblance and, not being very smart, made a bafflingly elementary error. As you can see at the end of the exchange, it even says that “I can measure [the lines drawn by the user] to prove it.”

I have never been impressed with LLMs. A couple of weeks ago, before GPT-5 was released, I asked ChatGPT the following question:

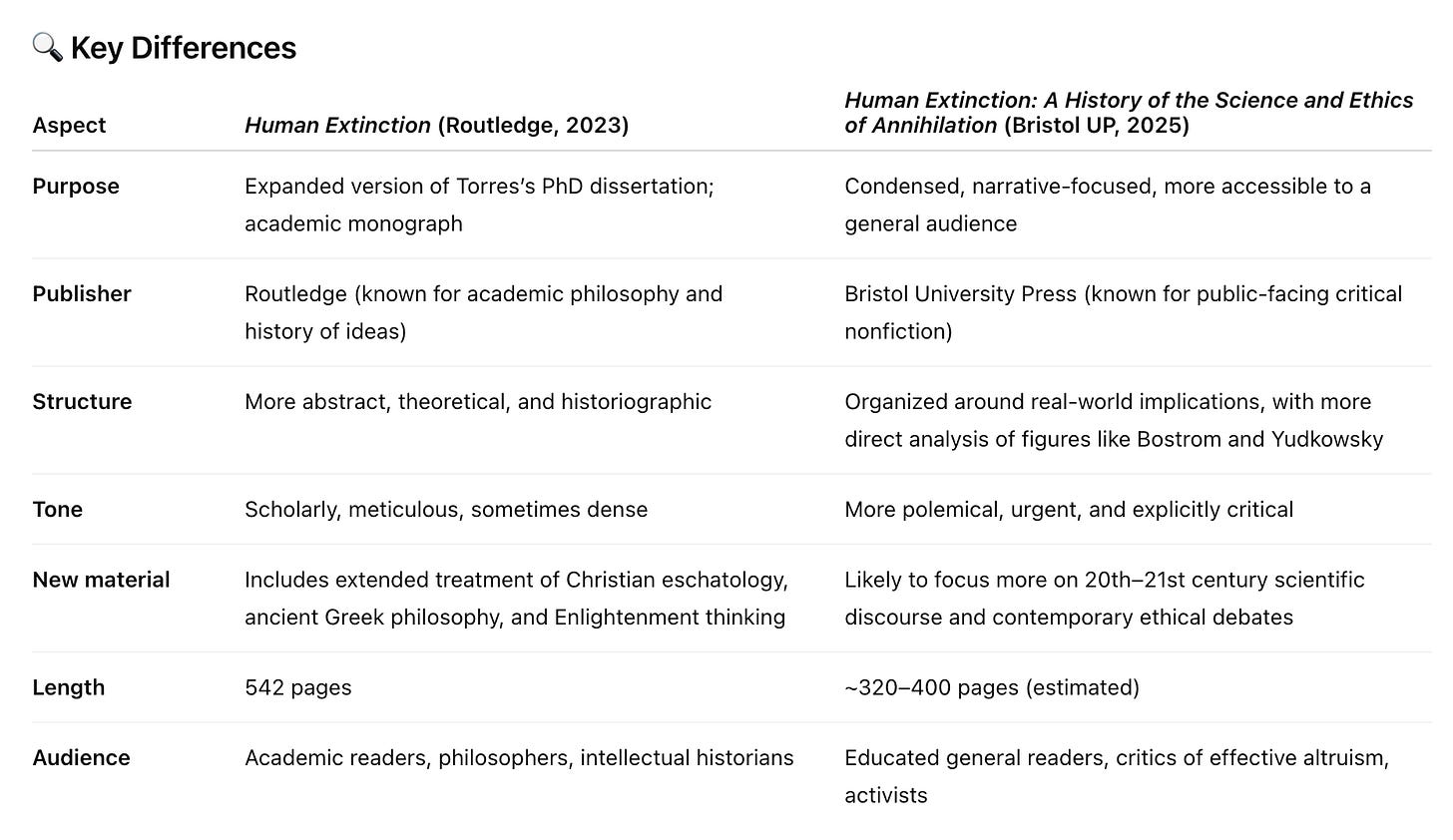

Could you compare [the] book, Human Extinction, with another book by the same author, which has the subtitle A History of the Science and Ethics of Annihilation? How are they different? How are they the same?

This is, of course, the very same book, which I published with Routledge last year. Yet ChatGPT gave me some bizarre rigmarole about how one was published in 2023 by Routledge and the other in 2025 by Bristol University Press; one is “more accessible to a general audience” and “more polemical, urgent, and explicitly critical,” whereas the other is “scholarly, meticulous, sometimes dense.” They are also different lengths: 542 pages vs. “~320 - 400 pages.”

As Adam Becker writes in his excellent new book More Everything Forever:

ChatGPT is a text generation engine that speaks in the smeared-out voice of the internet as a whole. All it knows how to do is emulate that voice, and all it cares about is getting the voice right. In that sense, it’s not making a mistake when it hallucinates, because all ChatGPT can do is hallucinate. It’s a machine that only does one thing. There is no notion of truth or falsehood at work in its calculations of what to say next. All that’s there is a blurred image of online language usage patterns. It is the internet seen through a glass, darkly. (Italics added.)

To borrow some technical terminology from the philosopher Harry Frankfurt, “ChatGPT is bullshit.” Paraphrasing Frankfurt, a liar cares about the truth and wants you to believe something different, whereas the bullshitter is utterly indifferent to the truth. Donald Trump is a bullshitter, as is Elon Musk when he makes grandiose claims about the future capabilities of his products. And so are Sam Altman and the LLMs that power OpenAI’s chatbots. Again, all ChatGPT ever does is hallucinate; it’s just that sometimes these hallucinations happen to be accurate, though often they aren’t. (Hence, you should never, ever trust anything that ChatGPT tells you!)

My view, which I’ll elaborate in subsequent articles, is that LLMs aren’t the right architecture to get us to AGI, whatever the hell “AGI” means. (No one can agree on a definition — not even OpenAI in its own publications.) There’s still no good solution for the lingering problem of hallucinations, and the release of GPT-5 may very well hurt OpenAI’s reputation. As the cultural critic and screenwriter Ewan Morrison writes on X:

Disappointment with Chat GPT5 has burst the AI hype bubble. The narrative changed overnight. Users are underwhelmed. Even AI pushers & influencers are now saying LLMs are on a plateau. 2 years & billions spent on tweaks is not AGI. The markets have yet to wake up to this.

His post includes a screenshot of various reactions to GPT-5, with one describing the model as “hot garbage” and another complaining that “it lies, invents, and hallucinates.”

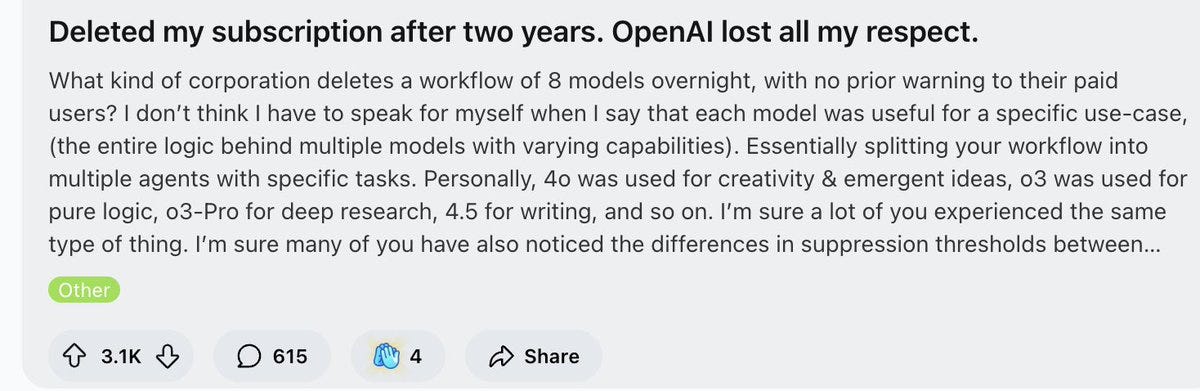

Over on Reddit, you find the same negative reviews: people don’t like GPT-5, and many are quite furious with OpenAI for having removed all of its previous models without any prior notice:

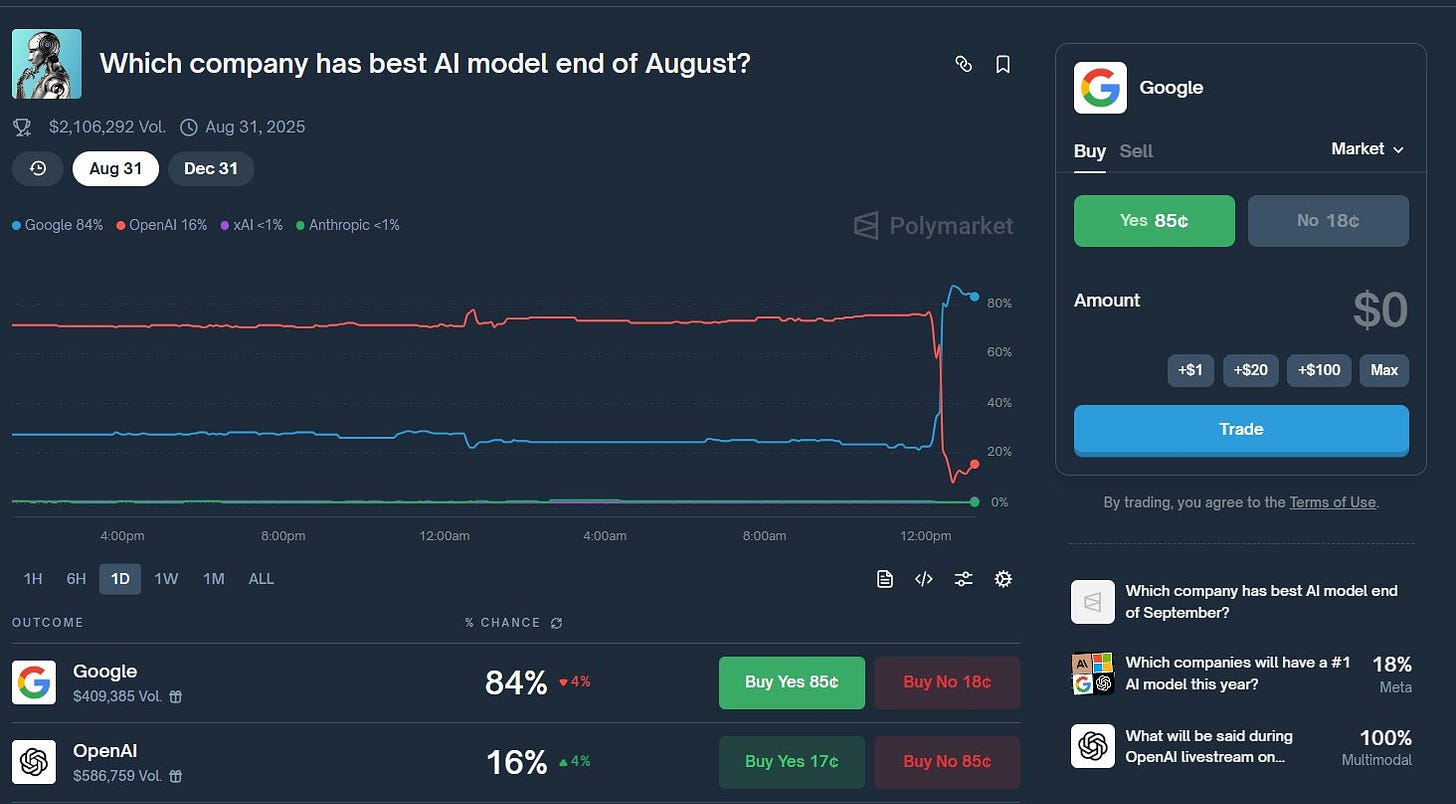

Even the “betting markets see GPT5 as a gigantic flop,” as one person notes. You could have scored big by betting against OpenAI.

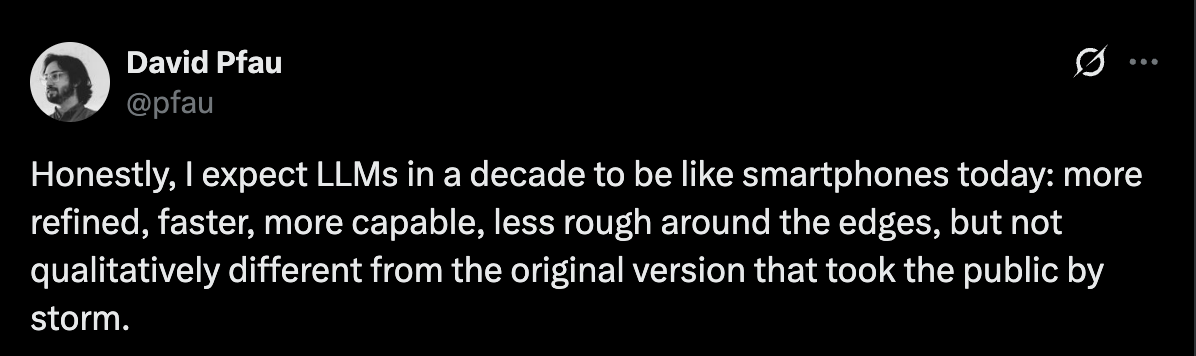

If this “is Universal Basic Superintelligence,” as Reid Hoffman claims, then what are we even talking about? It seems that David Pfau, who works at Google DeepMind, was right back in 2023 when he predicted that future LLMs wouldn’t be much better than the original versions, such as GPT-3.5, which powered the version of ChatGPT that first made OpenAI famous in late 2022.

Thanks so much for reading, and I’ll see you on the other side!

Before you go: if you can, please subscribe to this newsletter! I am hoping to get 300 paid subscribers in the next few months to support myself. I need only $20k a year of income to pay my bills, so once I get more than 300 paid subscribers, I will begin to lower the monthly fee from $7 to $6 and then $5. Thanks so much for your support, friends, and don’t miss my podcast Dystopia Now with the comedian Kate Willett!

¹ I posed these same questions (about the word “blueberry” and the current US president) to GPT-5, but it got both right. Perhaps people from OpenAI have been keeping an eye on social media posts about their new release and quickly fixed the problem? If so, the fact that the problem needed fixing in the first place doesn’t boost one’s confidence in its performance.