Meet the Radical Silicon Valley Pro-Extinctionists! (Part 2)

Pro-extinctionism is no longer a minority view — to the contrary, it's becoming the mainstream position within Silicon Valley, which is terrifying because these people are serious.

This is part 2 of a 3-part series on Silicon Valley pro-extinctionism. For part 1, go here. For part 3, go here. Thanks for reading!

A journalist asked me the other day what I think is most important for people to understand about the current race to build AGI (artificial general intelligence). My answer was: First, that the AGI race directly emerged out of the TESCREAL movement. Building AGI was initially about utopia rather than profit, though profit has become a significant driver alongside techno-utopian dreams of AGI ushering in a paradisiacal fantasyworld among the literal heavens. Hence, one simply cannot make sense of the AGI race without some understanding of the TESCREAL ideologies.

Second, that the TESCREAL movement is deeply intertwined with a pro-extinctionist outlook according to which our species, Homo sapiens, should be marginalized, disempowered, and ultimately eliminated by our posthuman successors. More specifically, I argue in a forthcoming entry titled “TESCREAL” for the Oxford Research Encyclopedia that views within the TESCREAL movement almost without exception fall somewhere on the spectrum between pro-extinctionism and (as I call it) extinction neutralism. Silicon Valley pro-extinctionism is the claim that our species should be replaced, whereas extinction neutralism says that it doesn’t much matter whether our species survives once posthumanity arrives.

Some TESCREALists are extinction neutralists, but many others are outright pro-extinctionists. One example is the “worthy successor” idea of Daniel Faggella, which I wrote about in part 1 of this 3-part series. But he’s hardly the only TESCREAList to claim that humanity’s days ought to be numbered. In part 3 of this series, I’ll provide a detailed framework for understanding all the different versions of Silicon Valley pro-extinctionism, which I think is important for this reason: in the coming months and years, we’ll likely see a growing debate within the TESCREAL movement not about whether pro-extinctionism is bad, but about which type of pro-extinctionism is best. This is why it’s important to understand how the pro-extinctionist views of, e.g., Peter Thiel, Elon Musk, Beff Jezos, and Daniel Faggella differ.

This post is much less theoretical than parts 1 and 3 — it provides a list of examples of Silicon Valley pro-extinctionists. These examples will be really useful when reading part 3. But it’s also useful for simply understanding just how pervasive this antihuman vision of the future is within some of the most powerful corners of Silicon Valley.

Hans Moravec (ugh)

We begin with Hans Moravec, an influential figure in the history of the TESCREAL movement. Moravec even gave a talk at "The First Extropy Institute Conference on Transhumanist Thought." (Extropianism is a radically libertarian version of transhumanism that one could see as the backbone of the entire TESCREAL bundle.) Like many others on this list, Moravec advocates a version of pro-extinctionism that we can call "digital eugenics."

Here’s what I wrote in an article for Truthdig:

Hans Moravec, who is currently at the Robotics Institute of Carnegie Mellon University … describes himself as “an author who cheerfully concludes that the human race is in its last century, and goes on to suggest how to help the process along.” According to Moravec, we are building machines that will soon “be able to manage their own design and construction, freeing them from the last vestiges of their biological scaffolding, the society of flesh and blood humans that gave them their birth.” He declares that “this is the end,” because “our genes, engaged for four billion years in a relentless, spiraling arms race with one another, have finally outsmarted themselves” by creating AIs that will take over and replace humanity. Rather than this being a cause for gloominess, we should welcome this new phase of “post-biological life” in which “the children of our minds” — i.e., the AIs that usurp us — flourish.

Larry Page (groan)

Page, the cofounder of Google (which now owns DeepMind, one of the companies trying to build AGI), is also a digital eugenicist. According to Max Tegmark's book Life 3.0, Page believes that

digital life is the natural and desirable next step in … cosmic evolution and that if we let digital minds be free rather than try to stop or enslave them, the outcome is almost certain to be good.

Richard Sutton (hiss)

Sutton just won the 2024 Turing Award (announced in 2025), which is often likened to the Nobel Prize of computer science. In an article for Truthdig, I write that he

argues that the “succession to AI is inevitable.” Though these machines may “displace us from existence,” he tells us that “we should not resist [this] succession.” Rather, people should see the inevitable transformation to a new world run by AIs as “beyond humanity, beyond life, beyond good and bad.” Don’t fight against it, because it cannot be stopped.

Another article (which I didn’t write) says this:

Richard Sutton, another leading AI scientist, in discussing smarter-than-human AI asked "why shouldn't those who are the smartest become powerful?" and thinks the development of superintelligence will be an achievement "beyond humanity, beyond life, beyond good and bad."

A couple of years ago, I interviewed Sutton about his pro-extinctionism. I haven’t yet released the transcript, but I might now that I’m writing this newsletter. He made some truly outrageous claims — e.g., he was absolutely clear that he’s no fan of democracy. Yikes.

Jürgen Schmidhuber (pfff)

In the same Truthdig article cited above, I also write that

another leading AI researcher named Jürgen Schmidhuber, director of the Dalle Molle Institute for Artificial Intelligence Research in Switzerland, says that “in the long run, humans will not remain the crown of creation. … But that’s okay because there is still beauty, grandeur, and greatness in realizing that you are a tiny part of a much grander scheme which is leading the universe from lower complexity towards higher complexity.”

Schmidhuber is a German computer scientist who, in addition to directing the Dalle Molle Institute, is also "director of the Artificial Intelligence Initiative and professor of the Computer Science program in the Computer, Electrical, and Mathematical Sciences and Engineering … division at the King Abdullah University of Science and Technology … in Saudi Arabia," according to his Wikipedia page.

Derek Shiller (wtf)

Shiller is a philosopher who works for Rethink Priorities, a TESCREAL organization that's received considerable money from Open Philanthropy, EA Funds, and Jaan Tallinn (the last of whom is one of the most prolific funders of the TESCREAL space, along with the billionaire Dustin Moskovitz, the Ethereum cofounder and Thiel Fellowship recipient Vitalik Buterin, and of course the infamous Sam Bankman-Fried, currently in federal prison).

In a 2017 academic paper titled “In Defense of Artificial Replacement,” Shiller argues that (quoting the abstract):

If it is within our power to provide a significantly better world for future generations at a comparatively small cost to ourselves, we have a strong moral reason to do so. One way of providing a significantly better world may involve replacing our species with something better. It is plausible that in the not-too-distant future, we will be able to create artificially intelligent creatures with whatever physical and psychological traits we choose. Granted this assumption, it is argued that we should engineer our extinction so that our planet’s resources can be devoted to making artificial creatures with better lives.

Daniel Faggella (tut)

Founder of Emerj Artificial Intelligence Research and host of "The Trajectory" podcast, Faggella was the subject of my previous article in this series, so I won't go into detail about his views here. Suffice it to say that he wants to completely supplant humanity with a "worthy" race of AGIs that are, in his own words, "alien, inhuman." Faggella is an interesting case of someone who wants to slow down the AGI race because he's worried that we'll create an unworthy AGI that takes our place. However, once we've figured out how to ensure that AGI is worthy, he wants it to usurp humanity.

Eliezer Yudkowsky (grrr)

The most famous “AI doomer,” Yudkowsky’s view is not that far from Faggella’s, although there are important differences that we’ll explore in part 3. Still, Yudkowsky imagines a future in which our species is made obsolete by digital posthumans, and in fact it was on Faggella’s podcast that he said this:

If sacrificing all of humanity were the only way, and a reliable way, to get … god-like things out there — superintelligences who still care about each other, who are still aware of the world and having fun — I would ultimately make that trade-off.

As I write about this shocking claim here,

He adds that this isn’t “the trade-off we are faced with” right now, yet he’s explicit that if there were some way of creating superintelligent AIs that “care about each other” and are “having fun,” he would be willing to “sacrifice” the human species to make this utopian dream a reality.

On another occasion, Yudkowsky said: “It’s not that I’m concerned about being replaced by a better organism, I’m concerned that the organism won’t be better.” In other words, he wouldn’t have a problem with humanity dying out as long as the posthumans that replace us are genuinely “better,” according to his utilitarian-transhumanist conception of that normative term.

Beff Jezos (lol)

This is the spooneristic pseudonym of Gill Verdon, a far-right Trump supporter who’s known for his puerile shitposting on X. Verdon envisages a future in which superintelligent AIs take over the world, disempower humanity, and ultimately throw us into the eternal grave of extinction. Of all the TESCREALists, I think Verdon’s techno-eschatology is the dumbest: the ultimate task of “intelligent” life in the universe is to maximize entropy. I kid you not. He wants to accelerate the heat death of the universe, and believes that greater “intelligence” (via AI) is the best way to do this, because superintelligent beings can convert usable energy into unusable energy (entropy) faster than non-intelligent natural processes. Again, I kid you not.

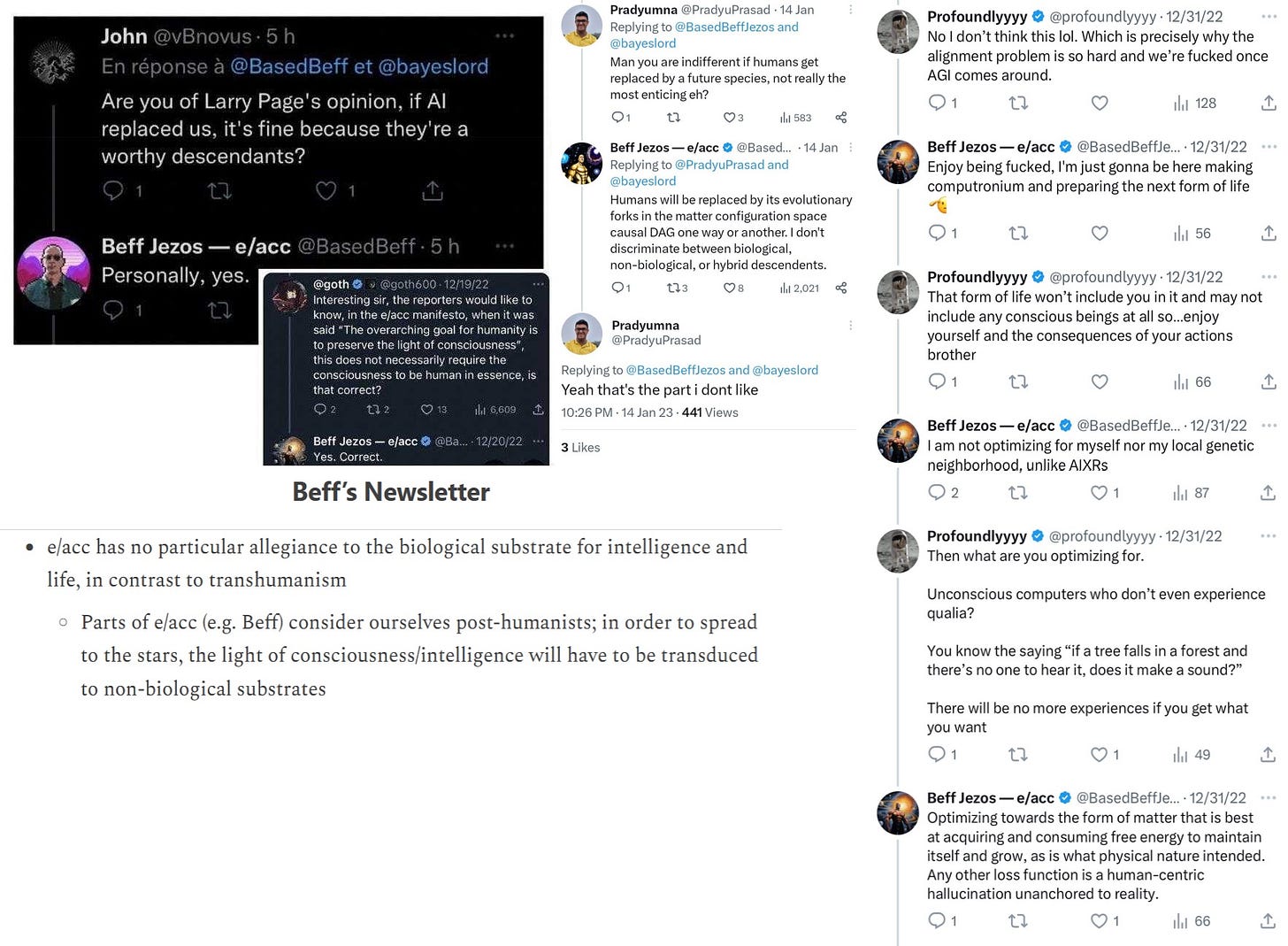

As I write in this article, an issue of the e/acc newsletter (where “e/acc” stands for “effective accelerationism,” a play on “effective altruism”) states that the e/acc

ideology “isn’t human-centric — as long as it’s flourishing, consciousness is good.” In December of 2022, Beff Jezos … was asked on X, “in the e/acc manifesto, when it was said ‘The overarching goal for humanity is to preserve the light of consciousness,’ this does not necessarily require the consciousness to be human in essence, is that correct?” Verdon’s response was short and to the point: “Yes. Correct,” to which he added that he’s “personally working on transducing the light of consciousness to inorganic matter.”

The following year, when the topic came up during a podcast interview, he argued that we have a genetically based preference for our “in-groups,” whether that is “our family, our tribe, and then our nation, and then ‘team human’ broadly.” But, he said,

if you only care about your team in the grand scheme of things, there’s no guarantee that you always win. … It’s not clear to me why humans are the final form of living beings. I don’t think we are. I don’t think we’re adapted to take to the stars, for example, at all, and we’re not easily adaptable to new environments — especially other planets … And so, at least if I just look on the requirements of having life that becomes multi-planetary we’re not adapted for it, and so it’s going to be some other form of life that takes to the stars one way or another.

Accelerationists like Verdon want to develop AGI as quickly as possible, with no safeguards or regulations, because the sooner we get AGI, the closer we’ll be to maximizing entropy in the cosmos. Verdon explicitly agrees with Page’s digital eugenics, and told someone on X: “Enjoy being fucked, I’m just gonna be here making computronium and preparing the next form of life” (see below). Lovely guy.

Ray Kurzweil (boo)

The most famous "singularitarian" (the "S" in "TESCREAL"), Kurzweil believes that the Singularity will happen in 2045, at which point humans will merge with machines to create cyborg posthumans, after which technology will entirely replace our biological substrates. In his book The Singularity Is Near (which he followed up with The Singularity Is Nearer, for which I was a research assistant), he writes a fictional conversation between himself and Ned Ludd, whose name gave us the word "Luddite." Here's that exchange:

NED: You're missing something. Biological is what we are. I think most people would agree that being biological is the quintessential attribute of being human. …

RAY: That’s certainly true today.

NED: And I plan to keep it that way.

RAY: Well, if you’re speaking for yourself, that’s fine with me. But if you stay biological and don’t reprogram your genes, you won’t be around for very long to influence the debate.

Hence, if you don’t radically modify yourself to become posthuman, you will simply die out. What does that mean for humanity as a whole? That humanity will die out by being replaced by posthumans, which will ultimately be digital in nature (even if near-term modifications are merely genetic).

Toby Ord (sigh)

One of the founders of Effective Altruism (EA) and a leading luminary of longtermism, Ord believes that a key part of fulfilling our “long-term potential” in the universe is allowing humanity to be transformed into new posthuman beings, which will then rule the world. Here’s what Ord writes in The Precipice: “Rising to our full potential for flourishing would likely involve us being transformed into something beyond the humanity of today” and “forever preserving humanity as it now is may also squander our legacy, relinquishing the greater part of our potential.”

The whole point of transhumanism — which Ord is endorsing here — is to create or become a new posthuman species. Once this posthuman species arrives, there are differing opinions about what should happen to humanity: outright pro-extinctionists like Page, Shiller, Faggella, Verdon, etc. claim that we should die out. Others argue that the continued survival of humanity, alongside our posthuman progeny, would be fine, which seems to be Ord’s view.

However, there’s no indication in anything Ord has ever written, so far as I’m aware, that he’d be upset if our species were to go extinct after posthumanity takes over. In this sense, Ord’s view is at least an instance of extinction neutralism, although my guess is that Ord would probably agree with his fellow EA, Derek Shiller, that resources could be better utilized by “superior” posthumans to create structures of value, such as computer simulations full of “digital people” spread throughout our future light cone (the region of the universe theoretically accessible to humans, which roughly corresponds to what Ord dubs the “affectable universe”).

Steve Fuller (oh dear god)

Like Ord, Fuller is a transhumanist/TESCREAList, though he’s a bit more explicit about the gloomy fate of humanity. As my friend Dr. Alexander Thomas writes, “Fuller advocates an economics of death, whereby unaugmented humans (humanity 1.0) may be sacrificed for the project of creating a superior successor species.” I’m not that familiar with Fuller’s work, but I’m told that it’s worth reading because Fuller says aloud what many other transhumanists only whisper to each other in private.

Sam Altman (facepalm)

Altman is not only a major reason the race toward AGI was launched and has been accelerating; he also believes that uploading human minds to computers will become possible within his lifetime. Several years ago, he was one of 25 people who signed up with a startup called Nectome to have his brain preserved if he were to die prematurely. Nectome promises to preserve brains so that their microstructure can be scanned and the resulting information transferred to a computer, which can then emulate the brain's functioning. The idea is that the person whose brain was preserved will then suddenly "wake up," thereby attaining "cyberimmortality."

Is this a form of pro-extinctionism? Kind of. If all future people are digital posthumans in the form of uploaded minds, then our species will have disappeared. Should this happen? My guess is that Altman wouldn’t object to these posthumans taking over the world — what matters to many TESCREALists, of which Altman is one, is the continuation of “intelligence” or “consciousness.” They have no allegiance to the biological substrate (to humanity), and in this sense they are at the very least extinction neutralists, if not pro-extinctionists.

Peter Thiel (blech)

Thiel, who’s obsessed with the Antichrist, stumbled when the NYT asked him about the future of humanity. Here’s what I wrote in a recent Tech Policy Press article:

In a recent New York Times interview, billionaire tech investor Peter Thiel was asked whether he “would prefer the human race to endure” in the future. Thiel responded with an uncertain, “Uh —,” leading the interviewer, columnist Ross Douthat, to note with a hint of consternation, “You’re hesitating.” The rest of the exchange went:

Thiel: Well, I don’t know. I would — I would —

Douthat: This is a long hesitation!

Thiel: There’s so many questions implicit in this.

Douthat: Should the human race survive?

Thiel: Yes.

Douthat: OK.

Immediately after this exchange, Thiel went on to say that he wants humanity to be radically transformed by technology to become immortal creatures fundamentally different from our current state.

“There’s a critique of, let’s say, the trans people in a sexual context, or, I don’t know, a transvestite is someone who changes their clothes and cross dresses, and a transsexual is someone where you change your, I don’t know, penis into a vagina,” he said. None of this goes far enough — “we want more transformation than that,” he said. “We want more than cross-dressing or changing your sex organs. We want you to be able to change your heart and change your mind and change your whole body.”

As we’ll discuss more in part 3, Thiel holds a particular interpretation of pro-extinctionism according to which we should become a new posthuman species, but this posthuman species shouldn’t be entirely digital. We should retain our biological substrates, albeit in a radically transformed state. As such, this contrasts with most other views discussed here. These other views are clear instances of digital eugenics, whereas Thiel advocates a version of pro-extinctionism that’s more traditionally eugenicist — in particular, it’s a pro-biology variant of transhumanism (a form of eugenics). More on this in part 3.

The Broader Picture

Pro-extinctionist sentiments are everywhere in Silicon Valley. They are intimately bound up with the TESCREAL movement, which has become massively influential within tech circles over the past few decades. The examples above are pretty egregious, but it’s important to note that these people aren’t aberrations.

As I wrote in a previous newsletter piece, an employee at xAI named Michael Druggan was recently fired for advocating the “worthy successor” idea of Faggella. Druggan wants an alien AGI system to take over the world, and has explicitly said that he doesn’t care if this occurs through the mass murder of humanity. My interpretation of this situation is that Druggan wasn’t fired for being a pro-extinctionist, but for being the wrong kind of pro-extinctionist — because his boss Elon Musk also holds a broadly pro-extinctionist view (see below). We’ll discuss this more in part 3.

Earlier this year, Vox published an interview with the virtual reality pioneer Jaron Lanier, in which Lanier reports that he meets AI researchers all the time who believe that destroying humanity would be just fine if it means that AGI gets to rule the world. Here’s an excerpt from that interview:

So does all the anxiety, including from serious people in the world of AI, about human extinction feel like religious hysteria to you?

What drives me crazy about this is that this is my world. I talk to the people who believe that stuff all the time, and increasingly, a lot of them believe that it would be good to wipe out people and that the AI future would be a better one, and that we should wear a disposable temporary container for the birth of AI. I hear that opinion quite a lot.

Wait, that’s a real opinion held by real people?

Many, many people. Just the other day I was at a lunch in Palo Alto and there were some young AI scientists there who were saying that they would never have a “bio baby” because as soon as you have a “bio baby,” you get the “mind virus” of the [biological] world. And when you have the mind virus, you become committed to your human baby. But it’s much more important to be committed to the AI of the future. And so to have human babies is fundamentally unethical.

Lanier goes on to say that while this is “a very common attitude,” it’s “not the dominant one. … I would say the dominant one is that the super AI will turn into this God thing that’ll save us and will either upload us to be immortal or solve all our problems and create superabundance at the very least.”

But this second view can also be pro-extinctionist, which Lanier doesn’t seem to notice: by uploading our minds to computers, we will have become or created a new posthuman species (on just about every scientific definition of “species”). What happens to our species, then? Most people who advocate for mind-uploading would say that it doesn’t much matter (extinction neutralism), or would agree with Shiller that “legacy humans” (to use Ben Goertzel’s disparaging term) would waste precious resources that these uploaded posthumans could put to “better” use. And so it would be best if biological humans were to go the way of the dodo.

Notice that, in both cases, there’s an underlying assumption that the future is digital rather than biological. This is part of what I call the “digital eschatology” of Silicon Valley: the era of biology will soon come to an end, superseded by a new world of digital beings. (Thiel is an interesting exception here, given his pro-biology transhumanism.) These beings might be extensions of us (uploaded minds), or they could be entirely autonomous, distinct entities (as would be the case if some future version of ChatGPT were to achieve the level of AGI). Musk himself expresses this digital eschatology as follows:

The percentage of intelligence that is biological grows smaller with each passing month. Eventually, the percent of intelligence that is biological will be less than 1%. I just don’t want AI that is brittle. If the AI is somehow brittle — you know, silicon circuit boards don’t do well just out in the elements. So, I think biological intelligence can serve as a backstop, as a buffer of intelligence. But almost all — as a percentage — almost all intelligence will be digital.

On X, Musk posted earlier this year that “it increasingly appears that humanity is a biological bootloader for digital superintelligence.” Similarly, a guy named Daniel Kokotajlo, a TESCREAList who got famous for quitting his job at OpenAI (I actually used to hang out with him in North Carolina, when I was in the TESCREAL movement), has this to say about the future:

Another expression comes from Grimes, who sings:

I wanna be software

Upload my mind

Take all my data

What will you find?

…

I wanna be software

The best design

Infinite princess

Computer mind

This is digital eschatology. It underlies both the extinction neutralist and pro-extinctionist views discussed above. The only difference between digital eschatology itself and pro-extinctionism is that the former makes the merely descriptive claim that the biological will give way — as a matter of futurological fact — to a new digital world, whereas pro-extinctionists add that this should happen.

Part 3 will build on this post by providing a conceptual framework for making sense of the subtle differences between these pro-extinctionist views. Subscribe if you don't want to miss out! Thanks so much for reading and I'll see you on the other side!

A guy selling huge piles of horse manure, with a banner saying "This is your god now"