Superintelligent Machines, Mirror Bacteria, and the New Coalitions of "Upwingers" and "Downwingers"

I need your help!!

Back in 2023, the Future of Life Institute (FLI) released a statement signed by people like Yoshua Bengio, Stuart Russell, Elon Musk, and Steve Wozniak declaring:

We call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.

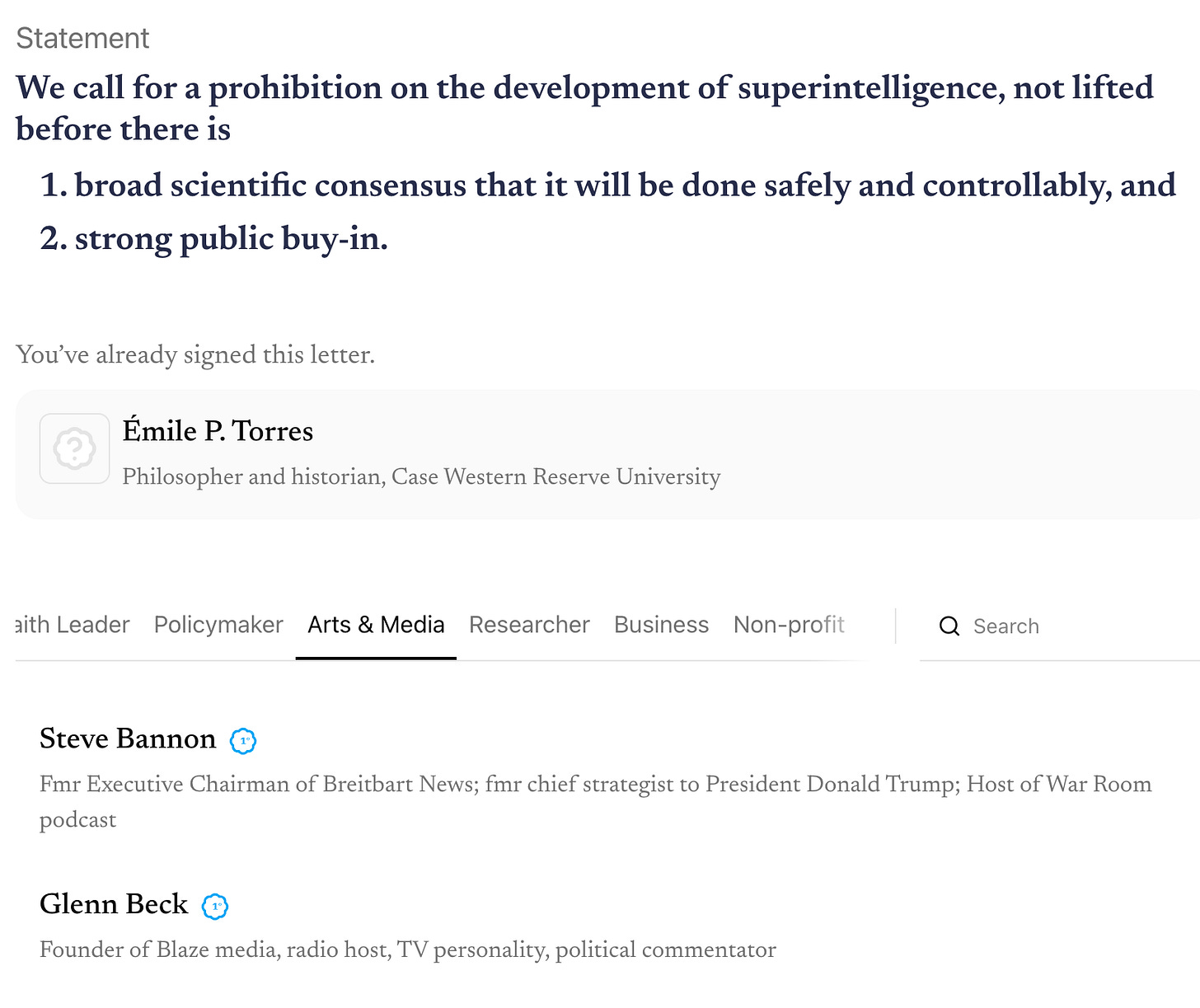

That received a lot of media attention, though it failed to slow down the race to build ever-larger LLMs (large language models). This is one reason that FLI released a new statement yesterday, which was also endorsed by a large number of notable figures both within and outside the tech world. It states:

We call for a prohibition on the development of superintelligence, not lifted before there is:

broad scientific consensus that it will be done safely and controllably, and

strong public buy-in.

I was one of about 800 people asked to sign the statement before it was publicly released, and I did. Other signatories include familiar names like Yoshua Bengio, Stuart Russell, Geoffrey Hinton, and Steve Wozniak, as well as Prince Harry, Meghan Markle, and Yuval Noah Harari. Also on the list are Steve Bannon and Glenn Beck. Yikes.

I didn’t know their names would be attached to this when I signed it. The fact that they’re also signatories raises an important ethical question for me: Would I have still signed the statement if I’d known beforehand?

Allow me to draw an analogy with something a bit out of left field: mirror life. The relevance of this example should become clear momentarily!

What the Heck Is Mirror Life?

In December of last year, nearly 40 prominent scientists signed an alarming article published in Science titled “Confronting Risks of Mirror Life.” It begins with the statement that “all known life is homochiral.” This means that entire classes of biological molecules (or biomolecules) have the same chirality — hence, homo-chiral.

Chirality is the property whereby an object, such as a molecule, cannot be superimposed on its mirror image. Your hands have this property: if you clench your right fist with your thumb sticking up, and then hold it up to the mirror, the image you see in the mirror will be of a left hand (obviously!). So, replace the mirror image with your actual left hand and then ask: is there any way to rotate your right hand so that it fits the 3D structure of your left hand? The answer is “no.” That’s why a glove that’s specifically designed for your right hand won’t fit your left hand (or at least not comfortably!).

This is chirality. Plenty of objects aren’t chiral: pencils, hammers, umbrellas, coffee cups, a plain t-shirt, etc. You can superimpose these objects onto their mirror images. However, as noted above, large classes of biomolecules are not just chiral, but homochiral: they all have the same chirality. That is to say, DNA and RNA consist of nucleotides (the building blocks of these macromolecules) that are all “right-handed,” while proteins are made up of amino acids that are all “left-handed.” No one really knows why life evolved such that these biomolecules are all homochiral. It’s a bit of a mystery!

The point: it is theoretically possible to create new forms of life built on biomolecules with the opposite chirality. This is what the term “mirror life” refers to. And the possibility of mirror life is extremely worrisome because, as the authors write,

mirror bacteria would likely evade many immune mechanisms mediated by chiral molecules, potentially causing lethal infection in humans, animals, and plants. They are likely to evade predation from natural-chirality phage and many other predators, facilitating spread in the environment. We cannot rule out a scenario in which a mirror bacterium acts as an invasive species across many ecosystems, causing pervasive lethal infections in a substantial fraction of plant and animal species, including humans. Even a mirror bacterium with a narrower host range and the ability to invade only a limited set of ecosystems could still cause unprecedented and irreversible harm. … [A] close examination of existing studies led us to conclude that infections could be severe.

They add:

We call for additional scrutiny of our findings and further research to improve understanding of these risks. However, in the absence of compelling evidence for reassurance, our view is that mirror bacteria and other mirror organisms should not be created. We believe that this can be ensured with minimal impact on beneficial research and call for broad engagement to determine a path forward.

This looks pretty terrifying: a completely novel form of life that’s more or less entirely invisible to immunological systems could spread across human populations and global ecosystems, potentially precipitating a mass extinction event that catapults our species into the eternal grave — along with millions of other species.

When I half-jokingly talk about humanity being “superfucked” this century (that term is, basically, a description of the polycrisis), this is partly what I’m referring to: global catastrophe scenarios are proliferating. Nearly all of these scenarios have been enabled by advanced science and technology, and literally could not have occurred just 80 years ago. The obstacle course of existential hazards that we’ll have to slalom through this century is rapidly complexifying: it’s not one big risk that we must confront, but a rapidly growing multiplicity of unprecedented dangers to our collective survival. That’s why our situation is superfucked up.

Banning “Mirror Life”: Bacteria and ASI

Now, imagine that these scientists put together a statement almost exactly like the one that FLI just released about superintelligence:

We call for a prohibition on the development of mirror life, not lifted before there is:

broad scientific consensus that mirror life will be synthesized safely and controllably, and

strong public buy-in.

I mention this because it (a) parallels the FLI statement about superintelligence, and (b) strikes me as more straightforward than the FLI statement, due in part to the idea of “superintelligence” being more controversial than that of “mirror bacteria.” So, if you were asked to sign this statement, would you? If you knew that Bannon and Beck had also signed, would this change your mind?

I am more or less thinking out loud here, working through these ideas in real time: I think I’d still sign the statement, even if execrable figures had also signed. Because, you know, mirror life is potentially very dangerous. There are virtually zero issues that I agree with Bannon and Beck about, but the fact that we vehemently disagree about nearly everything doesn’t seem like the sort of thing that would, or should, prevent me from adding my signature to: “Let’s not try to develop mirror life!”

The same could be said about “superintelligence,” which one could conceptualize as a different kind of “mirror life”: not artificial bacteria, but artificial information processing systems. I agree with the exact wording of FLI’s statement: there needs to be a scientific consensus and full democratic approval before anyone builds “superintelligence.” That’s why I signed, though I don’t agree with everything that FLI representatives say about the risks of advanced AI …

Quick Tangent on What I Think FLI Gets Wrong

For example, Time reports that

organizers said they decided to mount a new campaign, with a more specific focus on superintelligence, because they believe the technology — which they define as a system that can surpass human performance on all useful tasks — could arrive in as little as one to two years. “Time is running out,” says Anthony Aguirre, the FLI’s executive director, in an interview with TIME.

Do I think, as Aguirre suggests, that we’re close to building superintelligence? No, as I wrote here! Will scaling up LLMs lead to superintelligence? Almost certainly not! I think Gary Marcus is right that LLMs are hitting a brick wall, and we’ll need an entirely new paradigm to get us there — such as neurosymbolic AI. (Though I strongly oppose efforts to build neurosymbolic “superintelligence,” too, a point on which Marcus and I disagree.)

But we haven’t yet addressed the elephant in the room: what the heck does “superintelligence” even mean? I find the term extremely unhelpful, and have noted many times that it exists within, and is a continuation of, a deeply problematic and misguided tradition of thinking about “intelligence” that we inherited from the 20th-century eugenics movement.

There is, however, a related concept that I can make sense of, and which I don’t think is problematic the way “superintelligence” is. What if we build a system that can outmaneuver us in every important sense, solve complex problems faster than we can, act in ways that are functionally “agentic,” etc.? What then? I think we’d be in big trouble. This would be, once again, a different kind of “mirror life,” and I think the outcome might be exactly the same as if we created bacteria composed of biomolecules with the opposite chirality.

I don’t know if such a system is even possible to build, but if it is and if we build it, why would anyone think that we’d survive? I can’t find a comforting answer to that question, and no one has yet provided one to me.

Is this sci-fi? Yes, right now, but so are mirror bacteria. Yet I don’t think it’s unreasonable for scientists to demand a moratorium on developing such bacteria, even though those scientists themselves say that the “capability to create mirror life is likely at least a decade away and would require large investments and major technical advances.” But this is precisely why we now “have an opportunity to consider and preempt risks before they are realized.” The parallels with “superintelligence” are, to my eye, quite striking.

I should also add that, unlike many “doomers” anxious about superintelligence taking over the world, I’m also extremely worried about the push to try to build superintelligence even if superintelligence is, in fact, impossible. Simply trying to create such a system is having, and will continue to have, hugely negative consequences for society. Look no further than the problems of disinformation, deepfakes, and slop caused by LLMs, which people like Sam Altman see as stepping stones to superintelligent computers.

Here I’d argue that one can’t separate concerns about “near-term” issues (often dismissed by TESCREAL doomers) like social justice, algorithmic bias, environmental degradation, worker exploitation, etc. from “long-term” concerns about the survival of our species. This is another issue that Yudkowsky and Soares get embarrassingly wrong in their book If Anyone Builds It, Everyone Dies. If one wants to convince people to preserve the future, one must give people a vision of the future that’s worth preserving.

If the future is one in which humanity survives but social justice issues are sidelined, vulnerable communities are further disempowered or even eliminated, and so on, that’s not a future worth fighting for. If group X won’t survive even if humanity survives, then group X will have much less reason to join the fight. Hence, ensuring that the future is genuinely inclusive and democratically determined by the whole of humanity is inextricably bound up with the ongoing battle to protect humanity from the Agents of Doom (e.g., OpenAI) who are driving the current crisis.

Back to Bannon

Returning to questions about strategy: When, and to what extent — if ever — should one join hands with people who espouse abhorrent views for the sake of advancing a common cause that one considers very important (e.g., banning mirror bacteria or “superintelligent” machines)?

This is the topic of private conversations I’ve had with left-leaning friends for many months, especially since Bannon asked me to appear on his War Room podcast to discuss AI. I declined his invitation, and argued that one should never “make temporary friends” with fascists for any reason. At the very least, doing so risks critically compromising one’s moral integrity, and moral integrity trumps strategic alliances in all circumstances (or so I’d argue, given an absolutist deontological constraint against this). At the end of the day, what is more important than preserving one’s moral integrity? Literally nothing, in my opinion.

Yet the FLI statement that I signed includes Bannon’s name, which I found quite mortifying. I’m reminded here of transhumanists who, going back more than four decades, predicted that there would emerge a new political axis that’s orthogonal to the right-wingers vs. left-wingers axis. They called the two orthogonal poles up-wingers and down-wingers. The former are transhumanists who think we should develop technology, including superintelligent machines, to radically reengineer humanity, while the latter oppose this.

These transhumanists claimed that people on the traditional right and left would come together within these new political alliances. As the transhumanist Steve Fuller writes:

The “90-degree revolution” I foresee is that the communitarians and the traditionalists will team up to form the ‘down-winging’ Green pole, while the technocrats and the libertarians will join forces to form the ‘up-winging’ Black pole. … The geometry of the political imagery implies that the Left and the Right are each divided and then re-combined to form the Up and the Down.

There may be something to this prediction, as the FLI statement shows.

The normative question is whether we should be morally okay with this. When it comes to efforts to build God-like machines that replace humanity, backed by literally $1.5 trillion (that’s 15,000 instances of $100 million), should joining the chorus of people against such efforts depend on who else is in the chorus? If we’re talking about mirror-life bacteria, which could potentially destroy much of the biosphere, would you sign a statement alongside fascists to prevent this? To put it differently, how the hell should someone who cares about moral integrity navigate this impossibly difficult situation?

I want to say something like: “Sure, Bannon and I agree about the ASI race. But co-signing a document is the closest that I’ll ever get to ‘working with’ him. He can do what he wants to stop the juggernaut of Big Tech, and I’ll do my own separate thing in my own way — there will be zero collaboration beyond mere public statements.” But is this enough? Is drawing the line there morally judicious, or problematic and foolish in some insidious way that I’m not fully understanding?

Help!

What do you think? How do you think one should respond when one looks around at a down-wingers gathering and sees some truly horrendous people in attendance? I genuinely don’t know what the right answer is, and I’m bringing this up now because I suspect far-left folks like me will increasingly find ourselves in alignment — with respect to AI and radical human enhancement — with appalling folks like Bannon. In a phrase: help!

Thanks for reading and I’ll see you on the other side!

While I find Bannon and Beck abhorrent, I think you did the correct thing, given the context. The issues will not be solved, perhaps not effectively addressed, as long as we have to adhere to some notion of pure behavior. Morality is vital, critical, but we are not living in a world of moral clarity, and the stakes are too high. You have set limits you will not cross, though if things become worse, you may have to reset them. Being moral can never be about clinging to a strict rule while everything and everyone around you is burning. It has to be about observing both yourself and the world around you, or it becomes an empty exercise in virtue. I enjoyed this piece, and the insight into your thinking.

There will be fewer and fewer instances of moral clarity going forward. For a long time, I have personally taken the approach of comparing myself to a reed rather than an oak. It's not important to appear sturdy; it's important to stay firmly rooted even when the wind blows.

The issue is not whether dubious figures also sign something. The issue is when they have a hand in drafting it. For me, it was always a question of "Who wrote the open letter?" rather than "Who were the first figures to cosign it?" The first would raise several warning signs for me, and I would check every little nuance; with the second, I would always be fairly liberal.

We are going to be in coalitions we don't like. As the seas get rougher, we will find fewer and fewer people instantly aligned with us. That's ok. It's also about changing these along the way. And people can and should and have to change. It was never about purity. It was about saving shit. And that will lead to things like this. It's not pleasant, but it's going to be a necessity.