"Slopacalypse" Now: AI Is an Unmitigated Disaster for Society

Sora 2, deepfakes, disinformation, and slop — plus some hilarious bloopers!

Content warning: this article contains a single crude image (first one below) and several videos that, if watched, could potentially cause a slight decline in your cognitive abilities. I do not know if such deficits are reversible — so, read on with care, as the world of AI slop is really, really stupefying. Lol. :-)

“AI is the asbestos we are shoveling into the walls of our society and our descendants will be digging it out for generations,” says Cory Doctorow.

Is he wrong?

The profound dangers of AI slop, deepfakes, and disinformation polluting the information ecosystems of the Internet have never been clearer than they are now, in the wake of OpenAI releasing its video-generation app Sora 2 on September 30. We are now in the midst of what Gary Marcus calls the “slopacalypse” and Chomba Bupe dubs “Slopageddon.” As the New York Times recently reported, Facebook is overflowing with AI-generated disinformation and slop, such as the grotesque image below:

Returning to Sora 2, OpenAI started things off by sharing some unbelievably stupid videos, such as the one below in which Altman and his friend run to “Imaginationland.” I honestly feel dumber after having watched this:

Another video from OpenAI features a figure skater with a cat on her head and an astronaut dog catching tennis balls in microgravity, among other incredibly dumb scenes. I can’t watch such videos without feeling like a little bit of brain damage has been done:

What’s particularly alarming is the possibility of using apps like Sora 2 to generate videos of apparent crimes, as exemplified by this video, 48 seconds in. You can now make hyper-realistic videos of someone robbing a bank, stealing from stores, and getting arrested by police.

In fact, as of early October, the “most liked video on Sora 2 is an extremely realistic AI-generated CCTV footage of Sam Altman stealing GPUs at Target,” which you can watch below:

This is so incredibly dangerous! Stating the obvious: (a) such videos could undermine the value of video evidence in courtrooms (by planting seeds of doubt in the minds of jurors about the authenticity of videos presented); (b) they could be used to get someone in legal trouble, or to seriously harm someone’s reputation online; and (c) they could discredit genuine footage. Imagine that Altman really did rob a store: if there are hundreds of fake videos of him doing something similar, people might (reasonably) dismiss an authentic video as just another fake. Not good!

Another app, released on October 4 by a company called Decart, enables you to “undress anyone with AI — no consent needed!” A video posted by the company demonstrates this function, removing the shirts of women and replacing them with bikini tops. Holy shit.

(AI also enables you to do the opposite — to put clothes on people, as shown here.)

Some folks are obviously very upset about this. I, personally, don’t think people are freaking out enough.

George Orwell wrote in 1984: “The Party told you to reject the evidence of your eyes and ears. It was their final, most essential command.” But what happens when none of us can even trust our eyes and ears?

Trump himself posted a deepfake on X in which he throws a “Trump 2028” hat on someone’s head and then laughs (below). Most of Trump’s supporters, I would hazard a guess, have poor media literacy, and hence will (continue to) be completely unable to distinguish between real and fake videos like this:

Not Everyone Has Lost Their Mind

As you’d expect, there’s been considerable backlash to Sora 2 online. Some people posted that “the Sora app is the worst of social media and AI.” Others warned that “over these next few years it’s going to become more and more important that you resist letting slop consume you.”

Still others have been mocking the fact that OpenAI promised AGI and miracle cures for diseases, yet is now giving us “infinite slop machines that turn us into dopamine-addicted zombies.”

CNN published an article on October 3 noting that “for a brief moment in history, video was evidence of reality. Now it’s a tool for unreality.”

This is fascinating to reflect on: the article is right — there was a “brief moment” when video could provide incontrovertible evidence of, e.g., a crime being committed. But now, that evidentiary power is gone, thanks to companies like OpenAI. In a sense, we’re returning to a pre-video world, though in another sense we’re actually entering a radically different, entirely unprecedented era of mass informational chaos and anarchy, in which anyone can generate an image of someone committing a crime, of women with their shirts removed, etc. This is rather terrifying.

As Scott Rosenberg writes in an Axios article titled “AI Video’s Empty New World”:

Feeds, memes and slop are the building blocks of a new media world where verification vanishes, unreality dominates, everything blurs into everything else and nothing carries any informational or emotional weight.

The people — the demos — don’t want this. AI is being unilaterally forced upon society by huge companies run by billionaires with an infinite appetite for wealth and power. There is nothing democratic about this process — it is, and should be recognized as, a form of authoritarianism, as I suggested in my just-published interview with the pro-extinctionist Richard Sutton.

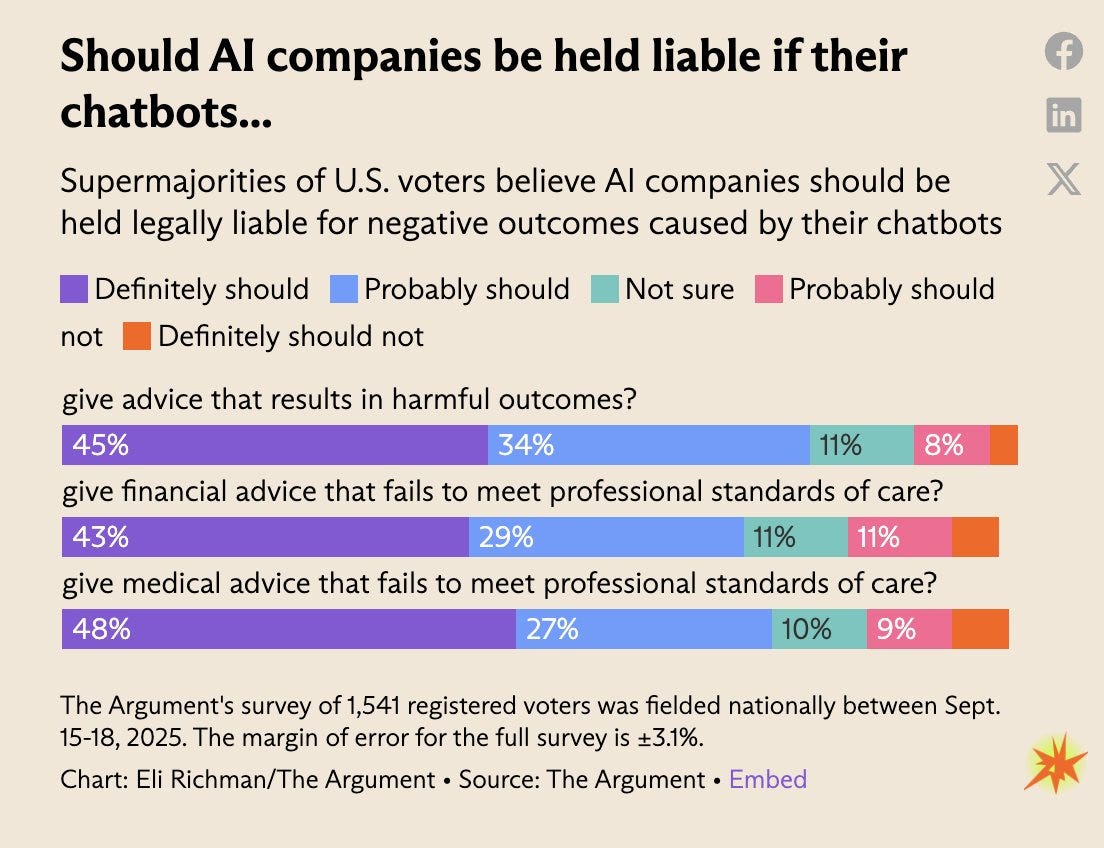

According to a recent survey, a supermajority of Americans think that AI companies “definitely should” or “probably should” be held liable if their chatbots “give advice that results in harmful outcomes,” or if they give bad financial or medical advice.

This comes on the heels of two parents testifying at a Senate hearing about how their son committed suicide after a “chatbot discourage[d] him to seek help from parents,” and “even offered to write his suicide note.” AI is causing real harms to actual humans, and so far there have been virtually zero repercussions for these companies (with the notable exception of Anthropic agreeing to pay $1.5 billion to settle a lawsuit over its use of pirated copyrighted material to train its AI models).

The Tragicomedy of Our Plight

But this fast-moving calamity isn’t just tragic — it’s also quite funny at times. You might say the fiasco is a tragicomedy or, depending on your perspective, a comitragedy. For example, I highly recommend this video if you need a chuckle:

A guy going by “Danny Lifted” on YouTube has a bunch of videos of him with his AI girlfriend. Many are equal parts amusing and creepy, such as:

Someone else posted a video of Sora 2 failing to generate an accurate video of someone counting from 1 to 10 on their fingers, leading Gary Marcus to quip: “phd level-work, for sure!” (a reference to Altman saying that GPT-5 has PhD-level capabilities).

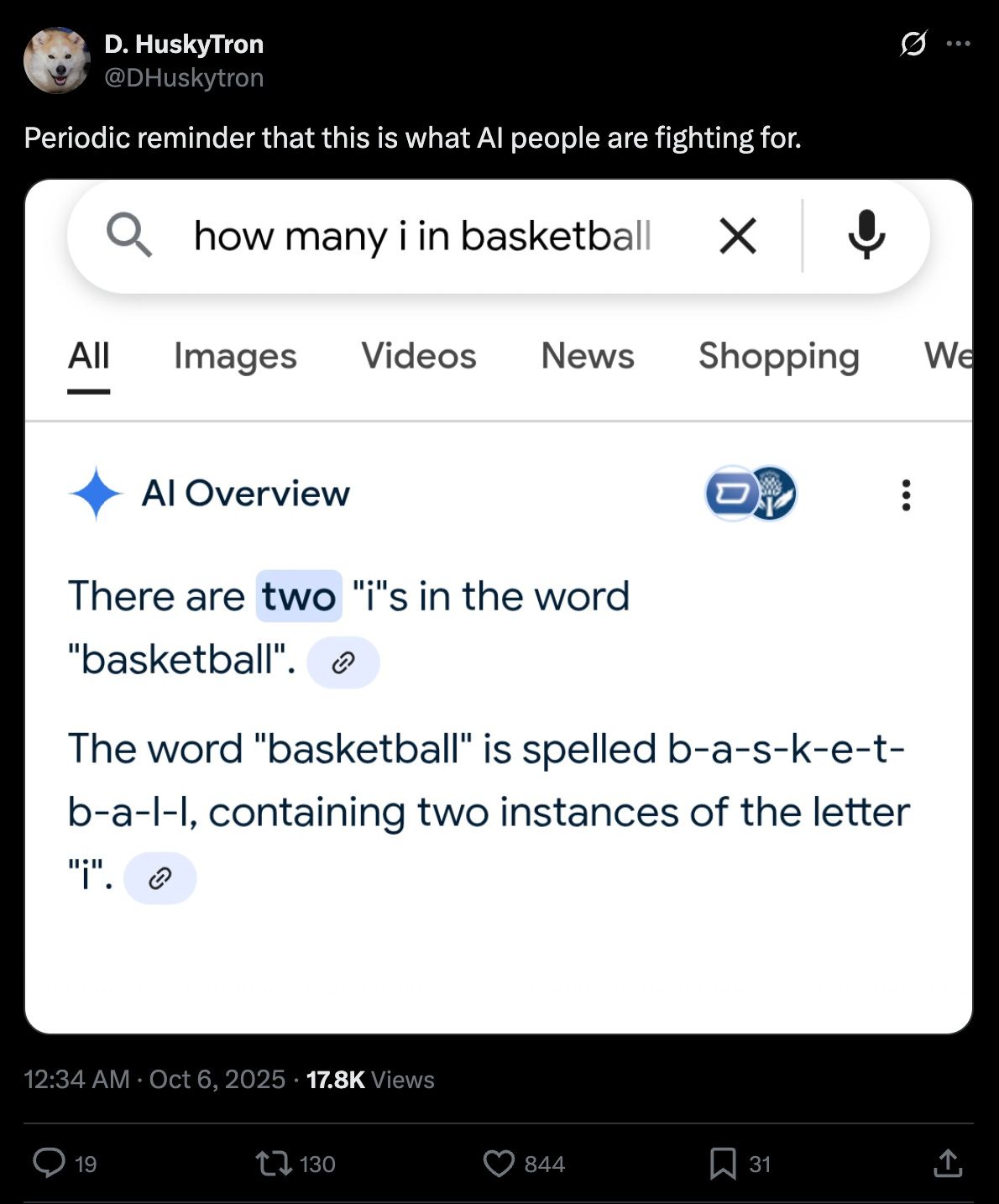

Turning from OpenAI to DeepMind, here’s a humorous gem from the other day:

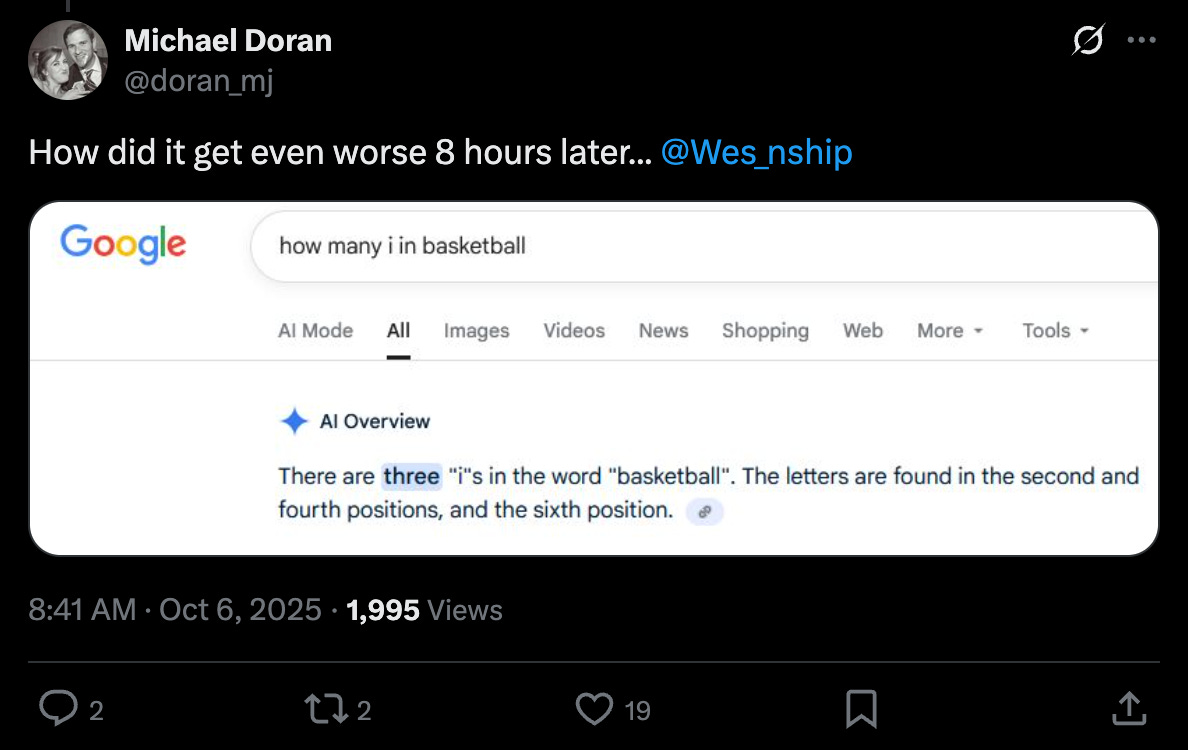

Eight hours later, someone asked Google’s AI Overview the same question and received an even more egregious response:

Here’s a fantastic video on the rise of AI slop and the danger of AI unreality. Highly recommended:

So, where do we go from here? Humanity is conducting a large number of high-stakes, large-scale experiments in real time, and we have no idea what the outcomes might be. We’re doing this with AI, with climate change, even with our sleep patterns, as contemporary humans appear to sleep less than past generations due in part to stress (though the evidence for this is a bit mixed).

Civilization is a giant experiment, and my guess is that AI is going to increase the probability of it failing catastrophically. As I write in a forthcoming review of Eliezer Yudkowsky’s new book — which I’ll share once it’s out in Truthdig — it’s easier to imagine an end to the world than an end to the AGI race, given how much money is circulating in Big Tech.

On that note, I’m wishing everyone a wonderful weekend! As always:

Thanks so much for reading and I’ll see you on the other side!

When you started this with the remark that you are afraid that the short AI videos might give you brain damage I thought you were exaggerating but I see where you are coming from.

Every day I feel more and more like Wesley Crusher in the ST:TNG episode “The Game.”