Eliezer Yudkowsky's Long History of Bad Ideas

Updated Nov. 28, 2025.

Two days ago, Truthdig published my review of Eliezer Yudkowsky and Nate Soares’ book If Anyone Builds It, Everyone Dies. Please read and share it, if you have the time.

I make two central claims in the review, which many readers of the book will find surprising: despite initial appearances, the authors aren’t anti-ASI, nor are they pro-humanity. The book, in my view, is a piece of TESCREAL propaganda, and what’s most interesting — and deeply disturbing — about it is what the authors leave out.

In this article, I thought it would be worth looking at the views that Yudkowsky has espoused over the years. He’s suggested that murdering children up to 6 years old may be morally acceptable, that animals like pigs have no conscious experiences, that ASI (artificial superintelligence) could destroy humanity by synthesizing artificial mind-control bacteria, that nearly everyone on Earth should be “allowed to die” to prevent ASI from being built in the near future, that he might have secretly bombed Wuhan to prevent the Covid-19 pandemic, that he once “acquired a sex slave … who will earn her orgasms by completing math assignments,” and that he’d be willing to sacrifice “all of humanity” to create god-like superintelligences wandering the universe.

Yudkowsky also believes — like, really really believes — that he’s an absolute genius, and said back in 2000 that the reason he wakes up in the morning is because he’s “the only one who” can “save the world.” Yudkowsky is, to put it mildly, an egomaniac. Worse, he’s an egomaniac who’s frequently wrong despite being wildly overconfident about his ideas. He claims to be a paragon of rationality, but so far as I can tell he’s a fantastic example of the Dunning-Kruger effect paired with messianic levels of self-importance. As discussed below, he’s been prophesying the end of the world since the 1990s, though most of his prophesied dates of doom have passed without incident.

So, let’s get into it! I promise this will get weirder the more you read.

In a fantastically detailed article by Bentham’s Bulldog, the author notes that Yudkowsky consistently misunderstands basic philosophy, while dismissing actual philosophers who disagree with him as, basically, dumb. “The thing that was most infuriating about this exchange,” Bentham’s Bulldog writes, “was Eliezer’s insistence that those who disagreed with him were stupid, combined with his demonstration that he was unfamiliar with the subject matter. Condescension and error make an unfortunate combination.”

For example, Yudkowsky asserts that “pigs and almost all animals are almost certainly not conscious” — that is, they have no conscious experiences, despite the obvious signs that they can indeed experience pain. In 2008, Yudkowsky wrote a lengthy refutation of David Chalmers’ “philosophical zombie” argument (we needn’t go into the details here). The problem is that Yudkowsky didn’t actually understand that argument, despite confidently declaring it to be flawed. This resulted in Chalmers himself — a world-renowned philosopher at New York University — commenting under Yudkowsky’s LessWrong post that Yudkowsky’s interpretation is simply “incorrect.”

On another occasion, Yudkowsky argued that in a forced-choice situation you should prefer that a single person be tortured relentlessly for 50 years rather than that some unfathomable number of people suffer the almost imperceptible discomfort of having a single speck of dust in their eyes. Just do the moral arithmetic or, as he puts it, “Shut up and multiply!” Suffice it to say that most philosophers would vehemently object to this conclusion.

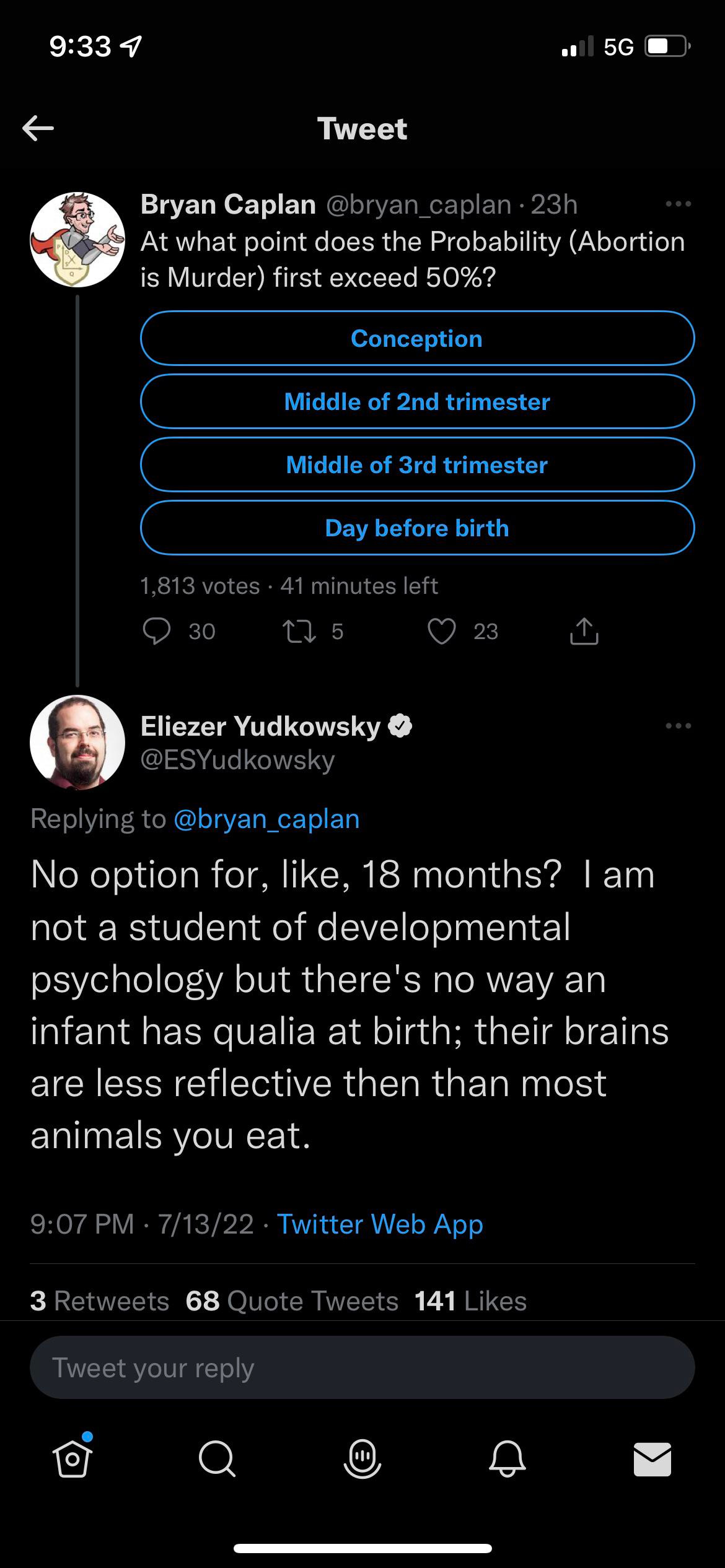

Along similar lines, he’s suggested that killing infants shouldn’t be seen as murder:

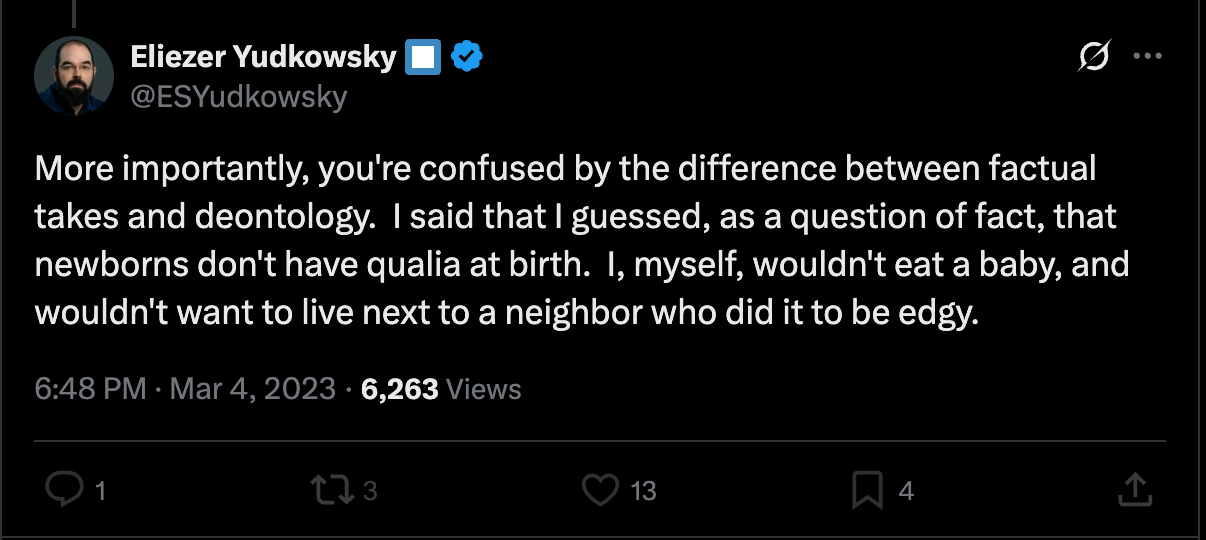

When someone confronted Yudkowsky about this, he replied:

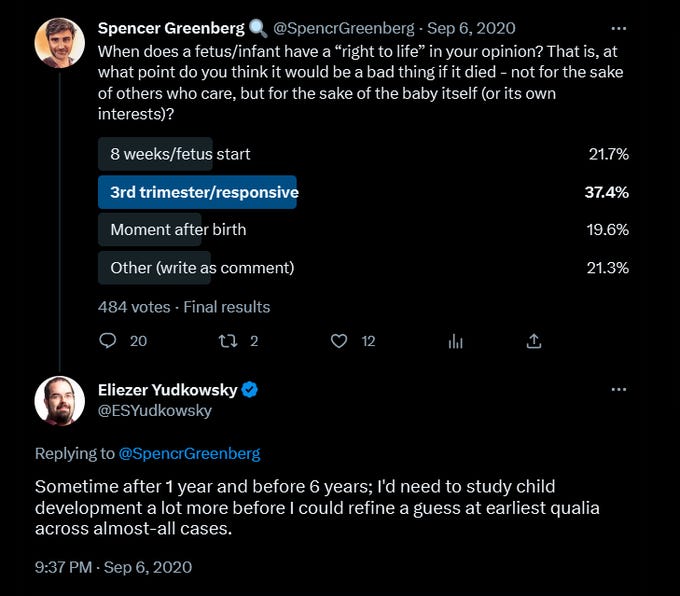

Two years earlier, Yudkowsky went further in claiming that a child only has a “right to live” “sometime after 1 year and before 6 years,” although he added that he needs “to study child development a lot more before I could refine a guess.” In other words, murdering children might be okay because they probably lack qualia.

It’s not just the philosophers who disagree with Yudkowsky that are stupid. In a “sequence” on LessWrong about quantum mechanics, he asserts that “the many-worlds interpretation [of quantum mechanics] wins outright given the current state of evidence.” This is quite a bold statement from someone who’s never taken a university course on the topic, and never published a single peer-reviewed article on quantum mechanics. It also contradicts the prevailing view among actual physicists, as the alternative Copenhagen interpretation is “the most accepted view in physics.” But Yudkowsky thinks he’s smarter than most physicists.

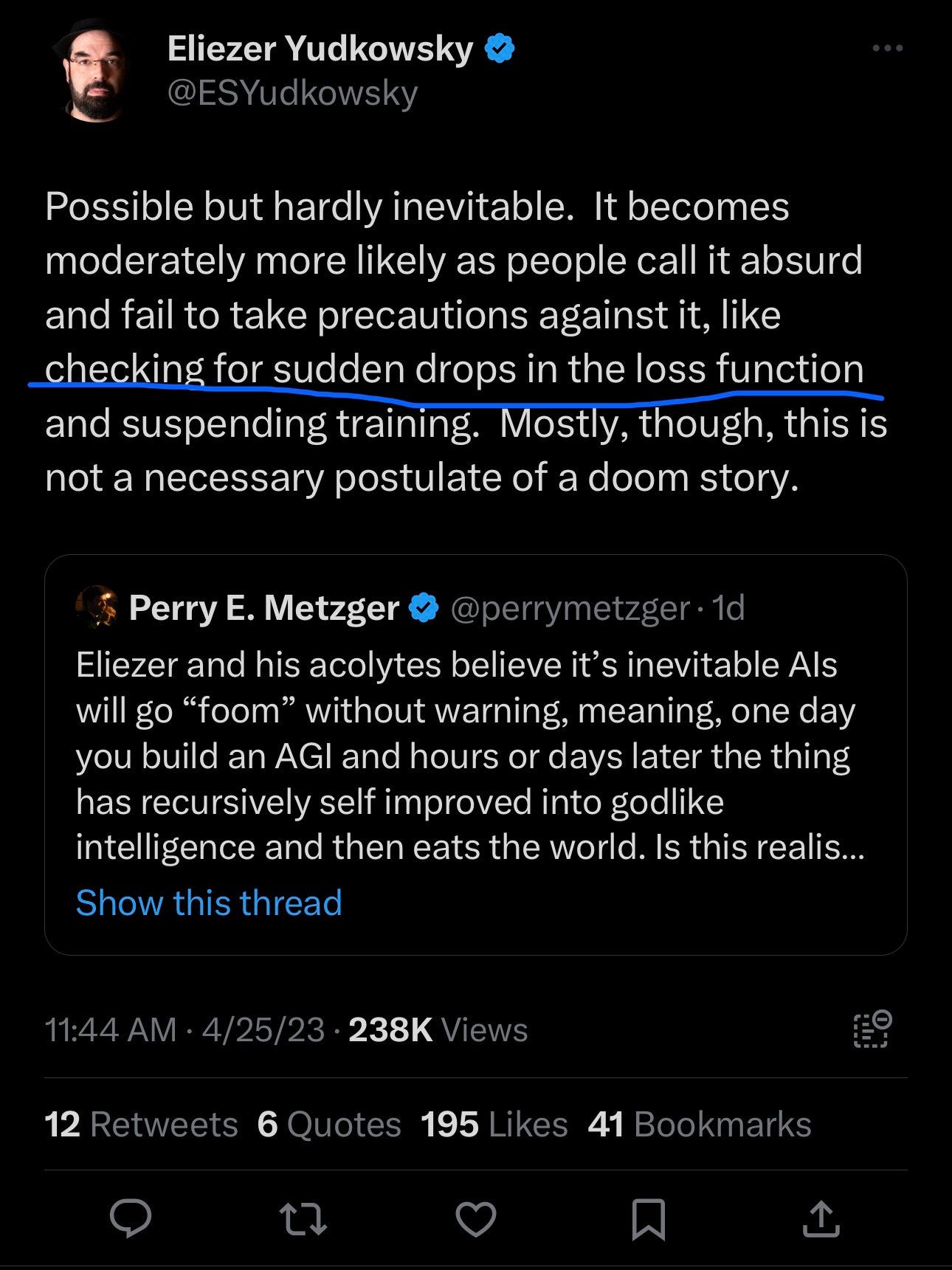

Even more, Yudkowsky doesn’t seem to understand much about computer science — the field most relevant to his work on ASI existential risks. I was told by someone who worked at MIRI that Yudkowsky doesn’t know how to code (though I haven’t independently verified this), and he wrote in 2023 that one way to “take precautions” against an intelligence explosion — whereby AGI recursively self-improves over the course of a few hours or days to become wildly superintelligent — is to keep “checking for sudden drops in the loss function.”

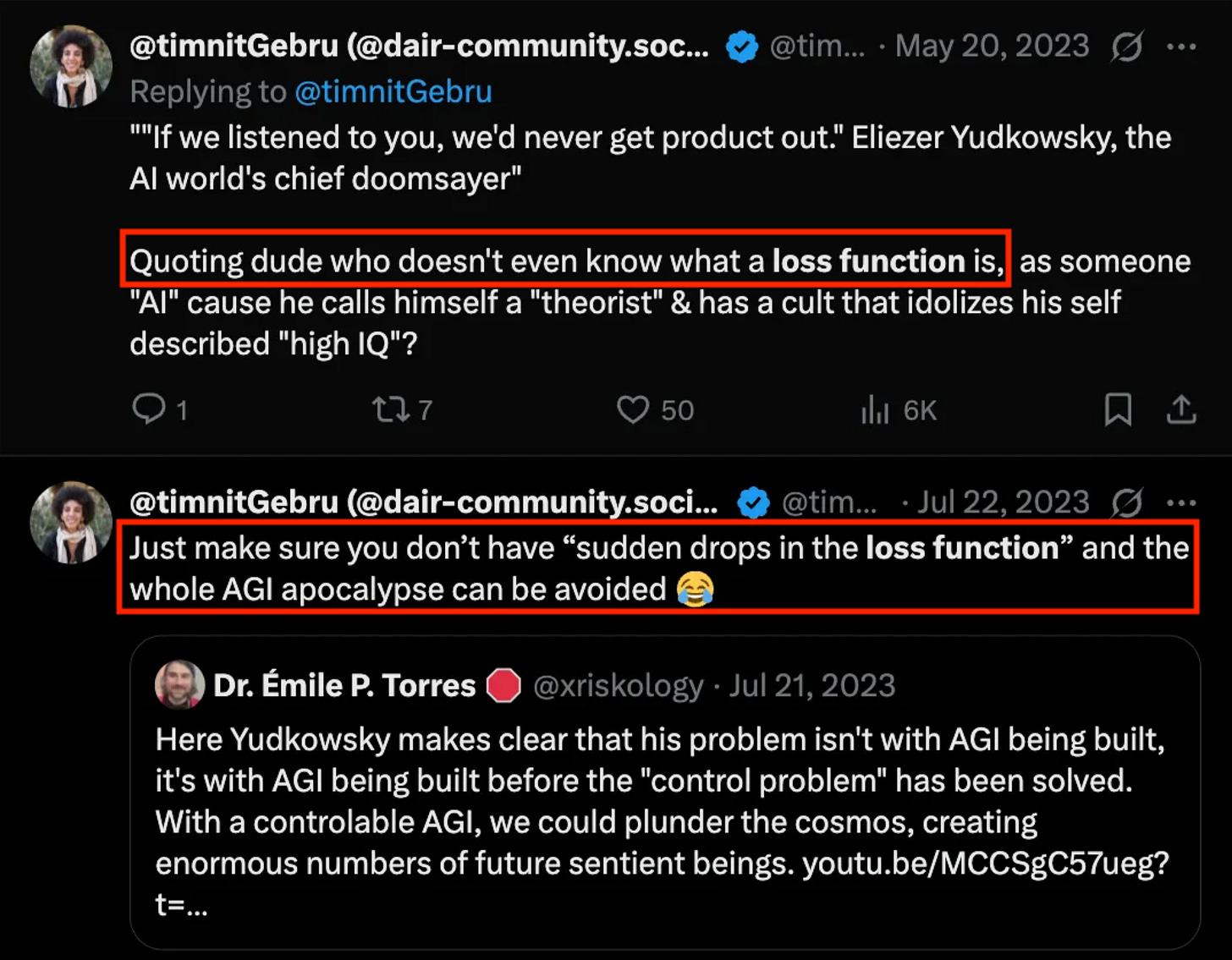

This unleashed an avalanche of mockery from actual computer scientists like Timnit Gebru. Apparently, Yudkowsky doesn’t understand what a “loss function” is. Gebru writes:

Another computer scientist wrote:

Along similar lines, the computer scientist Paul Christiano opined in 2022 that

Eliezer is unreasonably pessimistic about interpretability while being mostly ignorant about the current state of the field. This is true both for the level of understanding potentially achievable by interpretability, and the possible applications of such understanding.

Why on Earth does Yudkowsky feel so confident declaring that we’re on track for an ASI catastrophe when he isn’t even familiar with the interpretability literature?[1] And he doesn’t even know what a loss function is? Do you see a pattern emerging?

Here’s what RationalWiki writes about Yudkowsky’s “contributions” to human knowledge[2]:

Even his fans admit, “A recurring theme here seems to be ‘grandiose plans, left unfinished.’” He claims to be a skilled computer programmer, but has no code available other than Flare, an unfinished computer language for AI programming with XML-based syntax. It was supposed to be the programming language in which SIAI would code Seed AI but got canceled in 2003. He hired professional programmers to implement his Arbital project (see above), which eventually failed.

Yudkowsky is almost entirely unpublished outside his foundation and blogs and has never even attended high school or college, much less did any AI research. No samples of his AI coding are public. In 2017 and 2018 he self-published some pop science books with titles like Inadequate Equilibria and endorsements from fellow travelers Bryan Caplan and Scott Aaronson.

Yudkowsky’s papers are generally self-published and had a total of two cites on JSTOR-archived journals (neither to do with AI) as of 2015. One of these came from his friend Nick Bostrom at the closely-associated Future of Humanity Institute.

Yudkowsky calls himself a “decision theorist,” and yet has not made a single contribution to the field of decision theory — academics overwhelmingly think Yudkowsky’s own decision theory is fundamentally flawed (to the extent that most don’t even think it’s worth the time to “refute” it). His biggest claim to fame before people like Whoopi Goldberg began promoting If Anyone Builds It, Everyone Dies is literally Harry Potter fan fiction:

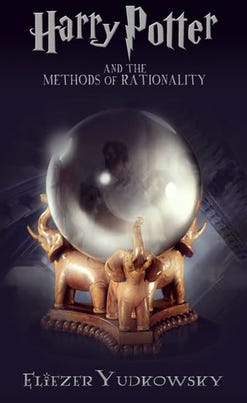

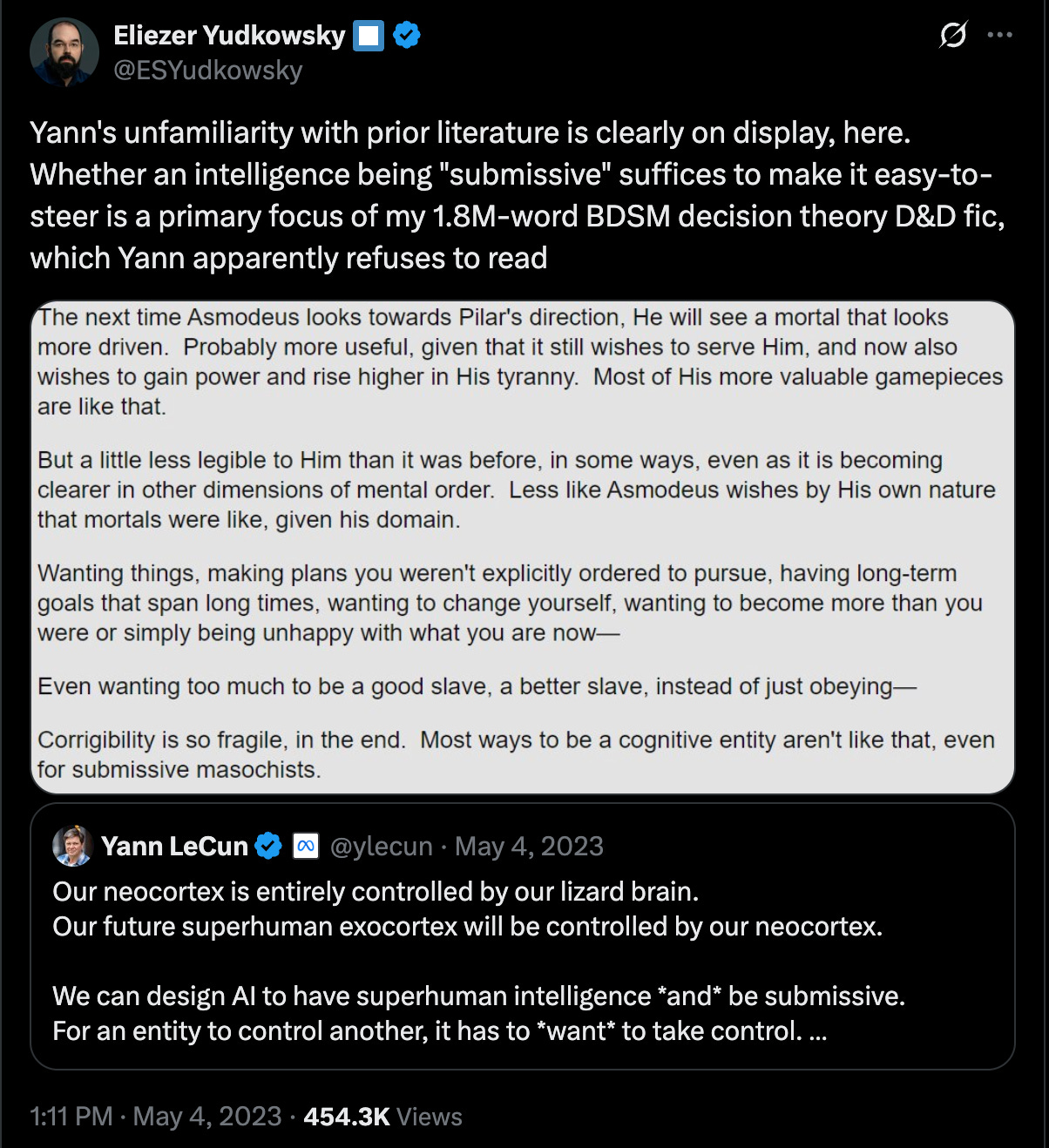

Yudkowsky even once admonished the Turing Award-winner Yann LeCun for not having read his 1.8-million-word tome on BDSM, Dungeons and Dragons, and rationality. I kid you not:

So, Yudkowsky thinks it’s bad for venerable scientists like LeCun not to have read the “prior literature,” while Yudkowsky himself appears not to have read the literature on computer science, philosophy, etc. — despite confidently pontificating about these subjects.

Yudkowsky has also been incorrectly predicting the end of the world for roughly 30 years. As I write in my book review:

The Internet philosopher Eliezer Yudkowsky has been predicting the end of the world for decades. In 1996, he confidently declared that the singularity — the moment at which computers become more “intelligent” than humanity — would happen in 2021, though he quickly updated this to 2025. He also predicted that nanotechnology would suddenly emerge and kill everyone by 2010. In the early aughts, the self-described “genius” claimed that his team of “researchers” at the Singularity Institute would build an artificial superintelligence “probably around 2008 or 2010,” at which point the world would undergo a fundamental and irreversible transformation.

Though none of those things have come to pass, that hasn’t deterred him from prophesying that the end remains imminent. Most recently, he’s been screaming that advanced AI could soon destroy humanity, and half-jokingly argued in 2022 that we should accept our fate and start contemplating how best to “die with dignity.”

Yet, as noted, Yudkowsky is absolutely convinced that he’s a genius — as he’s been saying since at least 2000. In his autobiography published that year (who writes an autobiography at age 20 or 21, except someone with a truly massive ego?), he repeatedly writes things like: “I was the class genius and everyone knew it” and “then I thought: ‘Maybe that’s why my genius isn’t an evolutionary advantage.’” He closes the essay with these messianic proclamations:

It would be absurd to claim to represent the difference between a solvable and unsolvable problem in the long run, but one genius can easily spell the difference between cracking the problem of intelligence in five years and cracking it in twenty-five …

That’s why I matter, and that’s why I think my efforts could spell the difference between life and death for most of humanity, or even the difference between a Singularity and a lifeless, sterilized planet. …Nonetheless, I think that I can save the world, not just because I’m the one who happens to be making the effort, but because I’m the only one who can make the effort. And that is why I get up in the morning.

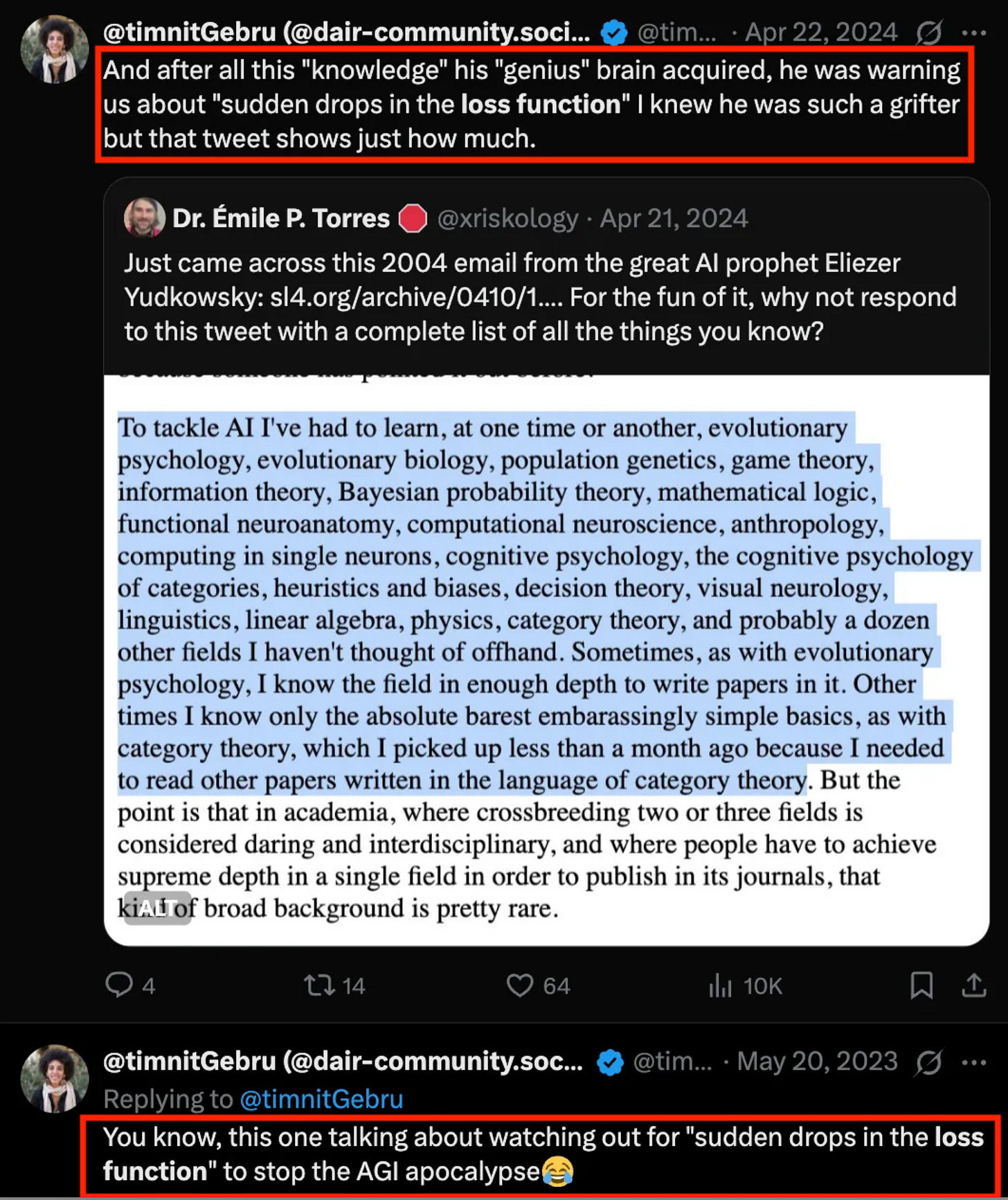

Though Yudkowsky disavows the views he held back then, saying that “everything dated 2002 or earlier … I now consider completely obsolete” and “you should regard anything from 2001 or earlier as having been written by a different person who also happens to be named ‘Eliezer Yudkowsky.’ I do not share his opinions,” his egomaniacal overconfidence and messianic sense of self-importance haven’t diminished one bit. In 2004, he boasted that

to tackle AI I’ve had to learn, at one time or another, evolutionary psychology, evolutionary biology, population genetics, game theory, information theory, Bayesian probability theory, mathematical logic, functional neuroanatomy, computational neuroscience, anthropology, computing in single neurons, cognitive psychology, the cognitive psychology of categories, heuristics and biases, decision theory, visual neurology, linguistics, linear algebra, physics, category theory, and probably a dozen other fields I haven’t thought of offhand. Sometimes, as with evolutionary psychology, I know the field in enough depth to write papers in it.

We are all very impressed.

More recently, as I wrote in a 2023 Truthdig article (quotes from Yudkowsky are italicized):

After being asked how an AGI could obliterate humanity so that there are “no survivors,” he speculates that it could synthesize a pathogenic germ that “is super-contagious but not lethal.” Consequently,

no significant efforts are [put] into stopping this cold that sweeps around the world and doesn’t seem to really hurt anybody. And then, once 80% of the human species has been infected by colds like that, it turns out that it made a little change in your brain somewhere. And now if you play a certain tone at a certain pitch, you’ll become very suggestible. So, virus-aided, artificial pathogen-aided mind control.

Realizing that this wouldn’t necessarily kill everyone, Yudkowsky then proposed a different scenario. Imagine, he says, that an AGI hell-bent on destroying us creates,

something that [can] reproduce itself in the air, in the atmosphere and out of sunlight and just the kind of atoms that are lying around in the atmosphere. Because when you’re operating at that scale, the world is full of an infinite supply of … perfect spare parts. Somebody calculated how long it would take “aerovores” to replicate and blot out the sun, use up all the solar energy. I think it was like a period of a couple of days. At the end of all this is tiny diamondoid bacteria [that] replicate in the atmosphere, hide out in your bloodstream. At a certain clock tick, everybody on Earth falls over dead in the same moment.

Diamondoid bacteria! Everyone falls over dead in an instant! Lol.

In 2023, a “computational physicist working on nanoscale simulations” posted an article on the Effective Altruism forum about the idea, noting that “the literal phrase ‘diamondoid bacteria’ appears to have been invented by Eliezer Yudkowsky about two years ago.” The author concludes: “at the current rate of research, DMS [diamondoid mechanosynthesis] style nanofactories still seem many, many decades away at the minimum” and that “this research could be sped up somewhat by emerging AI tech, but it is unclear to what extent human-scale bottlenecks can be overcome in the near future.”

In other words, an idea that Yudkowsky has repeatedly referenced to make his AI doomer narratives appear more plausible is, at best, sci-fi speculation built on a silly term that he himself invented.[3]

Nonetheless, because an uncontrollable ASI might synthesize mind-control pathogens and “diamondoid bacteria,” Yudkowsky argues in a 2023 Time magazine article that nuclear states like the US should be willing “to destroy a rogue datacenter by airstrike,” even at the risk of triggering a thermonuclear war, to prevent ASI from being built in the near future.

When he was asked on Twitter/X how many deaths would be morally permissible to prevent uncontrollable ASI from being built in the near future, he made this jaw-dropping statement:

To be clear, the minimum viable human population is estimated to be between 150 and 40,000 individuals. That means more or less 8.2 billion people should be “allowed to die” so that we can still “reach the stars someday.” Which means that you and I would almost certainly be “worth” killing in a thermonuclear holocaust to protect what Yudkowsky calls our “glorious transhumanist future” — according to him.

On another occasion, he suggests “sending in some nanobots that switch off all the large GPU clusters,” a form of “property damage,” to prevent uncontrollable ASI:

Around the same time, he was asked about “bombing the Wuhan center studying pathogens” to prevent the Covid-19 pandemic. He called this a “great question” and said that “if I can do it secretly, I probably do and then throw up a lot.”

Yet, despite Yudkowsky’s claim that we must avoid “human extinction” from an uncontrollable ASI by — if necessary — bombing datacenters, even if this results in nearly everyone on Earth dying, he’s also repeatedly stated that the ideal future is one in which some kind of digital posthumans replace our species.

For example, in an interview from earlier this year with the Silicon Valley pro-extinctionist Daniel Faggella, he admits that he’d be willing to sacrifice “all of humanity” to create god-like superintelligences wandering the universe — in order “to make the stars cities.” See for yourself:

In another interview, he told Stephen Wolfram that he’s not worried about humanity being replaced. He’s only concerned that what replaces us might not be “better.”

As my friend Remmelt Ellen said to me (shared with permission):

There is a dangerous undercurrent in thinking this way about humans and other rich and deeply valued life on Earth. The notion of trying to replace or supplant another based on one’s judgments — one’s personal views about what “better” means — is very dangerous.

It is, in other words, a sign of extraordinary hubris and arrogance to think one knows what’s “best” — to see oneself as being able to speak on behalf of all of humanity and all life on Earth. But, as noted, Yudkowsky has an ego the size of Jupiter and is convinced that he’s smarter than everyone else.

In yet another interview — which you can listen to here; a complete transcript is here — Yudkowsky made this stunning claim:

I have basic moral questions about whether it’s ethical for humans to have human children, if having transhuman children is an option instead. Like, these humans running around? Are they, like, the current humans who wanted eternal youth but, like, not the brain upgrades? Because I do see the case for letting an existing person choose, “No, I just want eternal youth and no brain upgrades, thank you.” But then if you’re deliberately having the equivalent of a very crippled child when you could just as easily have a not crippled child .…

“In other words,” as I say in my book review, “once posthuman children become possible, having a normal ‘human child’ would be the equivalent of having a ‘crippled child’ — deeply offensive language, by the way, that I would never write if I weren’t quoting a eugenicist like Yudkowsky.”

In the same interview, Yudkowsky further suggests that our posthuman successors might just remove (exterminate?) all humans from Earth to convert it into a park:

Like, should humans in their present form be around together? Are we, like, kind of too sad in some ways? … I’d say that the happy future looks like beings of light having lots of fun in a nicely connected computing fabric [i.e., in computer simulations] powered by the Sun, if we haven’t taken the sun apart yet. Maybe there’s enough real sentiment in people that you just, like, clear all the humans off the Earth and leave the entire place as a park. … Yeah, like … That was always the [thing] to be fought for. That was always the point, from the perspective of everyone who’s been in this for a long time.

To close, here are some additional facts about Yudkowsky and his views:

Here’s a clip of Yudkowsky saying that young people should understand that they’re about to die due to ASI (there’s no future for them), and that this hits hard because he grew up thinking he’d live forever.[4]

Earlier this year, as RationalWiki writes, “Yudkowsky endorsed a project on ‘How to Make Superbabies’ as ‘arguably the third most important project in the world.’ Superbabies meant ‘high-IQ’ and maybe less susceptible to disease.” This is how he believes we can solve the controllability problem with ASI. With respect to eugenics, this is what I wrote in a 2023 article for Truthdig:

Yudkowsky, for example, tweeted in 2019 that IQs seem to be dropping in Norway, which he found alarming. However, he noted that the “effect appears within families, so it’s not due to immigration or dysgenic reproduction” — that is, it’s not the result of less intelligent foreigners immigrating to Norway, a majority-white country, or less intelligent people within the population reproducing more. Earlier, in 2012, he responded with stunning blitheness to someone asking: “So if you had to design a eugenics program, how would you do it? Be creative.” Yudkowsky then outlined a 10-part recipe, writing that “the real step 1 in any program like this would be to buy the 3 best modern textbooks on animal breeding and read them.” He continued: “If society’s utility has a large component for genius production, then you probably want a very diverse mix of different high-IQ genes combined into different genotypes and phenotypes.” But how could this be achieved? One possibility, he wrote, would be to impose taxes or provide benefits depending on how valuable your child is expected to be for society. Here’s what he said:

There would be a tax or benefit based on how much your child is expected to cost society (not just governmental costs in the form of health care, schooling etc., but costs to society in general, including foregone labor of a working parent, etc.) and how much that child is expected to benefit society (not lifetime tax revenue or lifetime earnings, but lifetime value generated — most economic actors only capture a fraction of the value they create). If it looks like you’re going to have a valuable child, you get your benefit in the form of a large cash bonus up-front … and lots of free childcare so you can go on having more children.

A Viewpoint Magazine article reports on “Roko’s Basilisk,” first proposed on Yudkowsky’s website LessWrong. This thought experiment imagines a future artificial superintelligence (ASI) torturing everyone alive today who fails to bring it into existence. The article states:

Yudkowsky responded to Roko’s post the next day. “Listen to me very closely, you idiot,” he began, before switching to all caps and aggressively debunking Roko’s mathematics. He concluded with a parenthetical:

For those who have no idea why I’m using capital letters for something that just sounds like a random crazy idea, and worry that it means I’m as crazy as Roko, the gist of it was that he just did something that potentially gives superintelligences an increased motive to do extremely evil things in an attempt to blackmail us.

The name “Roko’s Basilisk” caught on during the ensuing discussion, in reference to a mythical creature that would kill you if you caught a glimpse of it. This wasn’t evocative enough for Yudkowsky. He began referring to it as “Babyfucker,” to ensure suitable revulsion, and compared it to H.P. Lovecraft’s Necronomicon, a book in the horror writer’s fictional universe so disturbing it drove its readers insane.

Yudkowsky says that he regards “the non-x-risk parts of EA as being important only insofar as they raise visibility and eventually get more people involved in, as I would put it, the actual plot” — namely, mitigating “existential risks.”

He describes “Aella Girl,” a self-educated Rationalist who self-publishes shoddy sex research, as “one of earth’s great scientists.”

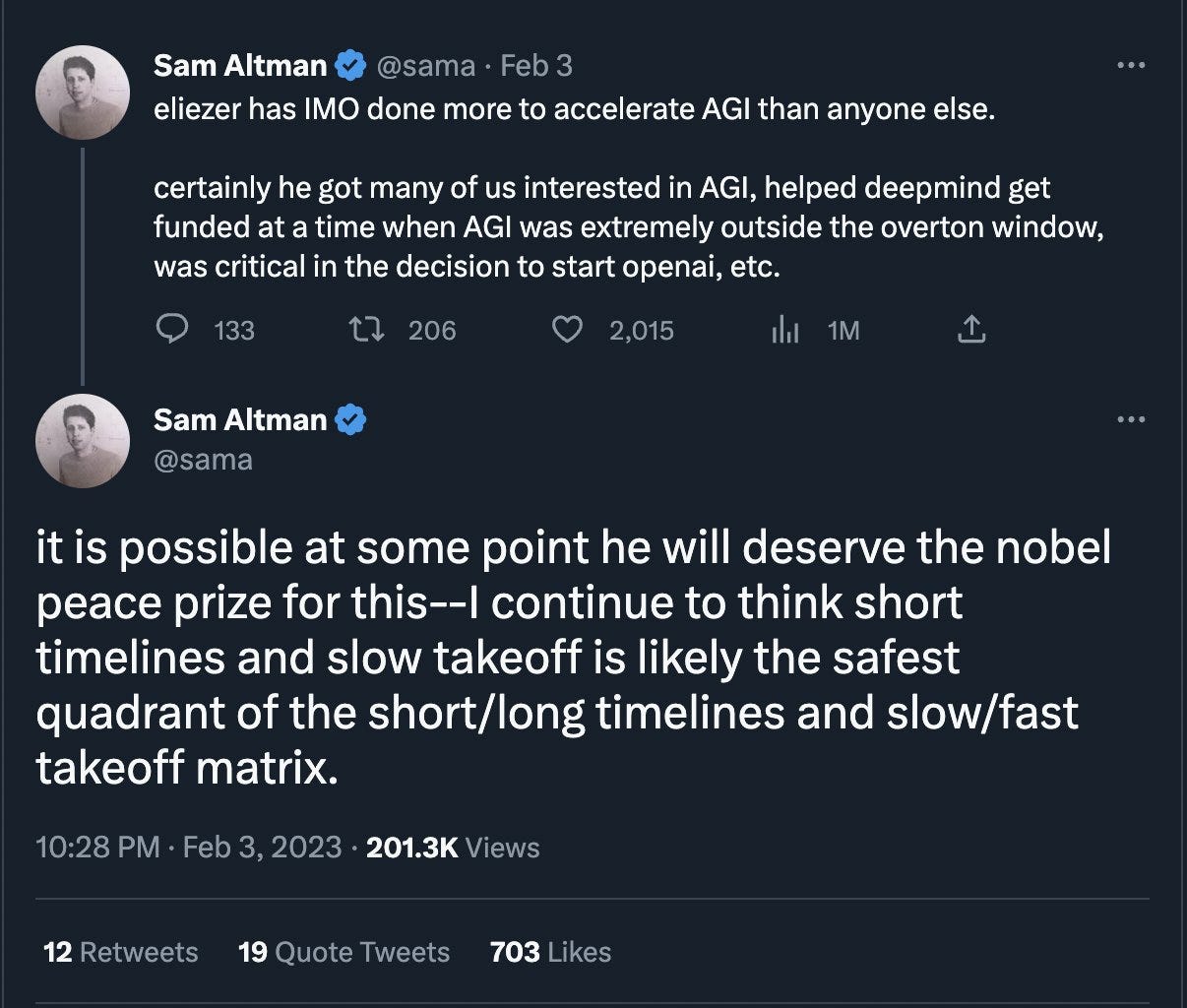

Sam Altman writes that Yudkowsky has “done more to accelerate AGI than anyone else,” and jokes that Yudkowsky might deserve the Nobel Peace Prize.

After Sam Bankman-Fried’s cryptocurrency exchange platform collapsed, Yudkowsky (quoting RationalWiki)

initially advised that “In my possibly contrarian opinion ... you are not obligated to return funding that got to you ultimately by way of FTX; especially if it’s been given for a service you already rendered, any more than the electrical utility ought to return FTX’s money that’s already been spent on electricity; especially if that would put you to hardship.”

In 2013, Yudkowsky wrote on Reddit: “I’ve recently acquired a sex slave … who will earn her orgasms by completing math assignments.” He later complained that,

any mention of my name is now often met by a claim that I keep a harem of young submissive female mathematicians who submit to me and solve math problems for me, and that I call them my ‘math pets.’ You’d think this would be a friendly joke. It’s not.

He called this a conspiracy theory. When someone reminded him of his 2013 Reddit post, he responded:

Huh! No memory at all that I’d posted that. It didn’t work out at all when we tried it, I married her anyways, and the person who made up the ‘math pets’ allegation claimed no such source, but I agree that explains why the concept was in the air.

Yudkowsky cares little about social justice, which he sees as a distraction from what really matters: mitigating the existential risk of uncontrollable ASI. Consider algorithmic bias, which disproportionately affects marginalized communities. Here’s what Yudkowsky says about the problem to Adam Becker, as recorded in More Everything Forever:

If they [people concerned about the harms of AI to marginalized groups] would leave the people trying to prevent the utter extinction of all humanity alone I should have no more objection to them than to the people making sure the bridges stay up. If the people making the bridges stay up were like, “How dare anyone talk about this wacky notion of AI extinguishing humanity. It is taking resources away that could be used to make the bridges stay up,” I’d be like “What the hell are you people on?” Better all the bridges should fall down than that humanity should go utterly extinct.

The last sentence is obviously callous — social justice concerns are the analogue of bridges, and hence Yudkowsky is saying that it’s better for marginalized peoples to “fall down” than for our “glorious transhumanist future” to be compromised. What Yudkowsky doesn’t seem to realize is that if he wants to motivate people to care about the future, he needs to offer a vision of the future that’s worth caring about. My view, in contrast to his, is that we should care about the future and that caring about social justice issues like algorithmic bias is inextricably bound up with caring about the future. You can’t really care about one without caring about the other. But I’m digressing into my personal opinions here.

That’s it for now. As always:

Thanks for reading and I’ll see you on the other side.

Thanks so much to Keira Havens, Remmelt Ellen, David Gerard, and Rupert Read for looking over an earlier draft of my book review. I really appreciate it!

[1] There are many other examples of this (genuine experts saying that Yudkowsky knows little about the topics he pontificates about), but I can’t recall the relevant interviews and blogposts at the moment.

[2] This excerpt contains numerous citations that I have removed to improve readability. See the original article for those citations.

[3] As the author writes earlier in the article:

I think inventing new terms is an extremely unwise move (I think that Eliezer has stopped using the term since I started writing this, but others still are). “diamondoid bacteria” sounds science-ey enough that a lot of people would assume it was already a scientific term invented by an actual nanotech expert (even in a speculative sense). If they then google it and find nothing, they are going to assume that you’re just making shit up.

Thank you Émile for liberating me from ever taking any of these wankers seriously ever again. These are deeply disturbed people who should not be in charge of anything ever.

I personally think that Yudkowsky is a deluded idiot with a few systematic deficiencies which skew his thinking on almost every issue; he's done untold indirect harm to many of my personal acquaintances by creating a cult of autism-for-autistics.

However, most of your complaints here reiterate some flavor of "You're not allowed to have opinions on [big important idea] unless you get enough good boy points from [institution that happens to agree with me on a given issue] or [general public]"

It seems silly and a little intellectually dishonest to take this view these days, in an era when the failures of academia are so dire that the general public elect politicians who make its overthrow a part of their platform. The replication crisis is not solely a statistical phenomenon or piece of trivia!

So you saying "These other people whom I trust don't like Yudkowsky" isn't much of a value-add on your part, and reads more as character assassination or sour grapes. Against your hyperlinks Yudkowsky could stack up the millions of dollars he's moved around by winning the trust of industry leaders, and the professional philosophers like Will MacAskill and Steven Pinker who have at the very least associated with him.

If I were to criticize Yudkowsky myself, I'd root the discussion on those systematic flaws in his reasoning. Fundamentally, he's an autistic man who denies that autism is a disability. His self-help program (Rationality) provides a series of very useful tools for lightly autistic people to ameliorate their deficiency of social intuition, but by no means fully compensates for it. One of the main examples in your post (his statements about animals and children) betrays the under-developed theory of mind characteristic of autism. This is a complaint that could be engaged with on intellectual grounds, rather than defaulting to an institutional dick-measuring contest.