Elon Musk: Within Three Weeks, AGI Will Arrive and Humans Will Colonize Mars! Also: Silicon Valley Wants to Create Designer Babies

(2,200 words.)

For those of you who’ve been following the Stop AI debacle, here’s an update: Sam still hasn’t been found. He disappeared on, I believe, November 22 after physically assaulting a fellow member of the group. It’s a very sad situation. I’ll probably write an article about it for Truthdig in the coming days. If you’d like to catch up on the story, I’d recommend a recent article in The Atlantic, which quotes me. Incidentally, I’m also quoted in this new Coda article, titled “The Future According to Silicon Valley’s Prophets.”

Also, my friend Adam Becker, author of More Everything Forever, recently appeared on StarTalk with Neil deGrasse Tyson.

1. The Singularity and Mars Colonization: Three Weeks Until Everything Changes!

In case you didn’t know, we live in an incredibly exciting moment in history. I’m not talking about the past few years, but the next three weeks, which will witness two of the most extraordinary events since our species emerged in the African savanna 300,000 years ago. That is, if you believe Elon Musk.

Because, as my friend Ewan Morrison points out on Twitter/X, Musk claimed in May of last year that artificial general intelligence (AGI) would arrive by the end of this year. That leaves exactly 23 days until AGI bursts onto the scene, no doubt recursively self-improving to yield superintelligence by mid-January. Wild stuff!!

My friend Michelle also pointed out in a DM that, according to a 2016 article in CNET, Musk says that “we’ll have people on Mars by 2025.” Here I have to admit that I’m a little perplexed, because it takes about 9 months to get to Mars. The only explanation is that Musk secretly shipped some folks to the Red Planet, fourth rock from the sun, earlier this year to declare the surprise founding of Muskopolis, Mars, before the New Year! (Lolz.)

Or, perhaps, we live in a world where professional hypesters and bullshitters flood the media with unhinged prognostications that, for some reason, journalists and a big chunk of the public take seriously. Even more, as Gary Marcus writes, “the machine learning community, which has unwelcomed me since I started vocally critiquing the scaling approach, has finally started to come around” to the view that he and others have been defending for years: that large language models (LLMs) aren’t going to get us to AGI, ever. They simply don’t have the right architecture to achieve “human-level intelligence.” No matter how much data they’re trained on, how many parameters they have, and how much compute is available, LLMs won’t yield “God-like AI.”

This contrasts with what AI evangelists have been promising. Altman even once likened “scaling laws” (i.e., that scaling the three model features above will yield more “intelligent” systems) to laws of nature. As Marcus notes, “he bet the entire company [OpenAI] on this notion. He was wrong.”

Even Ilya Sutskever, cofounder of OpenAI, admitted this during a recent podcast interview, saying:

Up until 2020, from 2012 to 2020, it was the age of research. Now, from 2020 to 2025, it was the age of scaling — maybe plus or minus, let’s add error bars to those years — because people say, “This is amazing. You’ve got to scale more. Keep scaling.” The one word: scaling.

But now the scale is so big. Is the belief really, “Oh, it’s so big, but if you had 100x more, everything would be so different?” It would be different, for sure. But is the belief that if you just 100x the scale, everything would be transformed? I don’t think that’s true. So it’s back to the age of research again, just with big computers.

The pro-extinctionist Richard Sutton, who won the Turing Award last year (basically, the Nobel Prize for computer science), also says that LLMs aren’t the right vehicles to get us to the AGI destination, while an article in The Information (quoted by Marcus) reports that

A small but growing number of artificial intelligence developers at OpenAI, Google and other companies say they’re skeptical that today’s technical approaches to AI will achieve major breakthroughs in biology, medicine and other fields while also managing to avoid making silly mistakes.

That was the buzz last week among numerous researchers at the Neural Information Processing Systems conference, a key meetup for people in the field.

In other words, more and more people are waking up to the fact that the magic of LLMs has been wildly oversold by evangelists like Altman, which is precisely why there’s a massive AI bubble that will probably soon pop — catapulting the economy into a recession. As Karen Hao notes in her excellent book Empire of AI, people at OpenAI have openly mocked Marcus for his skepticism. But it turns out that he was right all along.

2. LLMs Are Destroying Higher Education (IMO)

Incidentally, I just finished co-teaching a 350-student course here at Case Western. The professor I taught with and I repeatedly told the students not to use AI to complete their homework assignments. It wasn’t an outright ban on all uses of AI, but we were pretty clear that students shouldn’t rely on it. I even gave an entire lecture on AI, which emphasized the “reliable unreliability” of these systems.

On the last day of class, I said to the students: “As you know, AI ethics is a primary focus of my research. So, purely out of curiosity” (wording this carefully!) “how many of you know of someone in this class who used AI to complete at least one assignment?”

To my shock, about 1/3 of the class raised their hands. I then asked: “How many of you know of someone who used AI to assist in writing at least one assignment?” About 3/4 of the class raised their hands. GAH!!! We pay TAs quite well to grade assignments, and apparently a good chunk of what they were grading was AI-written in part or in whole. In fact, AI-enabled cheating came up numerous times throughout the semester: some submissions from students looked AI-generated, but we weren’t able to prove it.

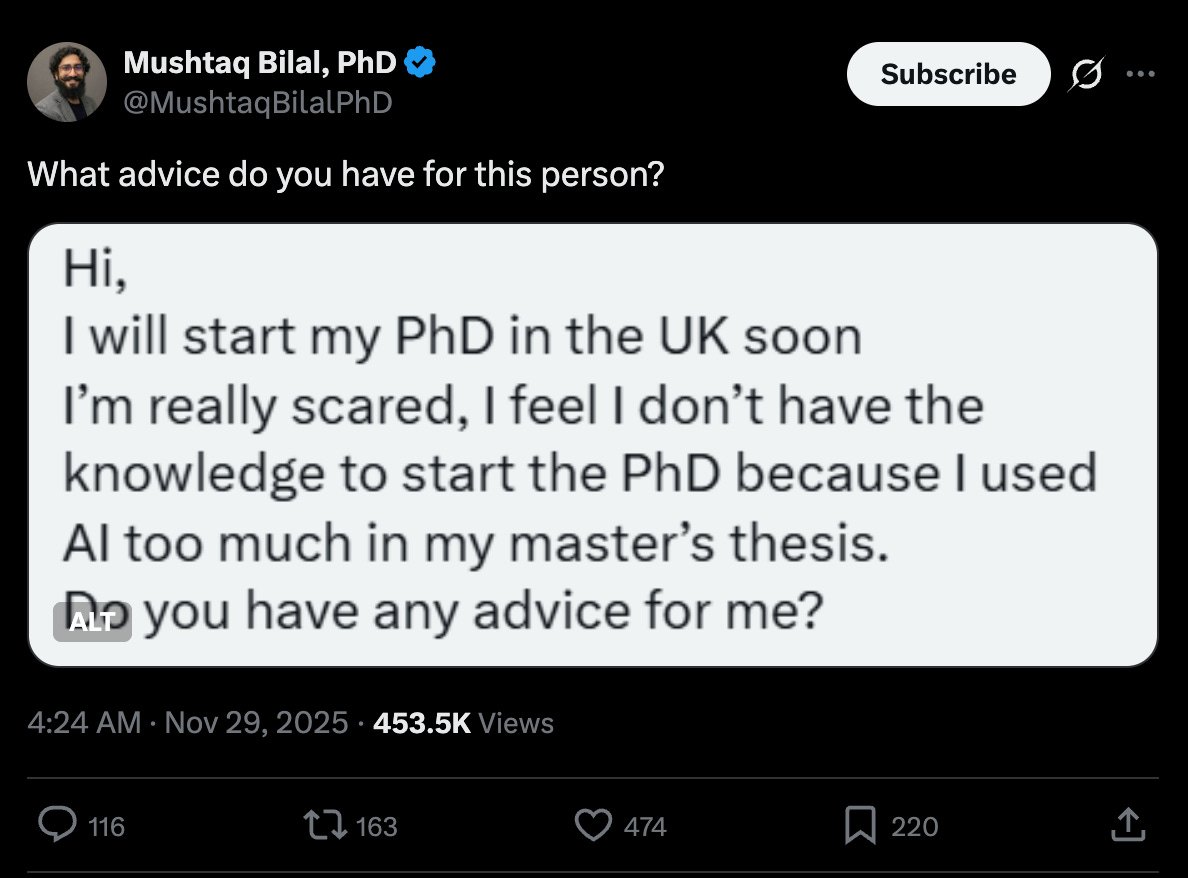

What a sad state of affairs! I am genuinely quite worried about the long-term consequences of students cognitively offloading critical thinking skills to deceptively dumb LLMs. If there’s one skill that students should leave their time at university with, it’s the ability to critically analyze ideas, claims, arguments, hypotheses, perspectives, etc. I’m reminded of this tweet from late last month:

I’m leaving academia in a month, at least temporarily but perhaps permanently, due to the terrible job market, which will become even bleaker once the AI bubble pops. I’m in a state of despair about ever landing an academic position (and I have never imagined myself outside of academia, except as a musician).

But the heartbreak of leaving this strange community full of wonderful weirdos is blunted by the fact that academia is slowly collapsing due to AI. Even if/when the AI bubble bursts, systems like ChatGPT are here to stay, and I have virtually nothing good to say about the societal consequences of these energy-intensive slopmachines. Every past era has been a strange time to be alive, but this era is especially bizarre. :-0

3. Silicon Valley Backs Genetically Modified Superhumans

In a previous article, I discussed the peculiar coexistence of biological pronatalists and (as I call them) replacement antinatalists in Silicon Valley. How does this make any sense, given that these views appear to be antithetical? My argument was that they constitute two different responses to an eschatological worldview that virtually every Valley dweller accepts: the future will be run and ruled by posthumanity, not our species.

Replacement antinatalists argue that it’s “fundamentally unethical” to have biological babies because the future will be digital rather than biological, and the transition from the biological to digital era is imminent. Hence, what’s the point of having biological kids if they’ll be obsolete before adulthood? Or, perhaps they might even be slaughtered by our artificial progeny, a possibility that folks like Michael Druggan endorse with a ghoulish hint of glee.

Silicon Valley pronatalists, in contrast, seem to think that having biological babies is desirable because (a) these children will probably have the opportunity to become posthumans themselves (rather than being outright replaced), thus surviving into the posthuman era, which would be good for them; and (b) more children means a larger population, and a larger population means the acceleration of scientific and technological progress. This, in turn, means the wondrous techno-utopian delights of the posthuman era will arrive sooner for everyone to enjoy.

Here’s an issue that I didn’t discuss in the article about pronatalists and replacement antinatalists: pronatalists don’t just want more kids, they want more kids with specific traits such as “high IQ.” Most readers of this newsletter are well aware of this, but it’s worth underlining: the goal is to use advanced technologies to engineer superior biological humans.

A major problem here, from my perspective, is the normative term “superior,” which, as such, requires some underlying values to spell out. And the underlying values that inform how many pronatalists define “superior” tend to be deeply elitist, classist, racist, sexist, and ableist. This is a main reason that I reject these efforts to create designer babies: not because we’re “playing God” (an argument made by some bioconservatives), but because those advocating for modified humans have a vision of what humanity ought to become that I find to be morally repugnant.

Simone and Malcolm Collins are perhaps the best-known pronatalists today. They’re the face of the movement and, so far as I know, they’ve already selected some of their children specifically “for traits such as IQ,” which, as we’ve discussed, is a terrible measure of human intelligence.

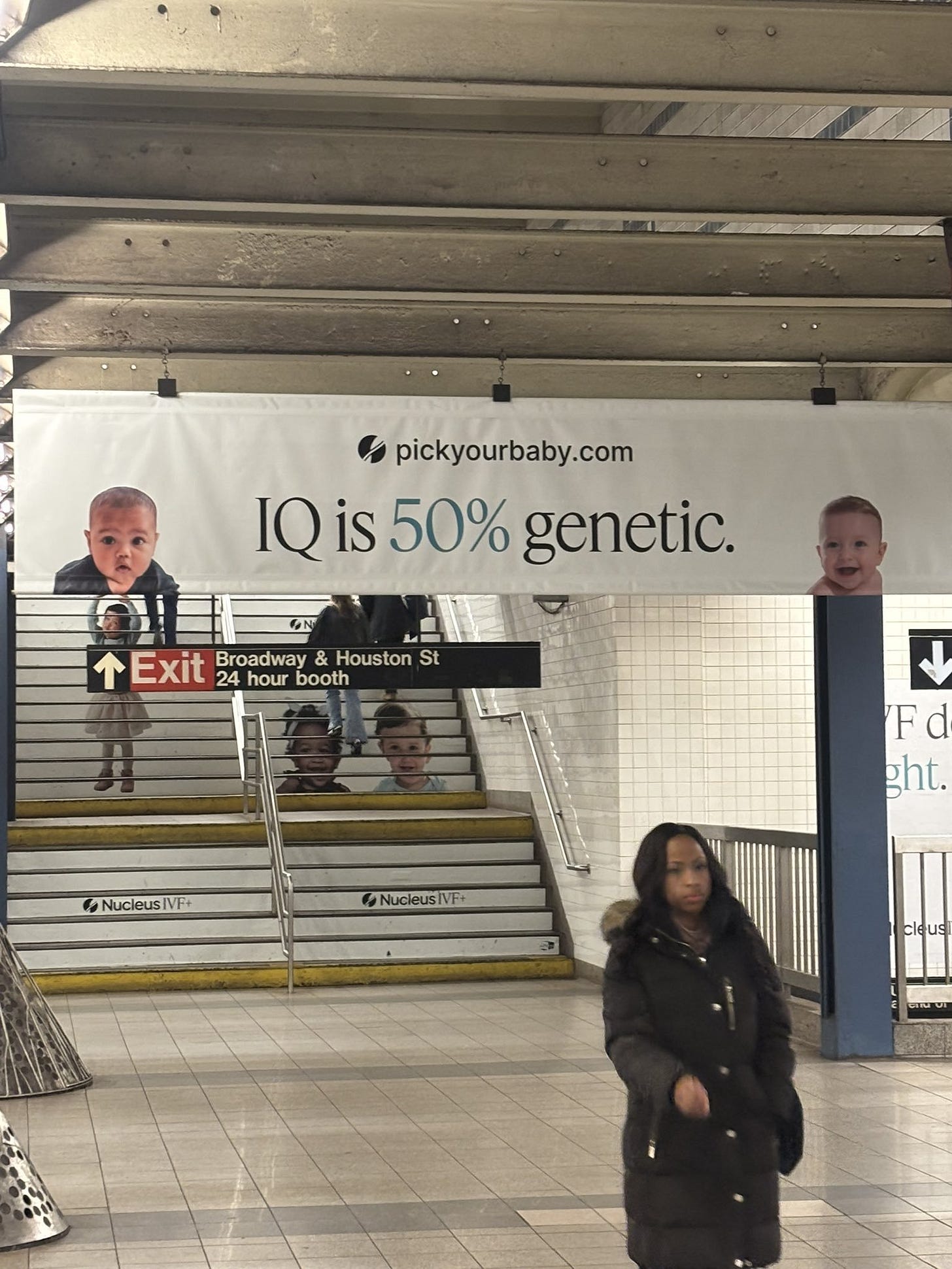

Now, you might have seen people on social media sharing this image:

It’s from a company called Nucleus Genomics, which offers services that analyze nearly 100% of a prospective parent’s DNA “to give you a more complete picture of your health risks.” It also offers a “Nucleus IQ score,” which it describes as “the only IQ score prediction based on your DNA and the latest science.” As noted in a recent KQED interview, Nucleus is one of the companies that has “unveiled tests that they say can give some insight into an embryo’s future IQ, height, or even eye color.”

In the same KQED interview, Katherine Long of the Wall Street Journal is asked about a new Bay Area startup called Preventive, which aims to genetically modify babies. She says:

So, our reporting found that about six or seven months ago, a company called Preventive incorporated it in a downtown San Francisco WeWork. And it’s aiming to tackle a problem that has terrified and exhilarated scientists in equal measure for decades, and that is editing embryos to create a genetically engineered baby. Our reporting found that it’s backed by Sam Altman’s family office and Coinbase cofounder Brian Armstrong.

The interview is worth listening to in full. I bring it up to emphasize the ubiquity of transhumanism in Silicon Valley. Many Valley dwellers explicitly advocate for the replacement of humanity with AGI, a view called “digital eugenics.” Others envision biological humans merging with machines in Kurzweilian fashion and uploading their minds to computers as we transition from the biological to the digital era. Some of the pronatalists in the latter group are pushing a different kind of near-term biological eugenics: creating genetically modified superhumans who can further accelerate “progress” in science and technology and someday join the ranks of posthumanity in utopia.

Will such efforts work? No (and we won’t be colonizing Mars or building superintelligence anytime soon, either!). As mentioned, IQ is a stunningly bad measure of that incredibly rich and multifaceted phenomenon we call “intelligence,” and my understanding of contemporary genetics is that (a) we have a very poor understanding of the genetic basis of “intelligence,” and (b) the hereditarian claim that “intelligence” is largely based on our genes is factually incorrect.

However, if such efforts were to prove successful, I think the outcome would be quite awful, for reasons the philosopher Robert Sparrow notes in his excellent article “A Not-So-New Eugenics.” To briefly summarize his argument:

Imagine that we live in a racist, sexist, classist, ableist, homophobic, and transphobic society. Let’s say it’s a society exactly like ours! Now, if you’re a prospective parent, you want the very best for your kid. Since you can’t singlehandedly dislodge the systemic racism, sexism, etc. embedded within our society, you look to genetic engineering as a way of producing a child who will, statistically speaking, have the best chances of success in life. And which people get a head start in the race? Those with white skin, of course, because society is racist; males, because society is sexist; people without disabilities, because society is ableist; and those able to sit still for hours on end studying for the SATs to get into top-notch universities. Etc.

Consequently, the “rational” choice for parents is to select for white, male children with “high IQs,” blonde hair, blue eyes, and so on — all the traits that our pernicious societal norms identify as markers of excellence and superiority. Furthermore, a point that I don’t recall Sparrow making: as more and more parents opt for such children, the pressure on those who resist will grow — racism is bad enough when 72% of Americans are white (which is the case right now), but it will be even worse when 80%, and then 90%, and then 95%, are white.

Sparrow thus argues that the most likely outcome of designer babies is a society that looks exactly like what the depraved eugenicists of the 20th century hoped to create. There’s an invisible hand, so to speak, that will push parents to select for children with nearly identical traits, resulting in a radical homogenization of society where straight white males will dominate even more than they do right now. This is … not good.

But what do you think? What am I missing? How might I be wrong? As always:

Thanks for reading and I’ll see you on the other side!

“There’s an invisible hand, so to speak, that will push parents to select for children with nearly identical traits, resulting in a radical homogenization of society where straight white males will dominate even more than they do right now.”

So, a field of uniform, tall, genetically-identical corn, as far as the eye can see.

You know, that kind of monoculture does especially bad when corn blight hits.

Most of the fear here comes from treating AI as an epistemic shock rather than a coordination shock.

When intelligence becomes abundant, the scarce resource becomes orientation.

Students offload thinking, researchers overclaim, startups promise impossible timelines, and parents fantasize about engineered children not because the actors are irrational, but because the system is desynchronized.

Meaning collapses first.

Institutions collapse second.

Not because of AI’s capability, but because of AI’s speed.