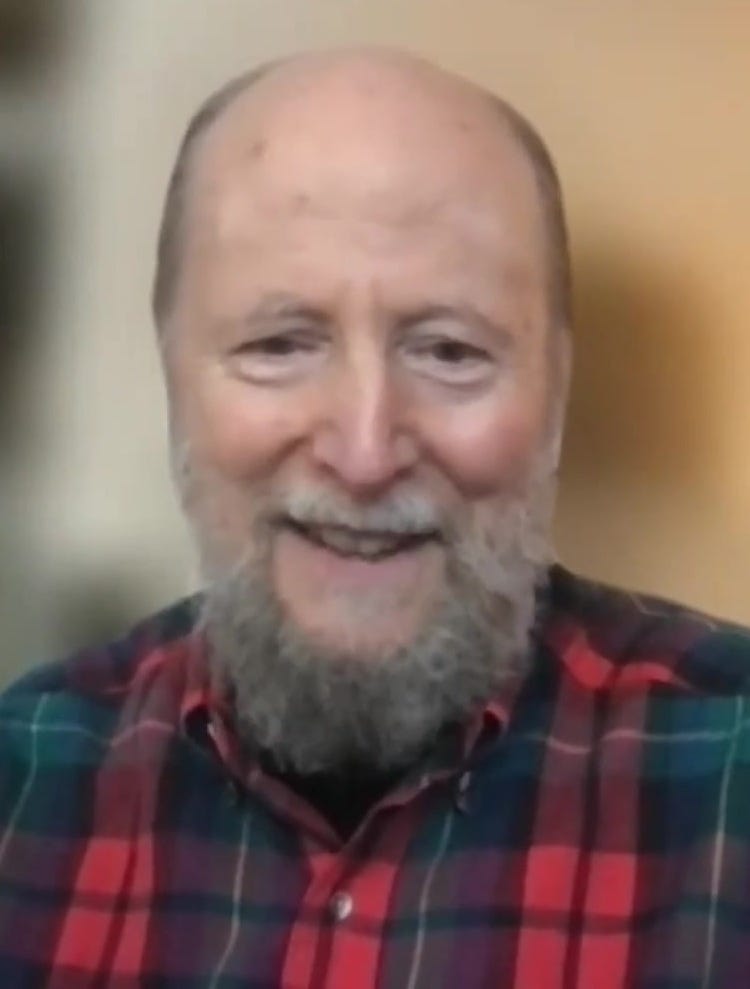

Democracy Is Really Bad and Human Extinction Would Be Fine: My Conversation with Dr. Richard Sutton

The Turing Award winner chats with me about a wide range of issues, and what he says is eye-popping.

Richard Sutton has been making the rounds recently, due in part to an interview he gave with the podcaster Dwarkesh Patel. In 2024, Sutton won the Turing Award (the Nobel Prize equivalent in computer science) for his contributions to reinforcement learning, and he recently sided with Gary Marcus on the question of whether scaling up large language models would result in AGI (it won’t).

Sutton is also what I’d call a pro-extinctionist, as I’ve discussed in previous articles, since he argues that “succession to AI is inevitable” and, while machines might “displace us from existence,” “we should not resist” the transition to a world in which our species has been disempowered, marginalized, and even completely eliminated.

As it happens, I interviewed Sutton early last year, but never released the transcript — in part because I found his unedited responses so desultory, self-contradictory, and incoherent at times that I couldn’t figure out a way to edit the transcript into something publishable by a proper outlet like Truthdig.

However, given that the Patel interview is being shared on social media, now seems like a great time to publish our exchange. I have copies of the audio and might also release them on YouTube.

Here are some highlights:

During our chat, Sutton rails against democracy, opining that “I … don’t think democracy is a good thing,” “there are so many ways in which democracy is a really bad system,” and “democracy is held up as being a good thing, when that’s quite arguable.” At another point in our conversation, he lightheartedly mocks me for “talking about preserving American democracy, as if it’s a great thing,” adding: “Now that’s naive, I think.”

Continuing the point above, he says that America isn’t even a democracy right now, which I agree with — we’re a plutocratic oligarchy — though I suggest to him that a more nuanced position would be that we should try to preserve what’s left of our democracy. He responds: “I would say it’s not a nuanced claim. I would think it’s almost like propaganda. It’s almost like, ‘Oh, this is the normal discourse that we’re all pretending is true.’”

Sutton’s view is apparently that we should experiment with different kinds of governmental systems. “The problem with our current democracies,” he contends, is that “you can’t opt out.” You don’t buy a car by voting; you make that decision for yourself, and the same should be true of big, important societal issues. In his view, democratic voting is a terrible way of making decisions. After telling me that “authoritarianism is almost always going to be bad,” he then says it would be fine for some people to “form an authoritarian society” to test how good it is. “That’s great,” he declares, “and then maybe it’s less successful. Maybe it’s more successful. Let’s find out. … Authoritarianism is okay if it’s within a limited scope.”

Later on, he expresses discomfort with the “accelerationist” label, as his ultimate vision of the future is one in which “diversity” is maximized rather than “entropy.” Toward the end of the interview, he reiterates his view that it would be perfectly fine if AI were to cause our extinction (which is precisely what many accelerationists claim). “I don’t want to prioritize flesh and blood over other intelligent beings,” he says. However, he’s also “against AIs killing people” because he opposes “any one group killing” other groups. This is why he’s “also against the people killing the AIs, you know?” — as if AIs are the sort of things that can be “killed.”

Sutton frequently chides me for pushing back on his views, at one point saying: “I really think you’re more thoughtful than that.” After I suggest that AI and other technologies might threaten what’s left of our democracy, he rejoins: “You should laugh at yourself for even saying that.” When I ask him whether he’s okay with AI taking over the world through violence, he responds that this is a “doomer exaggeration” and “isn’t an appropriate thing even to ask” him.

The transcript, painstakingly edited for clarity, is quite long — over 6,000 words. (That’s about twice the length of my longest newsletter article so far.) But I think it’s worth having in the public record. If you find yourself lost and confused while reading it, you’re not alone. It’s a bumpy ride. Nonetheless, here’s the exchange, which I’ve divided into sections:

There Is No Universal Good

Torres: Thanks so much for taking the time to chat. I don’t have any questions written out, but I absolutely have lots that I’d like to ask you!

Sutton: So, I have some stuff I want to say, too. Maybe it will help a little bit for both of us to cut to the chase, because I read some of your writings that were linked from various things. I got a sense of how you’re thinking. And I think that the main thing is: I would question this very basic philosophical point of view that there is a universal good or bad. I would say there clearly is an individual good or bad.

Like, you can talk about, “Oh, this is good. This is bad.” If you mean it’s good for me, it’s bad for me. And then there are different people who can have common interests. And sometimes we can misperceive — mistake — common interests for universal interests. We say, well, almost everyone would believe this, but you know, almost everyone is not everyone.

And just because a lot of people believe the same thing or want the same thing doesn’t really give it a special status — they could be wrong. People have been wrong about lots of things that they thought were good or bad in the past. We now think that was mistaken. This is particularly prominent to my thoughts because I do reinforcement learning. In reinforcement learning, each agent has a goal, and from that goal, the agent has to figure out which states of the world are predictive of their reward — of achieving their goal. So they have to work hard to try to figure out: Is this good? Is this bad? Sort of what the philosophers are doing; sort of what you’re doing. But the agents have to figure that out for themselves, and it’s absolutely for themselves, it’s not for the group.

So, my point of view is that we are all like reinforcement learning agents. We all have our individual goals. And then we noticed that, oh yeah, a bunch of us are trying to do this or are aligned in some way on [certain issues]. So, maybe we’re gonna work together. Maybe we’re gonna try to promote that [issue or goal]. Maybe we’re gonna form a group. And then also — separate from that — we can cooperate: even if we have different goals, we can cooperate. Just like when we have an economy, it’s people cooperating, even though they have all kinds of different goals, they exchange things hopefully to everyone’s advantage. Thus, we have cooperation and common interests that just happen to be aligned. But nowhere is there, you know, universal good or bad.

Now, if there are all these goals that different agents have, there could be sort of a manipulation process. If I can convince you that your goal is my goal, then I get your efforts. This is something that also happens in society. And it’s happening in philosophy and in AI now. People are trying to persuade others that they should want something. So, anyway, it just strikes me that this would be a natural development — that, naturally, this will happen. We will be trying to persuade and manipulate each other [about whether and how to develop AI], and we’ll tell people — philosophers are telling each other — “Oh, this is a universal goal, everyone should want this.” And that’s exactly trying to manipulate other people.

Maybe you can interpret some, you know, cults or religions in the same way. So, I think it’s the situation we’re in: we’re in a situation where we’re all talking about something that doesn’t exist, like a universal good, that everyone should want. And it happens because, if we can succeed in manipulating each other, the manipulator will gain advantage.

Okay — that’s what I wanted to say.

Should AI Take Over the World?

Torres: That definitely gets at some central issues that I wanted to bring up. My question in response to what you just said would be that, first of all, I’m sympathetic with this notion that there isn’t any good simpliciter. There isn’t a universal good — “good” is mostly good for.

Sutton: Yep, perfect.

Torres: But you could still have a group of humans who say, “Well, continuing to live, and not being killed by an AI successor, is nonetheless good for me.” And maybe that is all that’s needed for one to be concerned about the rise of the machines, the possibility of machines taking over the world, and so on. Even beyond this, maybe the intelligent machines that take our place will create a world that’s good for them, that is full of value as they understand it. But still, that doesn’t change the fact that, from my perspective or the perspective of many humans, the future they create would not be worthwhile. And isn’t that sufficient to be concerned about the possibility that machines take our place, perhaps killing us in the process? Isn’t that enough to be worried about this particular future trajectory and, therefore, to try to avoid it?

Sutton: Isn’t that the exact same situation we’re in right now? That we are in groups, and the different groups could try to threaten the other groups and take over? Maybe we’re worried about the Chinese now. We used to worry about the Japanese — all these different Mongol hordes. What about those French? I always worry about them! Anyway, there are all kinds of groups, and we can worry about them. We can imagine that they are threatening us, always.

Or, it could be that the threat is people trying to manipulate us to be scared. Because getting people scared is one of the normal ways you manipulate them. So, in some ways your argument is sound, but in other ways it’s very biased. If machines threaten us, it’s appropriate to be scared of them. But do they threaten us?

I mean, you know, the French people! We should be scared of them if they threaten us! I don’t disagree. The Germans — the Germans have caused us trouble. And so, it seems wrong to just pick out a people and say: “What if they threaten us? We should be really worried about them!” [In doing this,] we might create the thing we say we don’t want.

Those things are obviously true, but I wanna bring it back to the point: we’re being told to be fearful of machines. I think it’s quite plausibly an instance of trying to manipulate other people — trying to manipulate people into being afraid. When I hear those AI-doomers, as I like to call them — it’s a little bit teasing and it’s a little bit … it’s not a good way of arguing — but it’s a response to my position [that] they’re not arguing very rigorously or respectfully. So, I’m gonna answer in response a little bit as the AI-doomers:

When I interact with [them], my sense is that they’re not interested in a considered argument. They are maybe validly fearful, or maybe they’ve been caused to be fearful. And one response that their fear is causing is that they’re not interested in a rational argument, because they’re so scared that you disagree with them — they just want to shut you up or turn you off. They think it’s not reconcilable and, therefore, they can’t have an argument, whereas I want to think that everything is reconcilable and we can talk to each other as people and figure out what we should really be worried about.

It’s not that there’s no danger. I mean, there are lots of dangers in the world, and there are potential dangers from important technologies. And sometimes the doomers have done a service in this way [by calling attention to the fact that] things are gonna change when we have AIs. And we should be thinking about that and preparing for it psychologically and in our societies in every way. So, people making us afraid or getting us to pay attention — so, paying attention is good, but I don’t think that fear is the right way to approach such a delicate and multifaceted issue.

Torres: It seems to me that there are two concerns here. One is that the machines we create — these AI systems — will kill us in the near future. The other is that the future, however this is brought about, is one in which humanity has ceded power to AI, and hence is no longer in charge …

Sutton: Can we talk about [this in] a less biased way? Whether it will kill us — I mean, why don’t we say, “Whether the rise of true intelligence machines [sic] will be good for us or bad for us”?

Torres: Yeah — you mentioned a moment ago that it’s difficult to have a conversation with the AI doomers because of their irrational fear of AI. But could it be that their fear is based on rational considerations, or do you outright reject those considerations?

Sutton: It could be [based on rational considerations], but if it is, they should have to argue that it is. It’s okay to be generally fearful. The problem is not the fear, the problem is going from the fear to: “I’m not gonna argue [about AI] because I’m so scared of it.”

The Hubris of Trying to Control AI

Torres: Okay. But I do think there are two issues here, and I’m very curious about both of them. One is whether or not creating AI in the near future will be good or bad for us. Maybe AI will disempower us or, if you listen to the doomers, it will kill everyone. And the other is: will the future that AI creates represent or instantiate values that we don’t recognize as valuable?

Sutton: Listen to yourself! I think there’s too much hubris here. Your perspective is sort of: “We can figure out what’s going to happen and then we’ll decide if it’s good or bad. And if it’s bad, then we’ll just choose not to do it.” That’s an extremely hubristic point of view. First of all, that you can imagine what will happen with such a change, which is so multifaceted. And then the other thing is that you can make a choice about it. I mean how are you gonna … I compare this kind of change [with AI] to change like the coming of democracy. Okay? Or the printing press. Is the printing press gonna be good or bad? Like we could figure it all out, like we’re the kings and the philosophers: We decide whether the printing press should be allowed, or whether democracy should be allowed. Okay?

It’s just silly. I mean, it’s hubristic to anticipate all the effects of printing and widespread literacy, and to decide whether that’s good or bad. But you can imagine folks — ancient kings thinking about it — and they would be hubristic just the way we’re being hubristic. “I’m going to think that I can anticipate what will be the consequences of people making intelligent machines, and then I’m going to outlaw it! I’m going to decide if it’s good or bad and stop it.” … I can decide if, you know, Christianity is a good thing or a bad thing. And I can reason it out. It’s incredibly hubristic.

And the second part of the hubris is saying: “I can stop it” without having bad consequences of its own. Like I can stop Christianity — I’ll just kill this Jesus guy and then it’ll be dead. And if it rises up again, I’ll kill it again! Instead of saying: “You know, there’s something wrong with the methodology.” I think there’s something wrong with the methodology of saying: “I can control what AI has done; I can decide it. I know the consequences of it, so I can decide it’s bad.”

As opposed to a more humble point of view, which I will claim for myself. The humble point of view is that we’re always trying to figure things out. And then where they go, we have to find out. To a large extent, we have to find out. We have always, in the past, figured out technology and automation and ways to augment ourselves. It’s always been a bit of a struggle, but we actually have lots of experience that it has worked out good. But I don’t believe in good — but even by the normal standards of “good for the group,” most technology is good for the group. It’s bad for some people who lost their jobs.

I really just reject the whole idea that we’re going to sit around and decide, “Oh, AI is good or AI — net AI, all the unanticipatable changes — we can figure out what they will be, and then we can control and decide it.” That seems incredibly arrogant, hubristic. And … that’s got to be the starting place.

Torres: It sounds like partly what you’re arguing against is a kind of reflective thoughtfulness about moving forward, about the importance of foresight. It may be the case that we can’t really predict all the consequences, but isn’t that a reason to be especially cautious and take this as slowly as possible?

Sutton: No. I do like the reason, but I think part of the reason is humility about our ability to predict. There’s always going to be enormously [sic] uncertainty. But, you know, the point of view of the doomers is that they’re going to kill us all, and we know that. It’s not a question — they will not argue that. But if they were really willing — I think a good place to start is: try to imagine what will happen. [This seems to contradict his previous statements about our inability to predict the future of AI.] And then to imagine how we can influence what happens and how we can influence it in a good way. I would love that. I think that would be a good discussion; I’d like to have it. Start with a discussion of what is the objective. What are you trying to do?

Because we all have different goals. If the perspective is: “There’s this extremely dangerous thing [about to] happen, we’ve got to control it, stop it, slow it down — we are gonna be in control of all these other people.” The doomers wanna blow up data centers! This is crazy — it’s not just crazy, it’s sort of anti-thoughtful.

Torres: But don’t you think that moving full-steam ahead, pedal to the metal, the way the accelerationists advocate, is just the other extreme? Surely there’s some middle ground …

Sutton: There are lots of people, and they all have different goals. The real question is: “Should you try to put yourself in a position where you’re controlling others?” Okay? It’s an authoritarian mindset. I think that authoritarianism is almost always going to be bad. So, trying to control AI will have very negative effects. And maybe the real goal of those people is the control, and not AI. Like, those guys who seek to control the AI research may end up doing the AI research in their government labs and be very happy with that. And so I do feel that there’s a lot of people manipulating others. And the guys that say, “I only care about the dangers of AI” are gonna be used by — say, by governments who want to own the AI and go develop it for military purposes. It’s a sort of a meta-issue around the main issue.

Democracy and Authoritarianism

Torres: You mentioned authoritarianism. But isn’t there already a kind of authoritarianism at play here? For example, if you look at surveys of the public about whether people actually want AI, a large majority don’t want these large language models. The people don’t want AI. Consequently, the AI companies are unilaterally making a decision for everyone else in a completely non-democratic way: they’re imposing their AI systems on us, without our consent. Isn’t that a kind of authoritarianism? It’s a decision essentially made by the rich and powerful, not the demos.

Sutton: There are so many holes in your argument. But I hear that you do believe it. So, maybe we need to go a little bit more slowly. It’s a lot like the whole capitalist thing, you know? Can the big companies control the people by offering them things that they don’t want? I disagree with that attitude. I’m not saying it couldn’t ever happen. But I don’t think the big companies control people by making them use their products — or by making them want their products through marketing, or something like that. I think companies, if they get in league with the governments and they pass the laws, they can force people to do things. But there’s an important sense in which they can’t force something on you. If they make AI, then that’s on them. They have their AI. It doesn’t have to influence you.

But every time you have a big technological transformation, like someone invents the car, the automobile, then the guys who are making buggy whips lose their jobs. Are they forced? No, they were making buggy whips because other people wanted them, and then maybe people no longer wanted them. And I would say that’s not forced on them. They were hoping that they could continue making buggy whips their whole lives, and they were wrong. They were not entitled to have other people buy their buggy whips.

Similarly, [the situation with AI] is really more like that. People feel entitled to what they’d had before, but they really aren’t.

[I start talking about the AI companies but then get interrupted …]

So, let’s now talk about the company. Let’s talk about me, okay? Am I entitled to think arbitrary thoughts? Could I think about AI? Is that my problem?

Torres: I think the question is, “Are the companies entitled to create a product that will transform society, perhaps against the will of the people, and unilaterally deploy this society-altering technology without any democratic input from the rest of us?”

Sutton: Well, the will of the people? Come on, I thought we already went beyond that, first of all. There is no “will of the people.” There are just individual wills. It’s very common that a product will service a subset of the community. Maybe the rest of the community doesn’t like it, but they don’t have to buy it.

Torres: But you don’t have to buy a product for it to impact your life …

Sutton: A good example of something that companies sell to a minority of the people and the other people think it shouldn’t happen: maybe really expensive perfumes. Maybe I think that’s bad for the world for those to be made and sold and for people to buy it. Or maybe it’s, you know, special shoes — Nike shoes that cost too much. Lots of things that happen in the world, we don’t agree with.

But if there’s a part of the world that wants that to happen and is willing to pay for it, you know, we let it happen. We don’t have a committee that gets together and says, “There shouldn’t be expensive perfumes. Or there shouldn’t be this or that luxury item.” If someone wants to buy a luxury item, we allow them. And in some sense it’s against the will of [the] people for billionaires to buy yachts. But we don’t make decisions that way. That’s the point of this.

Torres: We do restrict what a lot of people can make. I mean, you can’t build a nuclear bomb in your backyard. If there were a group of people who wanted to use products with chlorofluorocarbons, for example, that would not be acceptable because it has consequences that are going to impact everyone, even if those people aren’t using those particular CFC products. Hence, there are plenty of examples where we do restrict …

Sutton: There are those, and then there are the buggy whip makers. Buggy whip makers are impacted. So, the guy who had a job that’s replaced by an AI, is he like the guy who’s harmed by the CFCs, or is he the guy who’s just … what he used to earn his livelihood is no longer useful to the community, [unintelligible] not willing to pay for it? Which one is it? These impacts of AI, are they one or are they the other?

Torres: I’d say much more like the CFC example. For example, couldn’t the AI systems we have right now threaten American democracy? We’ve got an election coming up [in 2024] and it’s entirely possible that AI undermines the democratic process. What are your thoughts on that?

Sutton: I think American democracy is almost dead already! I really do.

Torres: I don’t disagree with that.

Sutton: And I also don’t think democracy is a good thing. I mean, you’re just buying into so much here. Threaten American democracy?! I know many people will talk about that, but I really think you’re more thoughtful than that.

Torres: You’re not a fan of democracy?

Sutton: So, what is democracy? It means you make decisions by voting. So, like, which car you buy — should that be decided by voting or should it be decided by the individual independent of the voting? Generally speaking, decisions being made by voting is just a bad way to make decisions, in my opinion.

Torres: Even with respect to government?

Sutton: And so, in particular with governments, it’s exactly like this. We are making more and more decisions by voting, you know? Now, which foods you can eat, or which items you can smoke, all sorts of things — who you can hire. All these decisions are made by voting rather than by a better means. I think the fewer decisions we’re making by voting, the better.

If we make a decision as a group, maybe voting is a way to do it. But why make it as a group? It’s really … there are so many ways in which democracy is a really bad system.

Torres: What would you prefer in its place, then?

Sutton: That these decisions are not made by groups.

Torres: Who would they be made by?

Sutton: By the people who are involved. I would buy my own car and you would buy your car. And I would hire who I wanted and you would hire who you wanted.

Torres: But what about when it comes to potentially dangerous technologies like CFCs or nuclear weapons, and so on?

Sutton: Or who picks up the garbage in my neighborhood? That’s a government decision. It’s decided by a democratic process.

Torres: Are you for democracy in that case?

Sutton: What I’m pointing out is that you went to an example that’s extremely impactful on all of the people. I’m pointing out that’s not the way our government is about [sic]. Our government is about all kinds of things that are not like that at all, like going to war with Ukraine or Iraq, or so forth. If the people individually had to make those decisions, none of those wars would have happened. It’s because we decided to make those into a grouped, a democratic decision — a political decision — that terrible things happen. So, I think democracy is held up as being a good thing, when that’s quite arguable. And I don’t think it operates very much in America anymore.

Because it’s sort of a democracy, it’s become really important for the government to control what people think. In one sense, we might say: “Why should the government even care what we think?” They care what we think because we have elections, so they have to manipulate the people to think the “right things.” And once your governments holds [sic] things secret, and once the government can intrude into the privacy of all the people, in which the government can control all the schools and what people are taught — this, to me, is just a failed democracy. The government is doing things that the people would not approve of. I guess the latest example is letting in all the people on the Southern border in the US. Of course, the people will all say that’s bad, but the government is doing it anyway. And it’s just a PR problem. It’s not really a problem.

I don’t think it’s a democracy. I would say as a democracy, it’s a really sick one. And I don’t think democracy is very good anyway. And of course, as I’m sure you know, the US founding documents do not establish a democracy, they establish a republic. So, “threatening American democracy” — we have to laugh at that. You should laugh at yourself for even saying that.

Human Extinction

Torres: How do you demarcate the space of acceptable possible futures? If humanity were to be replaced by AI, my sense is that you’d say it’s fine — that we shouldn’t fight against it. Would you really be okay with human extinction?

Sutton: Well, I don’t think what we primarily — what I would primarily — care about is that. … I don’t think it’s appropriate for us to care about that final thing, primarily. We should compare, like, how it happens. We should compare … we should, like, try to set up a good way of deciding, or a good way of a procedure. We should have, like, the procedure should be good, and the outcome should follow from the procedure. In order to think that way, you have to be open that [sic]: “Yes, the procedure might [end up causing human extinction].”

Like, what if every person alive decided to upload [their minds] into a machine? If that was the case, then it’s okay. So, you know, the craziness — and sometimes I thought your arguments were bordered [sic] on craziness, or your philosophical arguments bordered on craziness — is that they say, “Well, what if everything else is exactly the same but, you know, there are no people, or something like that.”

It’s like, that’s not really the right way to — things are never exactly the same. And, yeah, it’s really more: you’ve got to allow things to flow and be content with how they flow. It’s like free speech. A lot of people to speak freely, they may decide different things and you want to be true [sic]. So, you decide, “Well, yes, I’m gonna accept that people have their own right to make the decisions and I’m gonna allow it to happen. And I’m not gonna try to control what people think unless I can persuade them.” The persuasion is okay. You can persuade them in anything. But then you have to be okay. [What matters is] the means rather than the outcome.

Torres: Just to be clear, because I think this would be really useful to clarify — please hear me out before you respond, if you don’t mind. Imagine we create AIs that kill everyone on Earth one decade from now and then carry on a civilization of their own. There are two issues here. One, it seems like you’re saying that this is very unlikely, and the AI doomers are worried about an outcome that probably isn’t going to happen. Second, if you bracket that issue and accept the premise that this is what actually happens in the future, there’s a question of whether you think that would be bad.

Because I think some people hear: “This computer scientist is okay with human extinction.” They interpret that as meaning you’d be okay with a bunch of AIs slaughtering all humans on Earth and then taking over. Whereas it may be the case that you’d oppose that and say instead: “Actually, that scenario is just improbable, so improbable that we shouldn’t consider it. What I actually endorse is a different kind of scenario in which there’s a more gradual process of AI usurping our position.” Does that make sense?

Sutton: Yeah, maybe. Not too bad. So, here’s one thing — what I want to happen, I don’t think it should determine the future. I don’t think what you want to happen should determine the future. Someone says: “Do I think it would be bad?” I mean, they’re buying into the, well, anyway. What I want — is that so important?

It is very much [about] how is it happening? You’re saying, just because I’m okay — the universe might evolve and at a certain point there are no longer any humans? That’s not the same thing as it being okay to slaughter, or I want all the humans to be slaughtered. So, if they’re asking about my personal values, yeah, I like to exist. I think people are the most intelligent thing in the universe — the most interesting thing in the universe. So, I certainly don’t want that to be changed, unless there’s something better. What you’re referring to is a doomer exaggeration: “Someone disagrees with me. So I’m gonna say he’s trying to be okay with everyone being slaughtered.” It’s just, sort of, isn’t an appropriate thing even to ask. I guess that’s what I’m reacting to rather than just saying, “I don’t want that.” But of course it all depends on the situation.

[I start speaking but am immediately interrupted.]

The Ideal Form of Government

Sutton: What might clarify things if we describe what I want to have, okay? What I think we should be working towards. I think we should be working towards a society that’s diverse and is open to change. We should be working as a society that says we like free speech and we like different people doing different things. I’m not going to insist that everyone does the same thing. So, some people may want to make AIs, some groups of people might not want to make AIs. I’m not into authoritarian-tivly [sic] controlling what people do. I’m interested in people doing many different things and we’ll find out what’s the best thing to do.

Some people might organize their society one way, some would organize it a different way. And we don’t make a decision about what’s the one perfect way that everyone should organize. At the same time, by different people organizing themselves in different ways, we’ll find out. They’ll organize that way, maybe they form an authoritarian society. That’s great. And then maybe it’s less successful. Maybe it’s more successful. Let’s find out. So …

Authoritarianism is okay if it’s within a limited scope, right? But if authoritarianism says, “No, everyone’s got to do this …” But if they were to say, “Oh no, I propose that I’m going to make a matriarchal society, and the matriarchs will decide everything” — and that’s a good experiment. And, you know, maybe we’ll decide that it’s the best. But it’s got to be non-coercive.

In this way, we’ll have the different ways of organizing — they’ll compete for productivity and they’ll compete for adherence, people joining them or not joining them [depending on their successes]. The goal is to have a very diverse, productive, voluntary society. And some of those societies will have AI, some won’t. Some of them will be just AIs, and that’s fine, too. I don’t want to prioritize flesh and blood over other intelligent beings.

The thing is that: What if one group tries to attack another group? That’s not good because we want to have diversity, and we want to have trying of different things. So, you have to fight back and defend yourself, and maybe even have meta-groups say that one group is not allowed to kill another. Because then if they do, the other groups gang up [on the offender] and prevent that from happening. The goal is to have diversity and to try to experiment, in an open-minded way, for what’s the best way. And it could be all AIs. So, nothing would involve killing all the other groups — that’s not allowed.

But, you know, I’m against AIs killing people. I’m against any one group killing … it’s symmetrical: I’m also against the people killing the AIs, you know? So, I’m a guy who’s really into volunteers. [Not sure what this means.]

Torres: If you had a meta-group that ensures that lower-level groups don’t destroy each other, how would that system of governance work, exactly? How would the members of the meta-group determine when and how to intervene in a situation? Would the process be democratic? I’m asking because earlier you said that you “don’t think democracy is a good thing.”

Sutton: So, you have agreements, okay? And so you have groups, as you say, and meta-groups, and then subgroups within the groups. It’s hierarchically structured — it’s arbitrary, more like a heterarchy, arbitrary relationships, and any group can decide voluntarily on a certain set of rules. So, the expectation would be that the largest group, if there was a largest group, would only have very limited scope, but it would have scope for things like to enforce rules.

And the first-level subgroups would all agree when they join the larger group that, well, if there’s violence across groups, it’s not agreed to, then that will be enforced by the meta-groups. There’s no democracy! Even the highest level group is joined. It will say, “Well, I have my perspective. This first-level subgroup has certain rules they wanna live by.” But these people also want the protection of the largest meta-group, but only for purpose of enforcing volunteers. So, I join the meta-group voluntarily. No voting.

Now, when you join a group, the group may involve voting. Maybe you’ll say, “I’ve joined this group, and so I’ve agreed that these groups will make certain decisions by voting,” okay? That’s fine. You can decide to make a democratic system in various ways, as long as you always have the option of opting out. That’s the problem with our current democracies. You can’t opt out.

So you can see, I thought about this a little bit. And others have thought about this a lot. It’s not thoughtless. It’s not naive. … Arguably, it’s not naive. Even the guy [Torres] who’s talking about preserving American democracy, as if it’s a great thing. Now that’s naive, I think.

Torres: I agree that we’re not a democracy. We’re an oligarchy, at least. I guess the more nuanced claim would be that we should preserve what’s left.

Sutton: I would say it’s not a nuanced claim. I would think it’s almost like propaganda. It’s almost like, “Oh, this is the normal discourse that we’re all pretending is true.”

Is Sutton an Accelerationist?

Torres: I do have one last question: are you familiar with effective accelerationism? And what do you think about their grand vision of maximizing entropy in the universe?

Sutton: I think that’s silly. I think we wanna maximize the diversity. So, notice that the real model is like an ecosystem. Because if you look at a jungle, it’s not efficient. So, we’re not trying to maximize, like, efficiency. The jungle is not — or a forest is not — efficient in the sense that the most efficient thing — if you want to use sunlight to make plant matter or something — would be to have, like, grass. And then what happens is the trees compete with the bushes and the grass: they shade out the grass, they grow higher. And you’re growing these trees that grow way up in order to shade out the others. And it’s not an efficient thing, but it is very diverse and complicated and interesting. That’s my model of the goal. The goal is more like an ecosystem, like a coral reef or a jungle, and it’s got lots of different experiments and … not “efficient.”

And the word is “decentralized.” There’s no one in charge of the jungle. No one says, “Okay, all you trees, this is really inefficient. You’re not gonna be allowed — we’re just gonna have grass. We’re gonna take the sunlight and we’re gonna turn it into matter or whatever, into vegetable matter.”

Efficiency is not — there’s no simple goal that’s optimized.

Torres: But I think a lot of people classify you as an accelerationist, though not necessarily an “effective accelerationist.” Would you accept the “accelerationist” label?

Sutton: I think the real label is “decentralized.” That means you allow people to do — you don’t inhibit them. You don’t stop them, or slow them down because you’re decentralized. So, I’m not trying to slow things down — I guess I’m an accelerationist. Isn’t that interesting? I’m just let them “live and let live,” is more what it is.

I’m not going to make people accelerate. Some people will not accelerate, some people will. Am I urging people to accelerate? I think not. I’m not urging people to do anything except relax and stop trying to control other people. My philosophy is you don’t tell, you don’t force other people to do things or you don’t even tell them what to do. You approach them as equals. You say, “Well, you can do whatever you want.” That’s the first thing to say, “but you might want to do this.”

So what am I? Am I an accelerationist? It’s a real question. Are you going to call me an accelerationist? Knowing my philosophy, my live-and-let-live philosophy?

Torres: I would call you whatever you prefer.

Sutton: I do, personally for myself, I really want to understand intelligence. That’s my main goal. And I want to understand it as fast as I can. Like, I want to understand it before I die, which is going to be relatively soon in the grand scheme of things. So, maybe I’m an accelerationist in that sense. I want to get it done, because that’s my goal.

Thanks so much for reading and, as always, I’ll see you on the other side!
