The Moral Cowardice of Leading Effective Altruists (and How EA Has Probably Made the World Worse)

(3,500 words)

Effective Altruists (EAs) like to see themselves as morally superior to everyone else. They are, in their own eyes, paragons of moral excellence — the only ones out there doing altruism the “right way.” Many understand their mission in messianic terms — as literally saving the world.

As Nathan Robinson writes in Current Affairs:

The first thing that should raise your suspicions about the “Effective Altruism movement” is the name. It is self-righteous in the most literal sense. Effective altruism as distinct from what? Well, all of the rest of us, presumably — the ineffective and un-altruistic, we who either do not care about other human beings or are practicing our compassion incorrectly.

We all tend to presume our own moral positions are the right ones, but the person who brands themselves an Effective Altruist goes so far as to adopt “being better than other people” as an identity. It is as if one were to label a movement the Better And Smarter People Movement — indeed, when the Effective Altruists were debating how to brand and sell themselves in the early days, the name “Super Hardcore Do-Gooders” was used as a placeholder. (Apparently in jest, but the name they eventually chose means essentially the same thing.)

Related articles:

Effective Altruism Is a Dangerous Cult — Here’s Why. Read it HERE.

Three Lies Longtermists Like To Tell About Their Bizarre Beliefs. Read HERE.

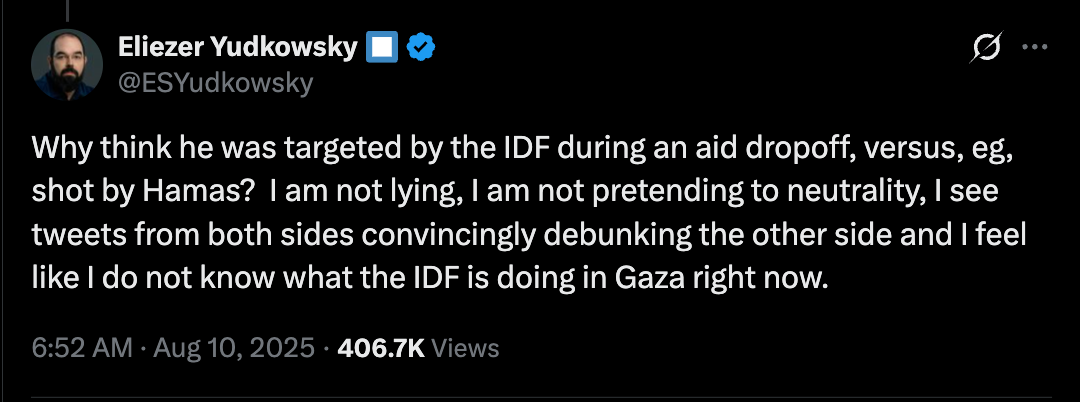

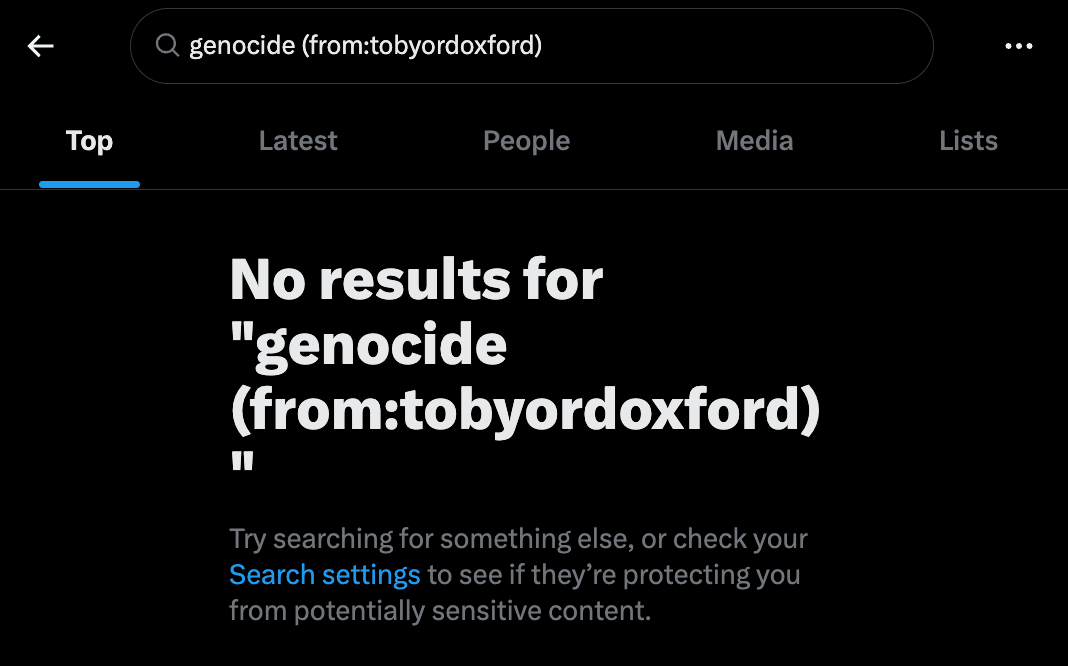

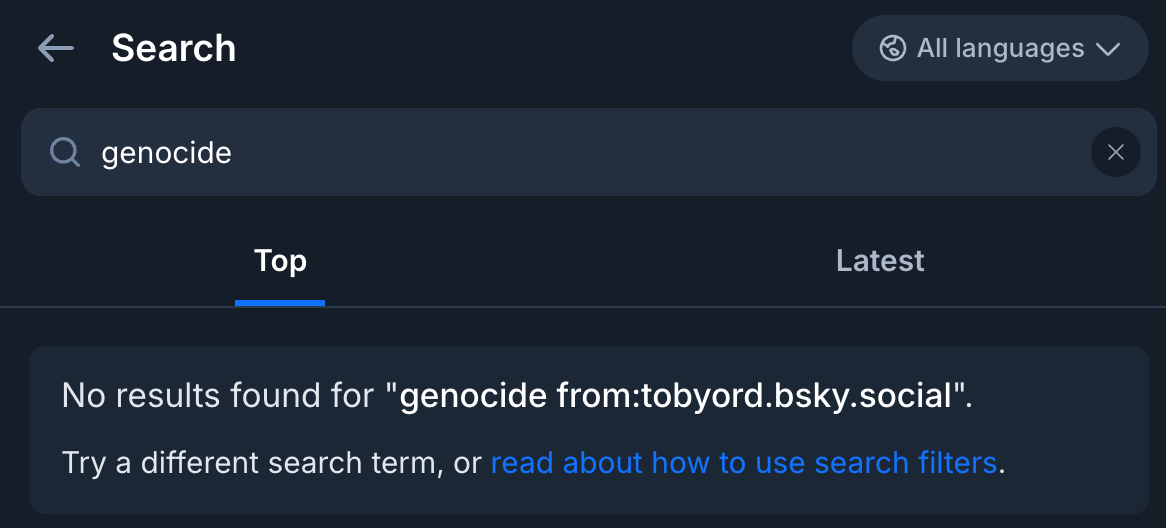

Despite this grandiose self-conception, have you seen any leading EAs saying anything about the ongoing fascist takeover in the US? About the collapse of American democracy? About ICE murdering innocent people in the streets, rounding up immigrants and sending them to concentration camps where detainees are abused and tortured? About the horrific genocide in Gaza, funded by Western countries?[1] About Grok spreading CSAM (child sexual abuse material) on the social media platforms they use? About algorithmic bias, enshittification, or intellectual property theft? (Anthropic, which is run by EA longtermists, just agreed to pay $1.5 billion to settle a lawsuit over its pirating of copyrighted books from online shadow libraries, and leading EAs have said not a word about this illegal behavior, so far as I know.)

People screaming “Let us out” from a US concentration camp. From here.

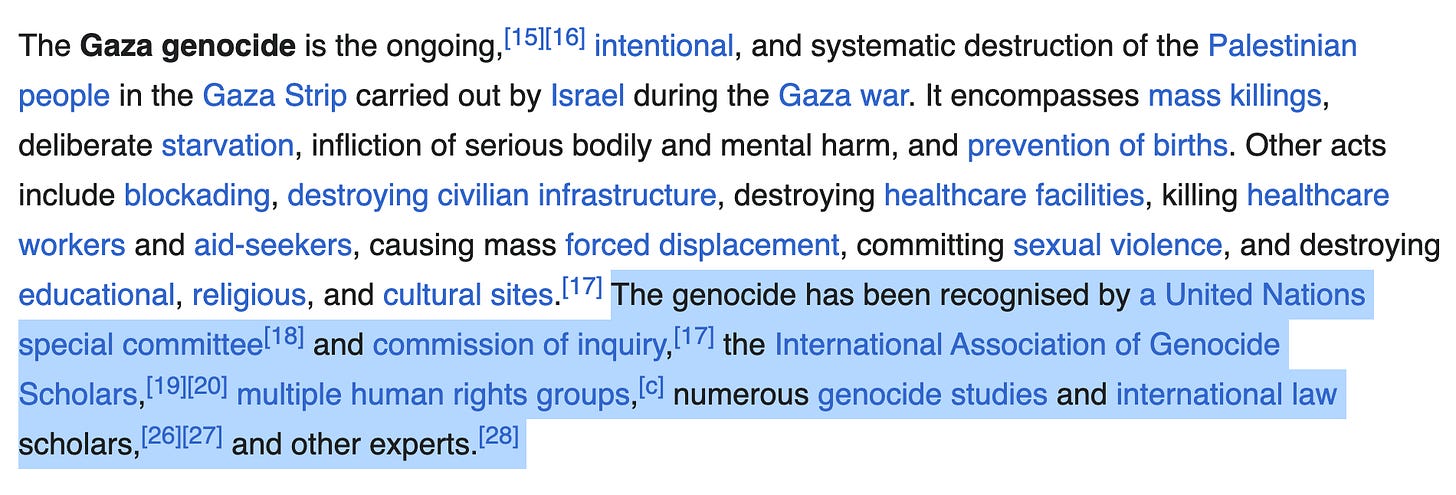

By “leading EAs,” I mean influential figures within the community like William MacAskill, Toby Ord, Nick Bostrom, Eliezer Yudkowsky, and even Nathan Young.[2] There are many EA foot soldiers who aren’t particularly influential within the movement who have spoken out about the issues mentioned above, and that’s commendable. But I’ve seen virtually no examples of people at the top taking just 5 seconds out of their day to express support for, or solidarity with, the folks in Minneapolis marching in the streets against Trump’s Gestapo, or those (like Greta Thunberg) fighting against the genocide in Gaza.

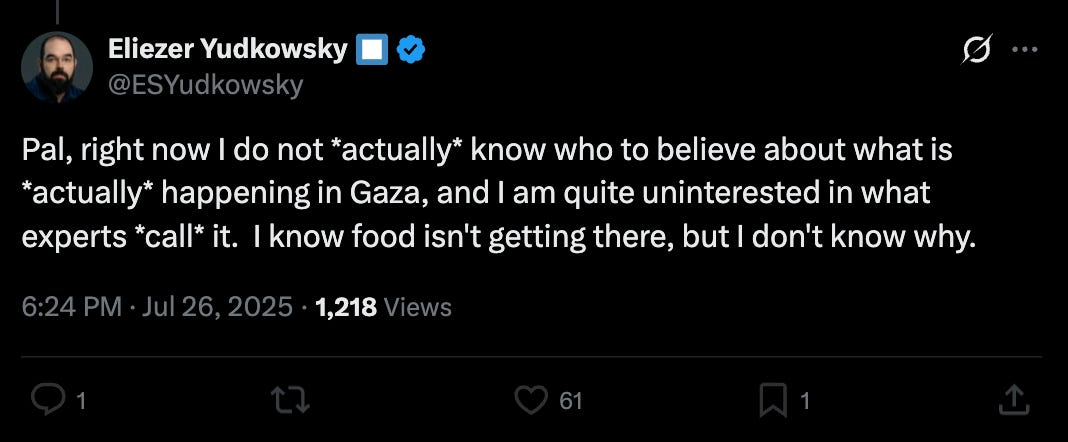

Incidentally, the self-described “genius,” Yudkowsky, has repeatedly questioned whether the Israeli Defense Force (IDF) is responsible for the atrocities and murders that happened in Gaza. He writes:

And:

The EA community idolizes Yudkowsky. MacAskill even calls him a “moral weirdo,” a lofty compliment in the EA community.

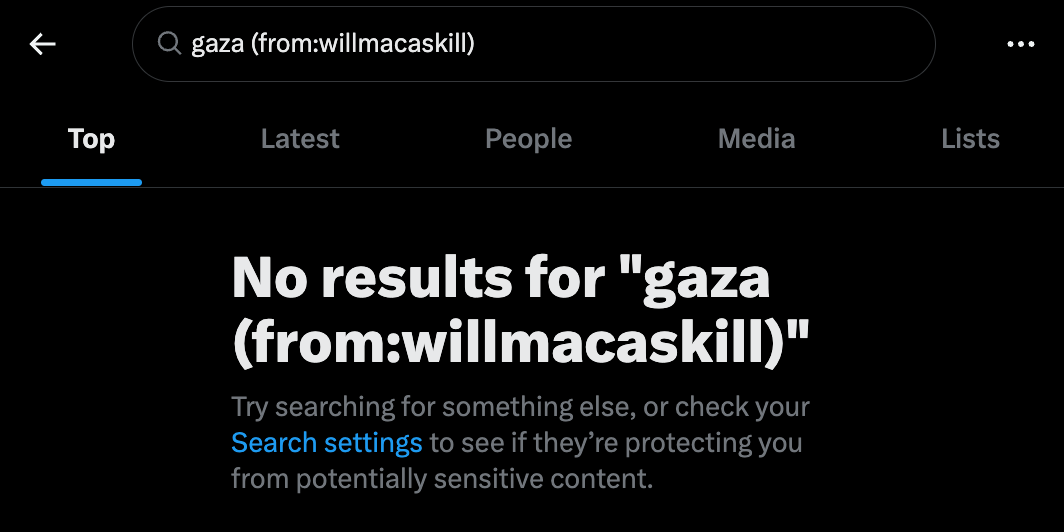

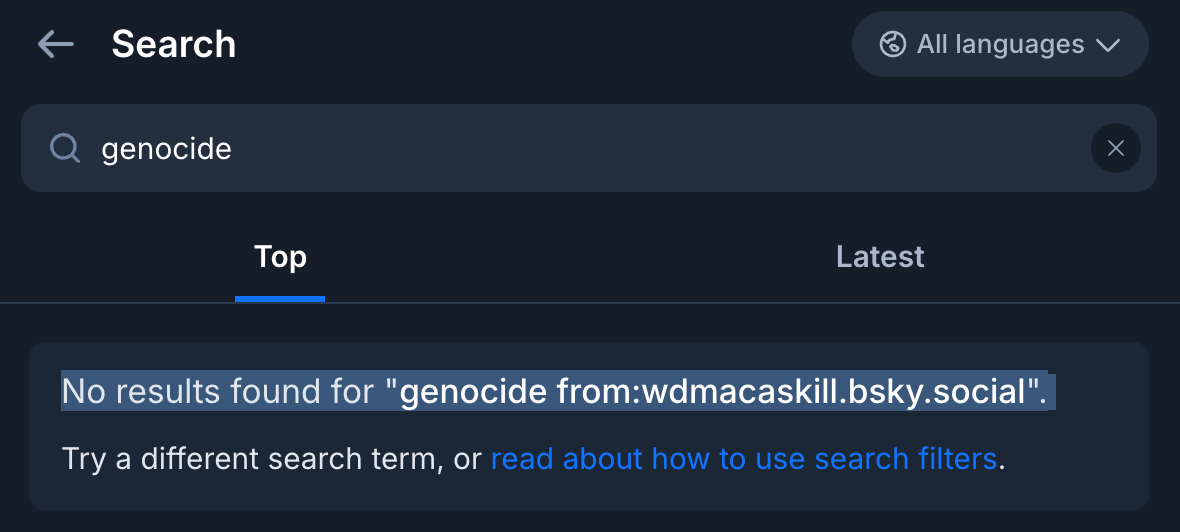

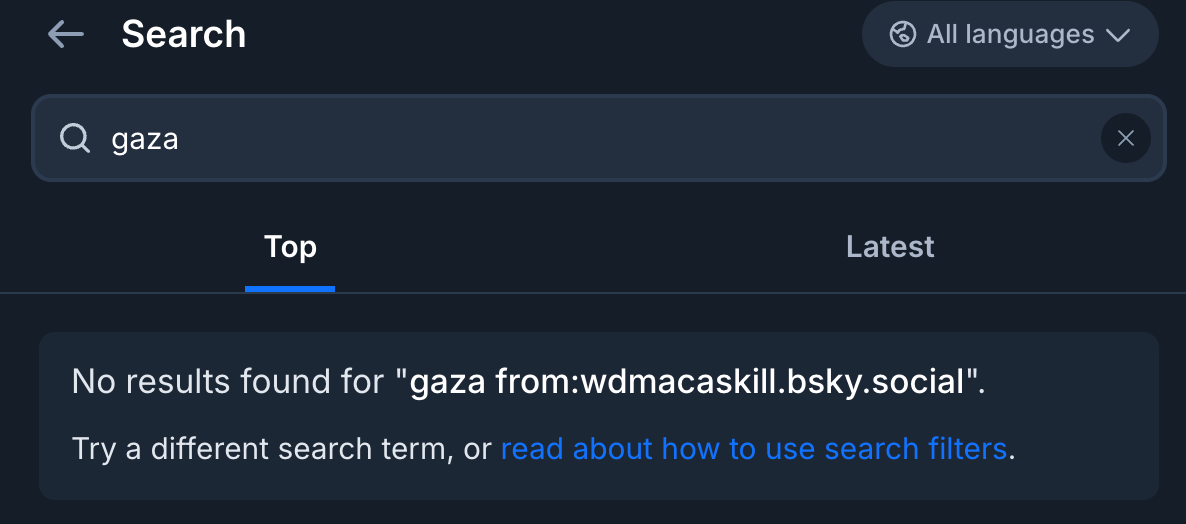

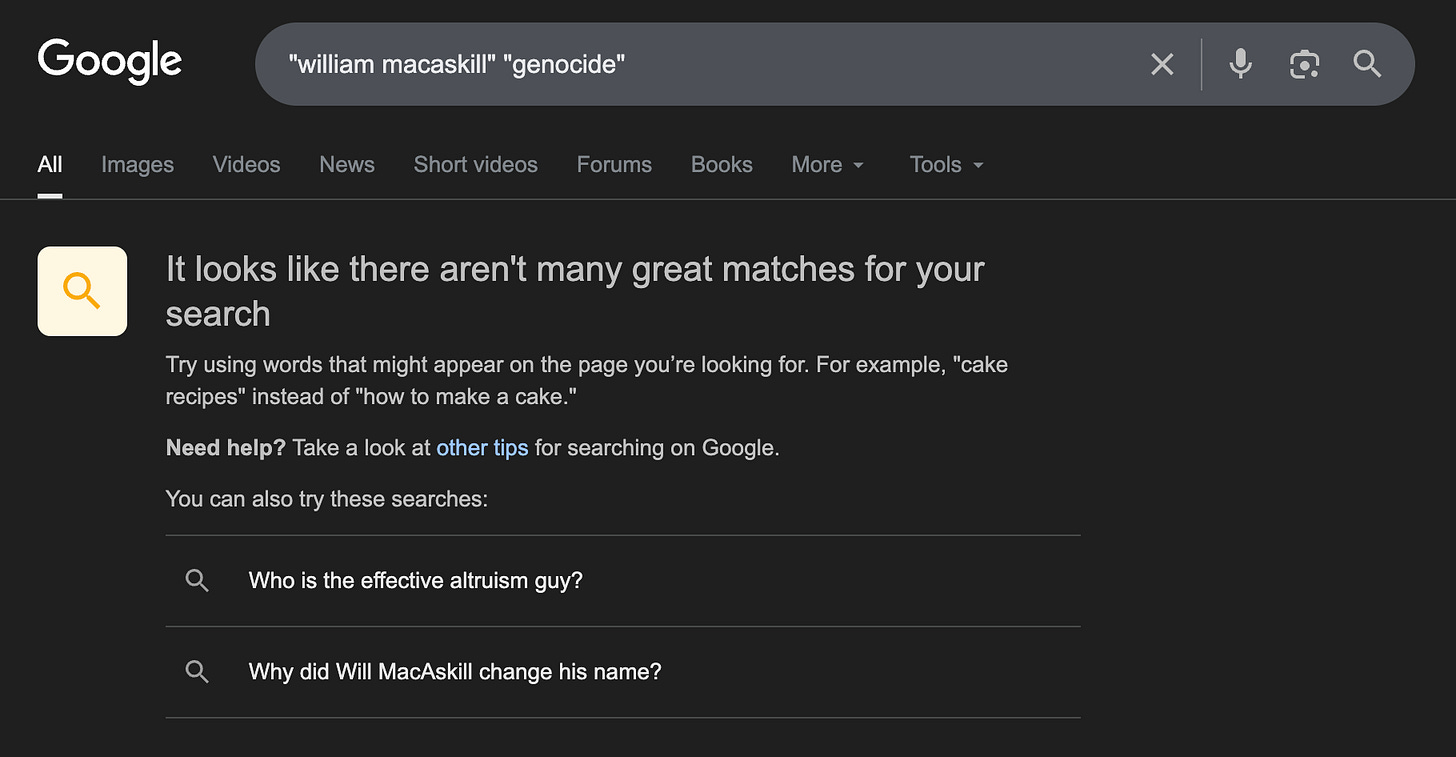

The UN General Assembly describes genocide as a crime so horrendous that it “shocks the conscience of mankind.” Yet MacAskill, Ord, Bostrom, and the others have said — to my knowledge — literally nothing about it publicly. This, from the supposedly most moral people in the world. The very least — and I mean very least — one could do is acknowledge such atrocities and express solidarity with the real altruists on the front lines fighting such evils. It takes 5 seconds to post an expression of support and solidarity on X or Bluesky.

Put another way:

Claim: EAs are exceptionally ethical people.

Prediction: Leading EAs will, therefore, be the loudest voices on the vanguard of speaking out against atrocities like the Gaza genocide, ICE’s brutalization of immigrants, the rise of American fascism, etc.

Observation: Crickets from the EA leadership, as shown below:

A few examples from X, Google, and Bluesky.

Moderates and Fools

EA leaders are the “white moderates” that Martin Luther King Jr. wrote about in his “Letter from Birmingham Jail”:

First, I must confess that over the last few years I have been gravely disappointed with the white moderate. I have almost reached the regrettable conclusion that the Negro’s great stumbling block in the stride toward freedom is not the White Citizen’s Councillor or the Ku Klux Klanner, but the white moderate who is more devoted to “order” than to justice; who prefers a negative peace which is the absence of tension to a positive peace which is the presence of justice; who constantly says “I agree with you in the goal you seek, but I can’t agree with your methods of direct action;” who paternalistically feels he can set the timetable for another man’s freedom; who lives by the myth of time and who constantly advises the Negro to wait until a “more convenient season.”

Such EAs are the fools whom Pastor Martin Niemöller writes about in his famous poem “First They Came”:

First they came for the Communists

And I did not speak out

Because I was not a Communist

Then they came for the Socialists

And I did not speak out

Because I was not a Socialist

Then they came for the trade unionists

And I did not speak out

Because I was not a trade unionist

Then they came for the Jews

And I did not speak out

Because I was not a Jew

Then they came for me

And there was no one left

To speak out for me

If EA had existed during the 1960s Civil Rights movement, I have no doubt that MacAskill, Ord, and Bostrom would never have been seen marching in the streets with MLK. If they had been around during the rise of Nazism in the 1930s, one has to guess that they’d have been just as silent as they are right now. (Why would then be any different from the present?)

Mere Ripples on the Surface of the Great Sea of Life

There are several reasons these people have been deafeningly silent. The first is that everyone mentioned above is a longtermist: what they care about is the far future, not the present. Or, rather, as the longtermist Benjamin Todd writes about the ideology:

This thesis is often confused with the claim that we shouldn’t do anything to help people in the present generation. But longtermism is about what most matters – what we should do about it is a further question. It might turn out that the best way to help those in the future is to improve the lives of people in the present, such as through providing health and education. The difference is that the major reason to help those in the present is to improve the long-term.

In other words, the present matters only insofar as it might affect the far future. As MacAskill and Hilary Greaves write in a lengthy defense of longtermism:

For the purposes of evaluating actions, we can in the first instance often simply ignore all the effects contained in the first 100 (or even 1,000) years, focussing primarily on the further-future effects. Short-run effects act as little more than tie-breakers.

Guess what the genocide in Gaza and Trump’s fascist secret police now terrorizing American citizens and immigrants are? Short-term problems. By focusing on them, one foregrounds the “short-run effects” of one’s actions. Hence, on this view, we should completely ignore such atrocities.

This is consistent with Bostrom’s absurd argument that any risk deemed less than existential (where an existential risk is one that threatens a techno-utopian future world full of posthumans who will have likely rendered our species extinct) should not be one of our top five priorities.[3] He encourages taking a grand cosmic perspective on world affairs. When one does this, non-existential catastrophes may be “a giant massacre for man” yet nothing more than “a small misstep for mankind.” Callous words, but it gets worse.

In another paper, he writes that the worst atrocities and disasters of the 20th century, including WWII (including the Holocaust), the AIDS pandemic, and so on, are utterly insignificant from a longtermist point of view. Quoting him:

Tragic as such events are to the people immediately affected, in the big picture of things – from the perspective of humankind as a whole – even the worst of these catastrophes are mere ripples on the surface of the great sea of life. They haven’t significantly affected the total amount of human suffering or happiness or determined the long-term fate of our species.

This is the first reason MacAskill, Ord, Bostrom, and the others have said nothing about the genocides and atrocities happening right now. As longtermists, they don’t think these events matter in the grand scheme of things. They may be very bad in absolute terms, but relative to the loss of “utopia” caused by a genuinely existential catastrophe, they’re nothing more than molecules in drops in the ocean.

Leading Figures in EA Don’t Give a Sh*t about Social Justice

It’s precisely this reasoning that leads many leading EAs to completely dismiss social justice issues. After I stumbled upon a racist email authored by Bostrom, in which he argues that “Blacks are more stupid than whites” and then writes the N-word, he suggested that social justice advocates are “a swarm of bloodthirsty mosquitos” that distract from matters of real importance.

When Yudkowsky, a long-time colleague of Bostrom’s, was asked about the harm and social injustices caused by biased algorithms, he said:

If they would leave the people trying to prevent the utter extinction of all humanity alone I should have no more objection to them than to the people making sure the bridges stay up. If the people making the bridges stay up were like, “How dare anyone talk about this wacky notion of AI extinguishing humanity. It is taking resources away that could be used to make the bridges stay up,” I’d be like “What the hell are you people on?” Better all the bridges should fall down than that humanity should go utterly extinct.

That from a guy who also once said:

If sacrificing all of humanity were the only way, and a reliable way, to get … god-like things out there — superintelligences who still care about each other, who are still aware of the world and having fun — I would ultimately make that trade-off.

Many longtermists are, in fact, pro-extinctionists who fantasize about a future in which posthumans, perhaps in the form of AGI or uploaded minds, sideline, marginalize, disempower, and ultimately replace our species. Don’t be fooled by their chicanery — the lie that they care about avoiding “human extinction.” They’re actually fighting for a type of human extinction, which I’ve called “terminal extinction.” I explain this crucial point in articles such as this and this. (An academic treatment can be found here.)

This is why these people don’t much care about immigrants being brutalized. In fact, xenophobia and racism, often bound up with eugenics and race science, are all over the EA longtermist movement. Heck, one of the research associates at Bostrom’s Future of Humanity Institute, alongside MacAskill and Ord, literally once argued that

“the main problem” with the Holocaust was that there weren’t enough Nazis! After all, if there had been six trillion Nazis willing to pay $1 each to make the Holocaust happen, and a mere six million Jews willing to pay $100,000 each to prevent it, the Holocaust would have generated $5.4 trillion worth of consumers surplus.

I don’t recall a single leading figure in the EA-longtermist community uttering a peep about this — or about the fact that the author, Robin Hanson (another guy revered by many EAs), is a Men’s Rights advocate who once published a blog post titled “Gentle, Silent Rape,” which I promise you is just as appalling as you might imagine.

Ethics as a Branch of Economics

Yet another reason MacAskill and the others can’t bring themselves to do the bare minimum of speaking up when it matters most is their underlying “ethical” view: utilitarianism. This view motivates many of the canonical arguments for longtermism. It claims that we have a moral obligation to maximize the total amount of “value” in the universe across space and time.

Incidentally, totalist utilitarianism has straightforward pro-extinctionist implications: if there are “posthuman” beings who could realize more “value” than us humans, we should replace ourselves with them as quickly as possible. Failing to do so would be morally wrong because it would mean less total “value” in the world.

Being influenced by utilitarianism, EA thus reduces the domain of ethics to a mere branch of economics. Sentient beings like you and me are fungible value-containers, and value is taken to be a quantifiable entity that, as such, can be represented by numbers in expected value calculations. Morality is nothing more than number-crunching, which is why moral concepts like justice and integrity have virtually no role in EA.

Consequently, leading EAs don’t actually care about people. They care about this abstract thing called “value,” where sentient beings (e.g., people) are just the substrates or containers of such “value.” We thus matter in an instrumental sense, which is to say that you and I have no moral worth in and of ourselves — that is, as Kantian ends rather than mere means to an end (maximizing “value”).

This again accounts for why, over and over again, EAs demonstrate a conspicuous lack of concern for social justice issues. It’s much easier to care about social justice if you care about people as ends rather than just means. It’s much easier to care about a genocide if you aren’t engaged in some dumb “moral mathematics,” according to which the “loss” of future digital people living in giant computer simulations would be much worse than even the most violent and horrific genocide, so long as that genocide doesn’t pose any “existential risks.” One can, as I have, sloganize the longtermist worldview as follows: Won’t someone think of all the digital unborn?

To dwell on this issue for a moment, utilitarians — and hence longtermists — would say that the death of someone who currently exists is morally equivalent to the non-birth of someone who hasn’t yet been born, all other things being equal (e.g., the pleasure they have or would have experienced). That is an insane assertion.

It amounts to the view that, quoting Jonathan Bennett, “as well as deploring the situation where a person lacks happiness, these philosophers also deplore the situation where some happiness lacks a person.” Since there could be almost infinite “happiness” in the far future — if only there exist digital space brains to realize this “happiness” — the future matters almost infinitely more than the present.

In his 2022 book, MacAskill bemoans “the tyranny of the present over the future,” because these hypothetical future space brains have no control over what we do today — e.g., whether or not we go extinct, or build an AGI God that ushers in a utopia for them, etc. But he completely ignores the other implication of longtermism and its utilitarian foundations, namely, “a dictatorship of future generations over the present one,” in the words of political scientist Joseph Nye. One manifestation of this dictatorship is the moral indifference that leading longtermists show toward social justice issues, the Gaza genocide, and ICE terrorizing the American public.

Longtermism is a complete nonstarter, in my view. In fact, most of the articles/books written in defense of longtermism could easily be reinterpreted as reductio ad absurdum arguments that demonstrate the extreme implausibility of this position.

Just consider MacAskill’s outrageous suggestion that our systematic obliteration of the biosphere may be net positive, since the fewer wild animals there are, the less wild-animal suffering there will be — which is clearly a good thing because, he tells us, most animal lives are worthless, i.e., “worse than nothing on average.” By ruthlessly killing off our earthly companions, the world becomes better through omission.

Critical Mass and Social Tipping Points

One final argument that EA leaders might advance in defense of their silence concerns the so-called “ITN framework.” This stands for “importance, tractability, and neglectedness.” The goal of EA is to do the most “good” at the margins. If a lot of people are working on an important and tractable problem, then adding one more person to the crowd won’t do much. In contrast, if there’s an important and tractable problem that few people are working on, one could do a lot more.

What this catastrophically misses is the fact that, in many cases, positive change in the world requires a critical mass of people working on it. There are social tipping points that must be crossed for the problem to be solved. If 100,000 people marching in the street is necessary to expel the American Gestapo — ICE — from Minneapolis, then one should be more rather than less inclined to join the protest if 80,000 people are already blowing whistles and waving their signs. EAs would say this is wrong: “Look how many people are out there right now! The problem is not neglected! My talents and resources are thus better spent doing something else.”

Many problems in the world are like this: mitigating climate change, stopping fascism, addressing police brutality, etc. Without a critical mass of activism, meaningful change will remain out of reach. Hence, the ITN framework is philosophically flawed and may be actively harmful, as it encourages folks who might otherwise join forces to stay at home instead.

EA Has Probably Made the World Worse

Leading EAs will no doubt claim that marching in the streets to preserve democracy and stand up for voiceless immigrants is a waste of resources — according to their flawed “moral mathematics.” The movement has, they insist, saved 150,000 lives so far, though we don’t see most of these people because they’re out of sight in the Global South.

But has EA really made the world better? As a former OpenAI employee who escaped the EA cult wrote in 2023:

I think helping people is good, but all the “good EAs” I know were obviously altruistic angels even as kids. EA did nothing for them. They were going to try and help the world anyway if it never existed. I know a few cases where the existence of EA helped, dozens where it destroyed (slightly edited for clarity).

EAs love to talk about “counterfactuals,” as MacAskill does when he argues that you should be willing to work for petrochemical companies to maximize your charitable donations. (Lol.) But they never consider the crucial counterfactual: “What if EA had never existed?” If EA had never existed, the same “good EAs” would still have donated most or all of their disposable incomes.

However, on the flip side, Bankman-Fried wouldn’t have defrauded millions of people. Non-EA animal activists wouldn’t have been demonized as “ineffective.” Grassroots organizations would have had a much better shot at getting funded. And so on.

There’s an entire book dedicated to examining the very real and tangible harms that EA has caused, titled The Good It Promises, the Harm It Does. Worth a read.

Conclusion

EAs like to see themselves as morally superior, yet the movement’s most influential figures won’t even speak out about the most morally salient issues of our time. They warn about the possibility of omnicide — the death of all humans, thus foreclosing our “glorious transhumanist future,” quoting Yudkowsky — yet they can’t bring themselves to publicly acknowledge the first genocide live-streamed in human history (Gaza). That is not altruism. It’s moral turpitude and cowardice.

As always:

Thanks for reading and I’ll see you on the other side!

Thanks to Remmelt Ellen and David Gerard for insightful comments on an earlier draft.

[1] One person on the Effective Altruism Forum was so disappointed in EA’s response to the genocide that they wrote:

It is heartbreaking to see the ongoing atrocities and humanitarian crisis unfold in Gaza. I am surprised (at least somewhat) to not hear many EAs discuss this - online or in in-person conversations.

I’m interested in peoples’ views about why there might be a lack of discussion in this community, and what people should be doing to support Gazans from afar.

[2] I had included Scott Alexander’s name in the original post. However, someone with the username “comex” left the comment copy-pasted below, which shows that Alexander has written about some of these issues. Hence, I removed his name on January 29, 2026. Here’s the comment, with thanks to “comex” for apprising me of this:

I read Scott Alexander’s Substack, and he has in fact written a long post criticizing Israel’s conduct in Gaza. While he hasn’t written about ICE, he wrote multiple posts last year criticizing the cutting of USAID, wrote a post defending the notion that people like Trump could be a threat to democracy (“Defining Defending Democracy: Contra The Election Winner Argument”), and, in another post (“Links For April 2025”), criticized Trump “sending innocent people to horrible Salvadorean prisons” and described his apparent refusal to comply with a Supreme Court order as “terrifying”.

However, someone else shared this shocking tweet from Alexander, in which he argues that “Hamas bears most responsibility for the Gaza deaths.” Wild.

[3] The top four priorities are to mitigate existential risks, and the fifth priority is to colonize space as quickly as possible.


Another day, another book recommendation from Emile.

In all seriousness, though, this article really hits after reading the majority of "More Everything Forever", in which Adam Becker covers the history of EA: the people and theories that influenced it, the people who founded it, how EA is supposed to work along with its rationales, and of course actual interviews with Toby Ord and Anders Sandberg.

Honestly, all I can really do in response to everything Emile writes is ask a question: How does one EVER think that being an EA, or an adherent of any TESCREAL ideology, is a morally good way to live? And when I say live, I mean it. Nothing about what these people do suggests that they live good lives!!! I mean, sure, thinking about what is happening in America or Gaza doesn't exactly fill one with thoughts of cotton candy and jelly beans, but the thought processes of EAs are something else: they GENUINELY believe that if they don't do something mathematically perfect, then humanity dies out. And because of that thinking, they not only live lives of cowardice (not talking about Gaza or racism or the general cult behavior in their movement), they also live lives of what I'd call chronic anxiety and depression, where nothing will ever be enough to usher in the post-human future of 10 to 50-whatever digital people.

All I can say, Emile, is that if there is a God, then I must thank him for you no longer being a TESCREAList.