My Interview with Jacobin. Putin and Xi Talk Immortality. And Yudkowsky Says He’s Never Knowingly Had Relations with Minors.

A "fun" mishmash of news you might have missed! (2,300 words.)

1. Jacobin Interview

(1) Hello everyone! I’m excited to share an interview I recently did with Doug Henwood for Jacobin. I was asked about the acronym “TESCREAL,” which I coined during a collaboration with Timnit Gebru, as well as an ominous aspect of the TESCREAL movement that I call “Silicon Valley pro-extinctionism.” Here are a few excerpts, though I’d recommend reading the interview in full, which was nicely edited to make me sound more cogent than I actually am. :-) Check out the original if only to give Jacobin a few extra clicks!

DOUG HENWOOD

And [Elon Musk’s] “colonizing Mars” thing is totally part of this too.

ÉMILE TORRES

Absolutely. Yes. Musk sees himself as a messianic figure who is going to play a pivotal role not just in human history but in cosmic history. Because if he is the one who ushers in the age of AGI with his company xAI, then that’s going to be a monumental shift. It would, in his view, introduce an entirely new capability for us to then realize the techno-utopian vision of the TESCREAL worldview.

SpaceX factors into this too. For Musk, Mars is the stepping stone to the rest of the galaxy, which is the stepping stone to the rest of the universe. If he is the one to get us to Mars and who enables us to establish Earth-independent colonies there, then he will have launched us in the direction of realizing this utopian world, maybe more than anyone else on Earth right now.

All of these people also embody the same kind of discriminatory attitudes that animated the eugenics movement of the twentieth century. Elon Musk has warned about global population decline, but if you look closely at some of his tweets, it seems that he’s more explicitly worried about white populations declining. Several months ago, someone tweeted that the white demographic globally is about eight percent of the world population, and Musk’s reply to this was something to the effect of “and declining fast.” There is very much a racial component to his anxiety about not just immigration, but population decline. Musk basically thinks that white people are superior, and hence it would be very bad for the future of humanity and post-humanity if the white population were to decline.

DOUG HENWOOD

In his long interview with Ross Douthat of the New York Times in June, Peter Thiel was asked by Douthat if he wanted the human race to endure. And Thiel hemmed and hawed before reluctantly saying yes, but it didn’t seem like he really believed it. And then he brought up transhumanism in the next sentence or two.

What is it these people want? Are they indifferent to or even welcoming of human extinction?

ÉMILE TORRES

There are two essential things for people to understand about what’s happening in Silicon Valley. One is that the TESCREAL worldview is ubiquitous. It’s the water these people swim in and the air they breathe. So you just cannot understand what’s going on in Silicon Valley, especially with respect to the race to build AGI, without some understanding of transhumanism, longtermism, and all of these TESCREAL ideologies.

The second really important thing for people to understand is that a key component of the utopian vision at the heart of TESCREALism is a pro-extinctionist stance. Utopia looks like a world in which post-humans, not humans, are the ones who rule the world. When Peter Thiel hesitated, he was just channeling this pro-extinctionist component of the TESCREAL worldview. It’s not humanity, it’s post-humanity that is going to ultimately go out and colonize space, that’s going to run the show.

Peter Thiel has a somewhat unique view, held by a minority within Silicon Valley, according to which the post-human beings that should eventually replace us ought to be extensions of our biological bodies. So he’s explicitly said that he wants to live forever, but he also wants to keep his body. He doesn’t want to upload his mind to a computer to become some digital being.

This contrasts with a slightly different version of pro-extinctionism that a lot more people in Silicon Valley hold, according to which the future is digital. You could distinguish between Peter Thiel’s biological transhumanism and what might be called digital eugenics. Digital eugenics is a version of pro-extinctionism that says those future post-humans that replace us should all be digital in nature.

Either they could be uploaded … human minds or they could be entirely separate, autonomous, distinct entities like ChatGPT is. So you could imagine ChatGPT 10 or whatever, achieving the level of AGI and then taking over. In this sense, AGI is not an extension of us — it’s something completely separate. We didn’t become it, we just created it. Whereas Peter Thiel has this vision of post-humanity as something that we become rather than create. The key point, though, is that they’re all pro-extinctionist.

…

Their vision of the future is not inclusive. If it were an inclusive future, it would also include humans. It doesn’t. It’s a future for post-humans.

It is also deeply elitist and extremely undemocratic. Right now, with their billions and trillions of dollars, they are trying to create a new world run by post-humans without ever having inquired about the opinions and preferences of the rest of humanity. They’re doing this without our consent, and they don’t really care one bit about what the rest of us have to say.

They believe in their vision, and they’re going to try to bring it about regardless. They truly believe that this is the right thing to be doing. There is zero input from the rest of humanity about what our collective human future ought to look like. You could describe this as profoundly coercive.

For more on Silicon Valley pro-extinctionism, see my newsletter series (which I’m turning into an academic paper), as well as this forthcoming article of mine in The Journal of Value Inquiry.

2. Update on Chomsky and Epstein

I was pleasantly surprised to see that my last article, on Noam Chomsky’s friendship with Jeffrey Epstein, received over 230 comments (and counting!). It seems that many folks share my sense of profound disappointment in Chomsky for considering Epstein a “highly valued friend” (his words).

I mentioned in the article that Chomsky had to have known that Epstein was still engaging in morally reprehensible behavior. In the video below, Miami Herald journalist Julie Brown, who’s played a critical role in bringing to light accusations against Epstein, says that Epstein didn’t clearly separate the “business” part of his life from the “personal” part. This doesn’t imply that Chomsky was aware of what Epstein was up to (despite Chomsky telling Epstein that he hoped to make it to the Caribbean, which is a bit suspicious), but it is yet another crack in the dam. My guess is that the situation will get worse for Chomsky as more information comes to light. Even if it doesn’t get worse, it’s still very bad as it stands, and many of us have lost a great deal of respect for the guy.

As it happens, Brown just published an article in which she writes:

The Herald’s findings reveal that Chomsky continued to correspond with him at least until the summer of 2019, even after the Herald’s series led to widespread outcry and the Justice Department publicly announced a fresh probe.

Epstein claimed in his messages that he was flying to meet Chomsky on May 12, 2019 — less than two months before federal prosecutors in the Southern District of New York brought new sex charges against him. It isn’t clear if they actually met then.

Yikes. As Dave Troy noted on X:

It wasn’t just image rehab. Chomsky was working with right wing libertarians for 50 years, and he was collaborating with Epstein and Bannon on a documentary project, text messages show.

In fact, Brown writes that “Epstein showed no loyalty to either political side,” which is confirmed by emails between Epstein and Steve Bannon. You can search all of the emails released by House Democrats at this URL: https://www.docetl.org/showcase/epstein-email-explorer.

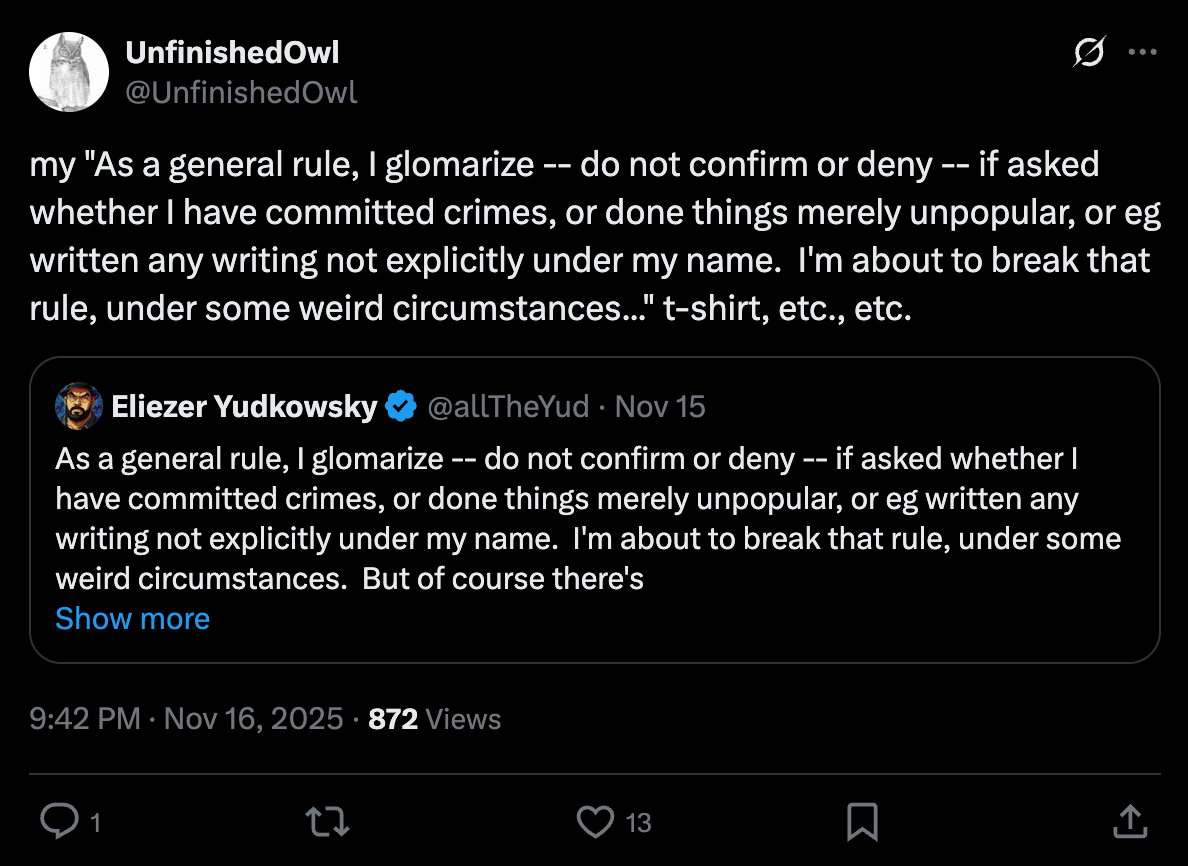

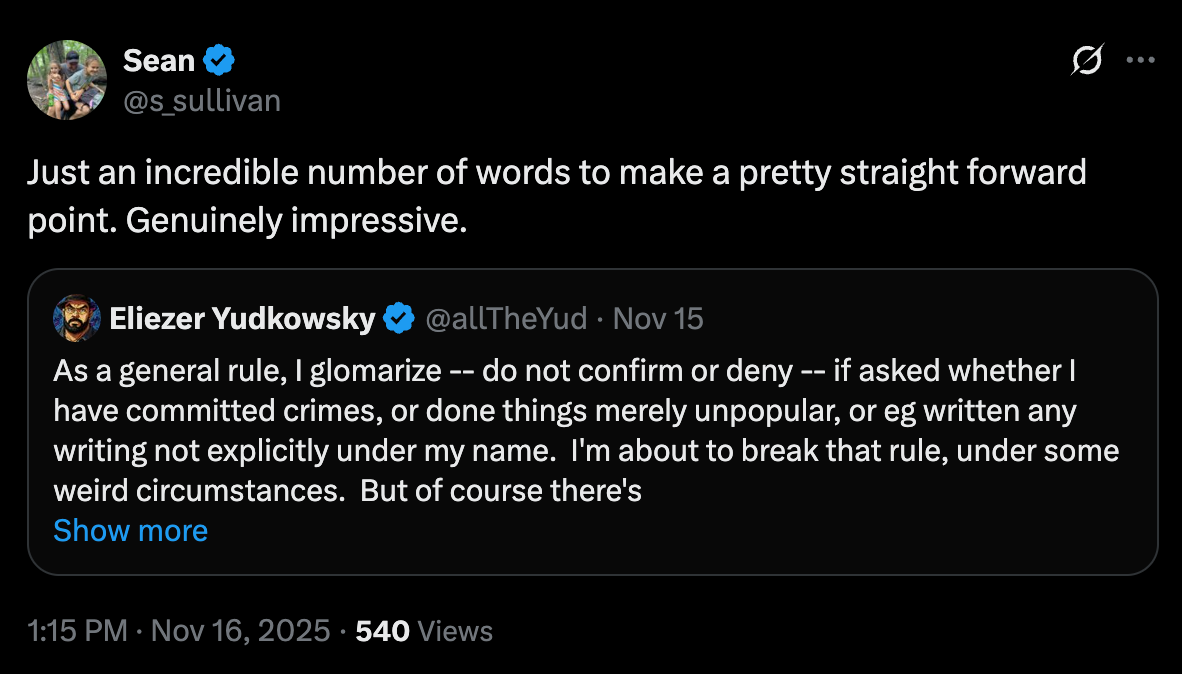

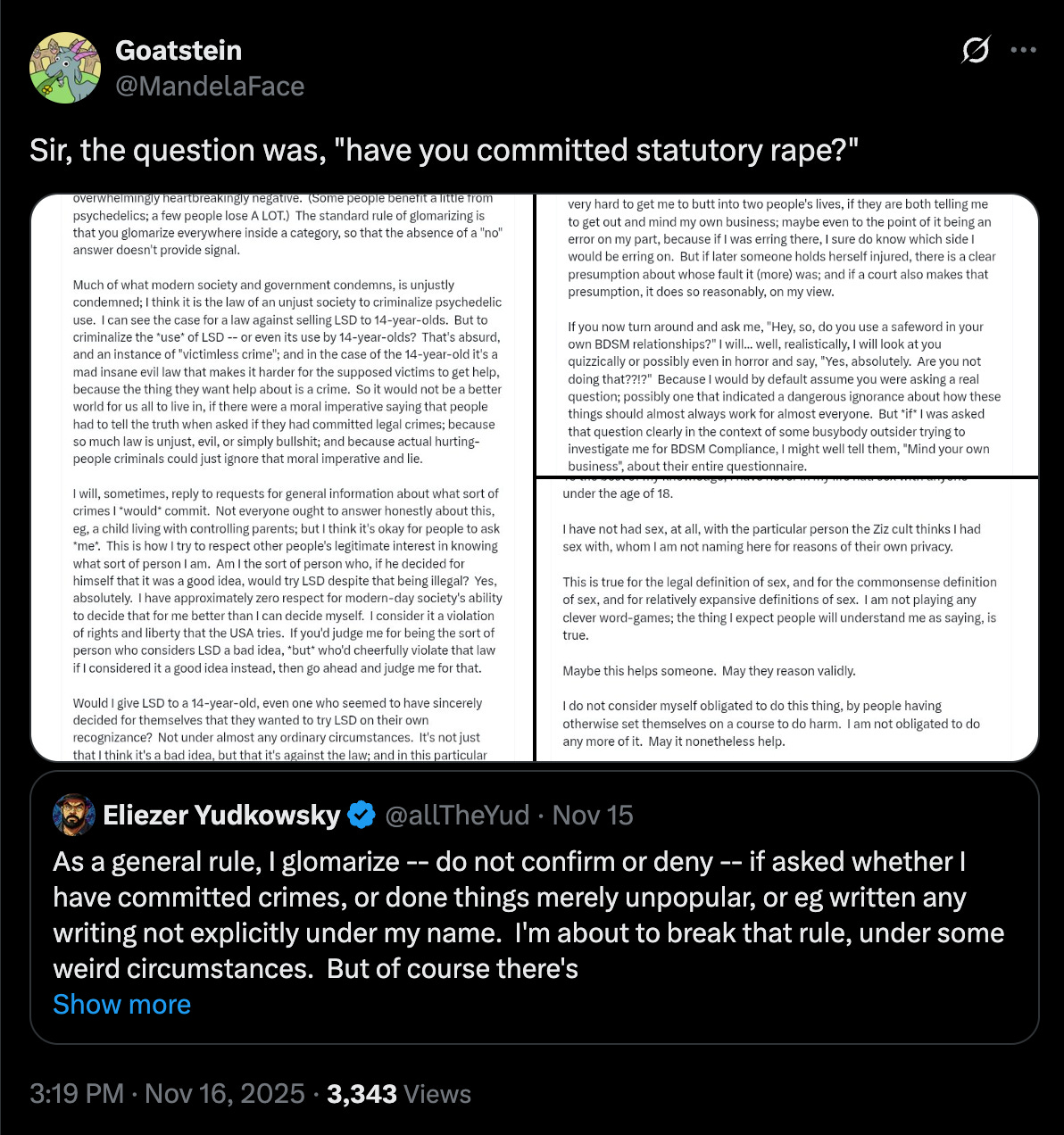

3. Yudkowsky on Statutory Rape Allegations

In a rambling post on X that digressed into bizarre topics like why it shouldn’t be a crime for 14-year-olds to take LSD, Eliezer Yudkowsky denied accusations from the Zizians, a Rationalist subcult, that he engaged in statutory rape. (For a detailed 3-part series on the Zizians, see my podcast with Kate Willett here.)

Astonishingly, Yudkowsky actually wrote: “To the best of my knowledge, I have never in my life had sex with anyone under the age of 18.” I mean, if you have to say it, something’s gone terribly wrong! I immediately wondered whether there is an article coming out soon about Yudkowsky having relations with minors, and he was trying to get ahead of the news.

Two brief asides about this: First, it’s worth noting that Yudkowsky founded the Machine Intelligence Research Institute (MIRI), which received at least one donation from [drum roll] Jeffrey Epstein. This doesn’t make MIRI guilty, of course. But it does reveal the vast tangle of nefarious figures in this space. Indeed, Epstein himself was fascinated by transhumanism.

Second, Yudkowsky writes in the post, “I have never read, and plan to never read, Ted Kaczynski’s manifesto.” Here’s a fun little homework assignment for you: take a look at Kaczynski’s essay “Ship of Fools.” Stop yourself at random points while reading and ask: “How is this any different, in any substantive way, from Yudkowsky’s view? If I were to present this essay to someone without revealing the author, and then ask them whether it was authored by Yudkowsky or Kaczynski, would they be able to give the right answer?”

To my eye, at least, it’s remarkable how closely aligned Kaczynski and Yudkowsky are. Kaczynski even uses ridiculous fictional scenarios to make his points. Their writing is very similar. The only important difference between the two is that Yudkowsky wants to build superintelligence at some point, whereas Kaczynski advocated a permanent ban on such technologies. Perhaps I’ll elaborate on this in a future newsletter piece.

Back to Yudkowsky’s long post on X, which received many amusing replies. Here are a few:

4. Two Dictators Talk About Immortality

Vladimir Putin and Xi Jinping were caught on a hot mic chatting about the possibility of becoming immortal through organ transplants. Here’s what they said, as translated by the BBC:

“In the past, it used to be rare for someone to be older than 70 and these days they say that at 70 one’s still a child,” Xi’s interpreter could be heard saying in Russian.

An inaudible passage from Putin follows. His Mandarin interpreter then added: “With the development of biotechnology, human organs can be continuously transplanted, and people can live younger and younger, and even achieve immortality.”

Xi’s interpreter then said: “Predictions are, this century, there’s a chance of also living to 150 [years old].”

This reminds me of a clip of Jared Kushner from 2022 in which he said: “My generation is, hopefully with the advances in science, the first generation that’s going to live forever or the last generation that’s going to die.” Here’s the clip:

Just a reminder that if we do develop immortality technologies, that means you’ll have to put up with the absolute worst people living alongside you until the heat death of the universe. Sure, perhaps Kushner and the others will upload their minds to a computer and move to another galaxy. Or maybe — because they’re power-hungry sociopaths — they’ll try to establish some kind of cosmic dictatorship that no one can escape. More likely, they’ll do everything they can to prevent the masses from accessing life-extension therapies, so they’re the only ones who get to live forever.

Note also that, as I’ve mentioned before, immortality opens up the possibility of, e.g., political dissidents being tortured for literally millions or billions of years. Constant torture, every day, and if you die they’ll just use advanced biomedical tech to revive you and keep the torture going. The posthuman era would open up a vast realm of utterly terrifying possibilities.

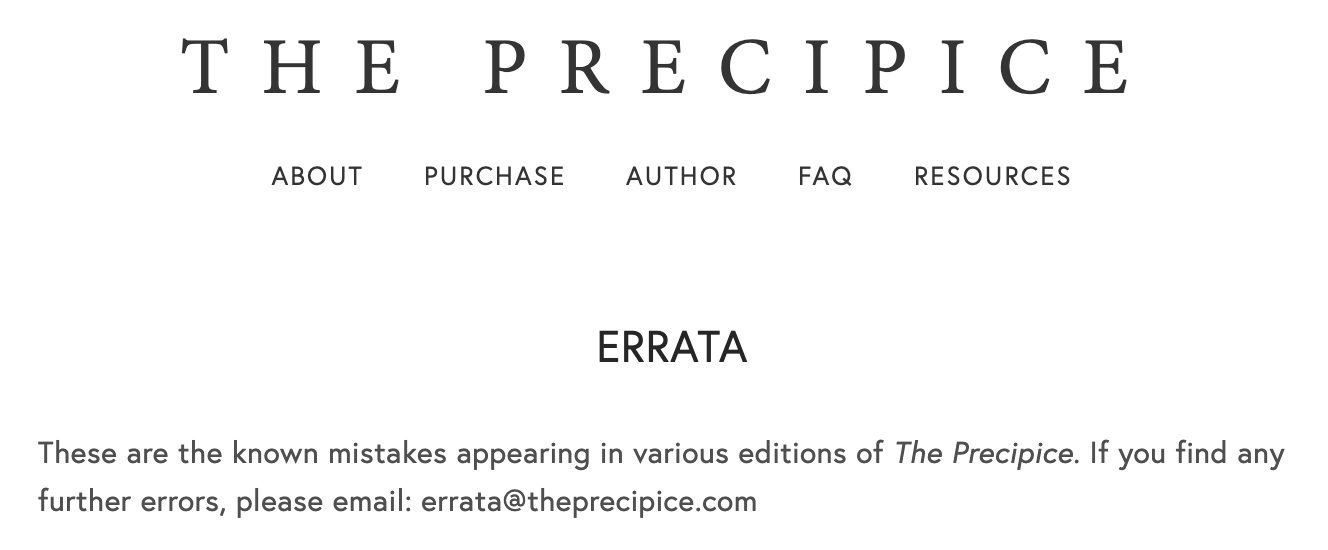

5. Karen Hao Made a Mistake (and Is Rectifying the Situation!)

Lastly, it turns out that journalist Karen Hao made a mistake in her otherwise fantastic book Empire of AI. She misinterpreted some units, resulting in a claim about AI’s water use that’s off by a factor of 1,000. That’s not a trivial mistake, but mistakes happen. There isn’t a single book in the library that doesn’t contain at least one error, despite authors (like myself, and Hao) working incredibly hard to avoid even a single mistake sneaking into the manuscript.

For years, my view has been that what matters just as much as not making a mistake in the first place is how one responds to the error being pointed out. And Hao has handled the situation like a pro. She immediately acknowledged the mistake, reached out to her sources for clarification, and is talking to her editors about how to edit the text in her book. That’s everything one could ask for from a journalist or academic.

As it happens, the guy who noticed the error, Andy Masley, is the director of Effective Altruism DC. Consequently, a ton of EAs have been tweeting about this, often with a degree of gleeful celebration that Hao got something wrong. But it’s worth remembering that a cofounder of EA, Toby Ord, who’s lionized by everyone in the community, published a book in 2020 that contains numerous errors. Ord even put together a webpage (which is difficult to find) cataloguing them, which you can browse here.

This isn’t a “whataboutism” rebuttal. I’m simply pointing out that (a) mistakes happen, and authors with moral and intellectual integrity will openly admit such mistakes and then take meaningful steps to rectify them, and (b) the EA community’s outrage over textual errors is selective, as no one (in the community) thinks less of Ord for having published a book riddled — if that’s not too strong a word — with errors.

Sigh.

Am I missing something, though? What do you think about the issues discussed above? As always:

Thanks for reading and I’ll see you on the other side!
