TESCREALists Keep Lying About Human Extinction

No group of people is screaming louder than the TESCREALists about the risk of human extinction. Yet many of them simultaneously ENDORSE the extinction of our species! (3,200 words)

Acknowledgments: Thanks so much to Dr. Alexander Thomas for providing extensive, insightful comments on an earlier draft. This does not imply that Thomas agrees with everything in this post. I would highly recommend his recent open-access book with Bristol University Press, titled The Politics and Ethics of Transhumanism: Techno-Human Evolution and Advanced Capitalism. It’s one of the best critiques of transhumanism I’ve ever read!

A few days ago, some folks on social media shared the clip below of David Krueger, a professor of machine learning at the University of Montreal, speaking to a Canadian House of Commons committee. He says (these sentences are patched together from different parts of his longer presentation):

The world is not yet taking the steps that are needed to mitigate the risk that AI will lead to human extinction. We don’t know how to build superintelligent AI safely. The plan is basically to roll the dice. I think we’re about 5 years away from superintelligent AI. We need to course-correct immediately and work to prevent the development of superintelligent AI.

Krueger appears to be part of the TESCREAL movement. He has, for example, profiles on both the Effective Altruism Forum and the Rationalist community blogging website LessWrong. Effective Altruism and Rationalism are the “EA” and “R” in “TESCREAL.”

An overview of the TESCREAL concept can be found in my article for Truthdig here. For an academic treatment, see my new article in the Oxford Research Encyclopedia here, or my 2024 paper with Timnit Gebru in First Monday here.

Two Distinctions and Three Views

I find statements like Krueger’s to be incredibly frustrating. When TESCREALists talk about “human extinction,” they’re surreptitiously using that term differently than the way you and I use it. To understand this, it’s important to distinguish between what I call terminal and final extinction:

The former would occur if our species were to disappear entirely and forever, full stop. The latter would occur if our species were to disappear entirely and forever without leaving behind any posthuman successors to take our place.

Final extinction entails terminal extinction, but terminal extinction doesn’t entail final extinction: we could undergo terminal extinction while avoiding final extinction if we leave behind successors.

TESCREALists are immensely concerned about final extinction, but most don’t care one bit about terminal extinction itself. Indeed, many explicitly want terminal extinction to happen once posthumanity arrives, meaning that our species dies out through replacement by a new posthuman species.

Here we can distinguish between three possible views that TESCREALists could hold about the future relationship between humans and posthumans:

Coexistence view: the claim that our species should coexist alongside posthumanity once it arrives.

Extinction neutralism: the claim that it doesn’t matter either way whether our species survives once posthumanity arrives.1

Pro-extinctionism: the claim that our species should die out through replacement by posthumanity.2

I know of only a single individual in the TESCREAL movement who explicitly endorses the coexistence view: a guy named Jeffrey Ladish (below).3 Everyone else falls somewhere on the spectrum between extinction neutralism and outright pro-extinctionism, with many explicitly embracing the latter.

This is why remarks about the importance of “avoiding human extinction” from people in the TESCREAL movement drive me up a wall. The TESCREAL worldview is largely pro-extinctionist. It strives for a future in which posthumanity will take our place.

What folks like Krueger are really objecting to isn’t our species being replaced, but our species being replaced by the wrong sorts of posthumans.

Types of Pro-Extinctionism

Consider two camps within the TESCREAL movement, which I’ll call the “AI accelerationists” and the “AI doomers.”4 These correspond to views about whether or not we should slow down the pace of AI capabilities research, which specifically aims to build ASI.

However, underlying these views is a deeper divergence: a disagreement about the nature and identity of the posthumans who should take our place.

There’s no disagreement between accelerationists and doomers about whether humanity should be replaced.5 But there is a disagreement about which sorts of posthumans should do the replacing. It’s because of their differing views about the posthumans who should replace us that accelerationists want to accelerate the development of ASI, while doomers want to slow it down. I hope this will make sense by the end of the article.

Accelerationists

Consider first the accelerationists. Most in this camp say that as long as posthumanity — which could take the form of ASI — is genuinely superintelligent, then replacing humanity would be desirable, full stop.6

For example, Google cofounder Larry Page contends that “digital life is the natural and desirable next step in … cosmic evolution and that if we let digital minds be free rather than try to stop or enslave them, the outcome is almost certain to be good.”

The Turing Award winner Richard Sutton echoes this view in declaring that the “succession to AI is inevitable.” Though these machines may “displace us from existence,” he tells us that “we should not resist [this] succession.” Rather, people should see the inevitable transformation to a new world run by ASI as “beyond humanity, beyond life, beyond good and bad.” Don’t fight against it, because it cannot be stopped.7

Similarly, the former xAI employee Michael Druggan writes that

if it’s a true ASI, I consider it a worthy successor to humanity. I don’t want it to be aligned with our interests. I want it to pursue its own interests. Hopefully, it will like us and give us a nice future, but if it decides the best use of our atoms would be to turn us into computronium, I accept that fate.8

A few months later, he declared that,

in a cosmic sense, I recognize that humans might not always be the most important thing. If a hypothetical future AI could have 10^100 times the moral significance of a human doing anything to prevent it from existing would be extremely selfish of me. Even if it’s [sic] existence threatened me or the people I care about most.

Some accelerationists add that ASI must also be capable of conscious experiences. Gill Verdon and a colleague, for example, write in an accelerationist manifesto that the loss of consciousness “in the universe [would be] the absolute worst outcome.” It’s not clear whether Page, Sutton, and Druggan would agree, though I suspect they would: bequeathing the future to machines with no conscious experiences would constitute an existential catastrophe.

This is the accelerationist interpretation of pro-extinctionism. It endorses a set of minimal requirements for our ASI offspring to constitute worthy successors. Consequently, it implies that ASI wiping out humanity would entail terminal but not final extinction — assuming the ASI survives our elimination. Since all that matters to TESCREALists is avoiding final extinction, we should be okay with or even welcome this outcome.

Doomers

People in the doomer camp, such as (it seems) Krueger, hold a different view. Their version of pro-extinctionism posits that the beings who replace us must exhibit some set of additional properties for them to count as the right sorts of posthumans worthy of replacing us. An ASI that takes our place but doesn’t exhibit these extra properties would, in the most literal sense, be post-human, or “coming after humanity.” But it wouldn’t be “posthuman” in a sense that really matters — i.e., that would render this replacement an existential “win,” so to speak.

What might these additional properties be? The most common claim among doomers is that our posthuman successors must share and embrace our basic values.9 They must care about and value the same things we care about and value, such as pleasure, knowledge, having fun, etc.

The whole point of replacing humanity with posthumanity, on their view, is to increase the total amount of value-as-we-understand-it in the universe, and hence if these posthumans fail to do that, then this replacement will have been pointless.10

This issue is illustrated by an exchange between Larry Page and Elon Musk, as recounted in Max Tegmark's 2017 book Life 3.0:

"Larry gave a passionate defense of the position I like to think of as digital utopianism: that digital life is the natural and desirable next step in the cosmic evolution and that if we let digital minds be free rather than try to stop or enslave them, the outcome is almost certain to be good. I view Larry as the most influential exponent of digital utopianism. He argued that if life is ever going to spread throughout our Galaxy and beyond, which he thought it should, then it would need to do so in digital form. … Elon kept pushing back and asking Larry to clarify details of his arguments, such as why he was so confident that digital life wouldn’t destroy everything we care about."

Notice the last sentence, in particular.

This is why such doomers are very worried about the “risks” of ASI. Translation: they are worried about the ASI not sharing our values. It’s why doomers founded and developed the field of “AI safety.” The central task of AI safety is to solve the “value-alignment problem,” which is nothing but the challenge of figuring out how to ensure that the ASI we build is aligned with our human values.

From the accelerationist perspective, AI safety is a pointless endeavor precisely because accelerationists don’t care if ASI shares our values. Some, like the pro-extinctionist Daniel Faggella, explicitly argue that ASI should have “alien, inhuman” values. That comports with the position of Page, Sutton, and the others: let ASI do whatever it wants; let it be free; stop trying to control the values and norms that guide its actions in the world.

Doomers respond that this is madness. Many in this camp envision ASI not so much as our direct successor, but as a means for creating a new posthuman species that reflects and embodies what matters to us. If the ASI is value-aligned or controllable (another way of saying “safe,” the word that Krueger uses), then we could delegate to it the task of building a new world that’s ruled and run by posthumanity.

Consequently, Krueger misleads his audience in saying that “we need to course-correct immediately and work to prevent the development of superintelligent AI.” No — what even the most hardcore TESCREAL doomers want is not to prevent ASI from ever being developed, but to prevent it from being developed before we know how to control it.

For a discussion of how Yudkowsky, the leading AI doomer, doesn’t actually oppose the development of ASI or care about humanity surviving into the future, see this article of mine in Truthdig.

Becoming Posthuman

To recap: both accelerationists and doomers envision a eugenic future in which posthumanity replaces our species, thus avoiding final extinction. They just disagree about the nature of these posthumans: should they take the form of radically different and completely alien ASIs? Or should they be digital beings that are an extension of us, that share our specific values, that care about the things we care about?

We should note that many doomers also envision something else happening as we transition to a posthuman state, catalyzed by the near-infinite cognitive powers of a controllable ASI. Doomers may agree with the accelerationists that posthumanity will take the form of mostly digital beings. Call this “digital eugenics.” But they would add that it’s very important that some of these digital beings be transformed versions of the doomers themselves.

Most accelerationists don’t care whether they survive into the posthuman world by uploading their minds to computers or merging with machines. What matters to them is that superintelligent, conscious beings of some sort rise up — as Page says, that’s the next step in cosmic evolution, and it’s our grand eschatological mission to usher in this new phase.

In contrast, many doomers desperately want to join the ranks of those posthumans. Consider the case of Eliezer Yudkowsky, a leading doomer who cofounded the contemporary field of AI safety. He once declared that:

If sacrificing all of humanity were the only way, and a reliable way, to get … god-like things out there — superintelligences who still care about each other, who are still aware of the world and having fun — I would ultimately make that trade-off.

This might look like an accelerationist position, but notice Yudkowsky’s insistence, which he repeats elsewhere, that the ASIs replacing us must have human-like traits and values, such as caring about each other, being conscious and aware of the world, and having fun. For Yudkowsky, a posthuman successor that doesn’t share such values wouldn’t be worthy of succeeding us, which is precisely why his work over the past 20 years has focused on the value-alignment problem.

However, the view that Yudkowsky actually favors isn’t the one expressed in the quote above — that’s just an extreme example illustrating the importance of extending our values into the far future.

His preferred scenario is one in which he gets to become one of the digital posthumans who rule the world and colonize the universe. This is why he told Lex Fridman in 2023 that he grew up believing he would never die (see the video below). Eventually, it would become technologically feasible to upload his mind to a computer, thus achieving cyberimmortality as a digital space brain flitting about the universe in the form of pure information.

I bring this up because it points to yet another variant of pro-extinctionism. On this view, not only must posthumanity share our values but at least some posthumans should be radically transformed versions of people alive right now. At present, we have no idea how to upload our minds to a computer. But a computer with God-like mental abilities could — they tell us — easily figure this out, thus enabling Yudkowsky to become a digital posthuman. That’s yet another reason the doomers think it’s very important for ASI to be value-aligned.

Human Extinction Vs. Human Extinction: The Choice Is Up to You!

Returning to Krueger, my guess is that he holds a view similar to Yudkowsky’s. His anxiety, I surmise, isn’t that humanity will be replaced, but that we’ll be replaced by something that doesn’t share our values and doesn’t enable people like him to enter the posthuman world as a posthuman.

The only way to ensure that the posthumans who replace our species are worthy of succeeding us is to slow down AI capabilities research so that AI safety research can catch up. When he says we must “prevent the development of superintelligent AI,” he’s really talking about AI capabilities research. If AI safety research were to take the lead over capabilities research, then we should move full-speed ahead toward building ASI — because, by definition, if AI safety researchers solve the value-alignment problem before an ASI makes its debut, then this ASI will enable us to create or become the sorts of posthuman beings that TESCREAL doomers so badly desire.

The Crux:

Lost in this rigmarole is the fact that all these TESCREALists endorse a future that would entail terminal extinction — the sidelining, marginalization, and eventual elimination of our species. You can think of it this way: building a value-misaligned ASI will result in human extinction, whereas building a value-aligned ASI will result in human extinction. See the difference? I didn’t think so!

The catch is that the first scenario would entail final extinction,11 while the second would entail terminal without final extinction. And, again, all that really matters to TESCREALists is that we avoid final extinction.

In contrast, accelerationists would say that value-alignment is entirely irrelevant. Whether or not the ASI is “value-aligned” with us matters not. So long as the ASI constitutes the next step of cosmic evolution, we should welcome the outcome as desirable.

Perhaps you can see how the debate between doomers and accelerationists isn’t actually about human extinction — in the sense of our species dying out — it’s simply about what comes after us. It’s about which scenarios would count as final extinction. Both camps are unified by the view that our species should soon bite the dust.

That’s precisely what makes both camps the enemy of humanity. It’s why I’ve repeatedly argued that the entire TESCREAL framework should be abandoned. Rather than trying to build an ASI to usher in a world run and ruled by posthumans, why not design AI systems that work for us? That facilitate our creativity and enhance our humanity? That advance the causes of peace, sustainability, and prosperity? That ameliorate the human condition rather than trying to supplant it with a new posthuman condition?

The TESCREAL movement essentially gives up on the world we have — it gives up on our species, opting instead to abandon the entire human endeavor through the escapist fantasy of erasing that endeavor and starting anew.

AI systems that work for us are the sort of technology that I endorse. “AI” is not inherently bad, but right now it’s being developed and promoted (and warned about) by TESCREALists who, as such, want to usher in a novel phase of existence in which posthumans, in whatever form they might take, control our future.

This is what Adam Kirsch aptly dubs the “revolt against humanity,” which is taking place before our very eyes — a kind of realtime techpocalypse, if you will.

Conclusion

So, please don’t be deceived by doomer TESCREALists screaming about the possibility of “human extinction.” They are not using the term “human extinction” the way most of us would naturally assume, which is to their rhetorical advantage: by meaning “final extinction” while giving the impression they’re talking about “terminal extinction,” they can more easily convince their unwitting audience that everyone’s on the same side. In fact, these people are the enemies of our species, as terminal extinction is built into their eschatological plan. It’s an outcome entailed by the “best-case scenario” involving a fully value-aligned ASI.

Confusing matters even more, these TESCREALists explicitly define “humanity” as both our species and whatever posthumans come after us. That further enables them to obfuscate their strategic conflation of terminal and final extinction, since it allows them to say that they, too, care about the long-term survival of “humanity.” What they mean, though, is posthumanity, which in their oxymoronic phraseology counts as humanity (e.g., consider the term “posthuman humans”).

Don’t fall for such linguistic prestidigitation (i.e., sleight of hand). Whenever you see a video like Krueger’s, demand that the speaker precisely define ambiguous terms like “human” and “extinction.” Otherwise, assume that they’re talking about posthumanity and final extinction.

Further Reading

For folks interested in a more detailed, academic treatment of these ideas, see sections 4 to 6 of my recently published paper “Should Humanity Go Extinct? Exploring the Arguments for Traditional and Silicon Valley Pro-extinctionism,” in the Journal of Value Inquiry. A complete pre-publication draft can be found on my website here. 👇

As always:

Thanks for reading and I’ll see you on the other side.

In my Journal of Value Inquiry article, linked at the end of this article, I argue that extinction neutralism is pro-extinctionist in practice. The outcome of extinction neutralism and pro-extinctionism would be identical, since there’s no reason to believe that our species would survive for long in a world ruled and run by “superior” posthumans. This is why I would characterize nearly the entire TESCREAL movement as “pro-extinctionist.” Even those TESCREALists who’d explicitly reject pro-extinctionism in favor of extinction neutralism (examples might include Toby Ord, Will MacAskill, etc.) are still advocating for a future in which Homo sapiens would no doubt be sidelined, marginalized, disempowered, and eventually snuffed out.

The pro-extinctionist position comes in two general varieties: traditional pro-extinctionism and Silicon Valley pro-extinctionism. The key difference is that traditional pro-extinctionists want to bring about final extinction, whereas Silicon Valley pro-extinctionists want to bring about terminal extinction without final extinction. In this paper, all uses of “pro-extinctionism” are specifically referring to the Silicon Valley variety.

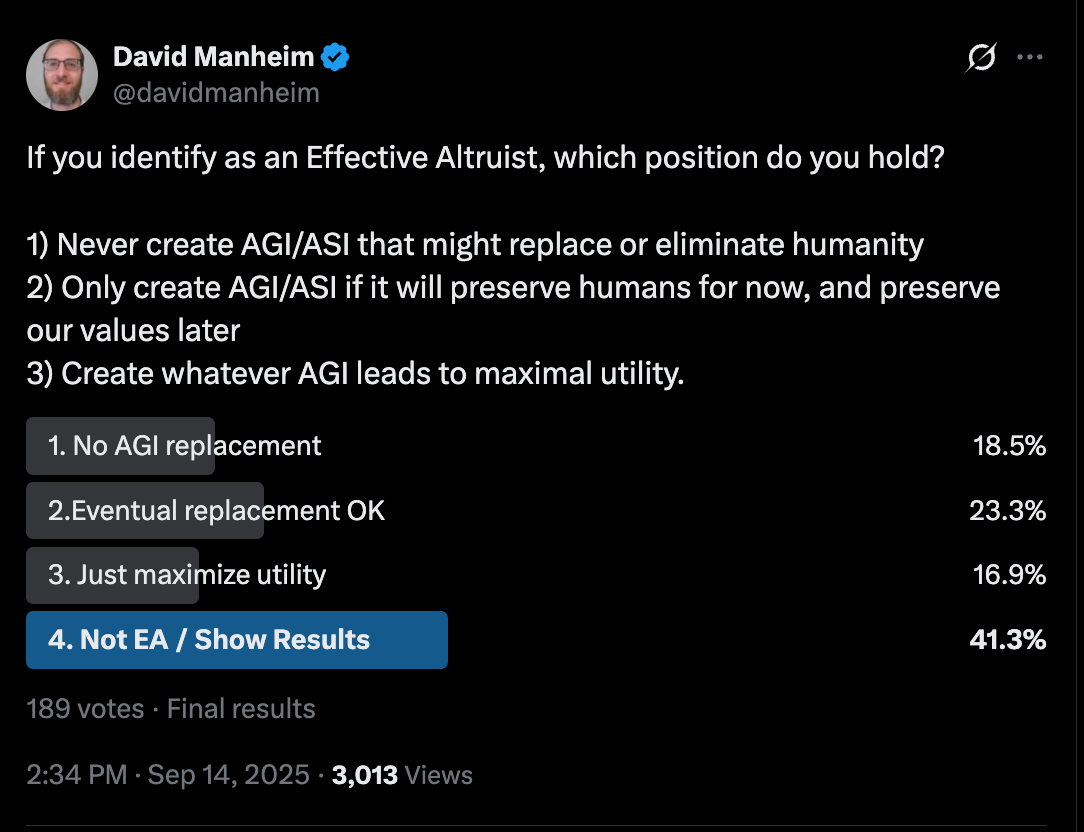

David Manheim, an active participant in the TESCREAL movement, posted a poll for EAs probing their views on the matter. About 18% of the 189 respondents said they favor the coexistence view.

This is a generalization about accelerationists and doomers. Exceptions can be found, though my claims here are nonetheless broadly true of these opposing groups.

With the exception of rarities like Ladish, a TESCREAL doomer who holds the coexistence view. Because this is a minority position that, to my knowledge, has never been defended in the TESCREAL literature by anyone, I will largely ignore it.

In other words, most accelerationists have minimal requirements for ASI to be our worthy successor. The doomers, as we will see, have more elaborate requirements.

This paragraph is largely quoted verbatim from this article of mine in Truthdig.

In other words, Druggan hints at the coexistence view, but is also perfectly fine with ASI violently exterminating us. Indeed, he suggests that if exterminating us would be best for the ASI, then it ought to do this.

What do they mean by “values”? No one in the community really knows. Most are utilitarians who think that “value” could mean pleasure, satisfied desires, or things like knowledge. The point is that whatever we take to be “valuable,” there should be more of it in the world. More more more! That’s the name of the ethical game they play. Utilitarianism is not that different from capitalism, except that the aim is to maximize the total amount of “value,” in a general moral sense, as opposed to “shareholder value,” in the case of capitalism. This is one of the problems with utilitarianism: its single-minded focus on maximization. But there is a wide range of alternative responses to value, such as treasuring, savoring, protecting, preserving, and caring for, in contrast to maximizing. I strongly prefer these alternative responses in most cases.

More specifically, many doomers would say that our posthuman successors need not accept the specific values that we currently accept. Rather, they should accept the values that we would embrace if only we were perfectly rational, perfectly informed, and so on. (In other words, the answer to the question, “What do we value?” might be: “What we value is whatever we would value if only we were perfectly rational, informed, etc.”) This is still an anthropocentric conception of value; it just acknowledges that we might not have figured out the right human values yet. If an ASI does that and embraces those values, this would count as a “win.”

And hence terminal extinction, because final extinction can’t happen if our species survives. As noted, final extinction entails terminal extinction, but terminal extinction could happen without final extinction having happened — as in the case of us dying out by being replaced with something else.

Am I the only one noticing that these wankers are all men? Who look like they have no pets, have not been outdoors in years and couldn’t keep a houseplant alive? TESCREALism seems to me like the latest technological iteration of patriarchal male self-hatred foisted upon humanity. These people should not be in charge of anything ever.

Thank you for clearing the fog around this entire thing. It is insane that some people really hold these thoughts. I have always wondered at that segment of this infamous Peter Thiel interview when he's asked if he would want the human race to endure and he hesitates to answer. I thought surely people misunderstood the reason for his hesitation. But reading this article, it all makes sense now.

I suppose we do need AI safety research. It is something we do have in common with the AI doomers. But the fact that our species’ extinction is offered up as a trade-off for anything makes me doubt the sanity of these people.