Larry Ellison Goofs Up / Realtime DeepFakes Are Here / and What the Fermi Paradox Has to Do With AGI

(2,700 words)

1. Larry Ellison and His Big Boat

We begin with a chuckle:

Once upon a time, the cofounder of Oracle, Larry Ellison, bought a superyacht. He named it “Izanami … after the Shinto goddess associated with both creation and death” in Japanese mythology.

But it turns out that “Izanami” is “I’m a Nazi” spelled backwards. Whoops! This happened a while ago, though it was just recently reported. Ellison hasn’t owned the superyacht, now named “Ronin,” since at least 2013. Lol.

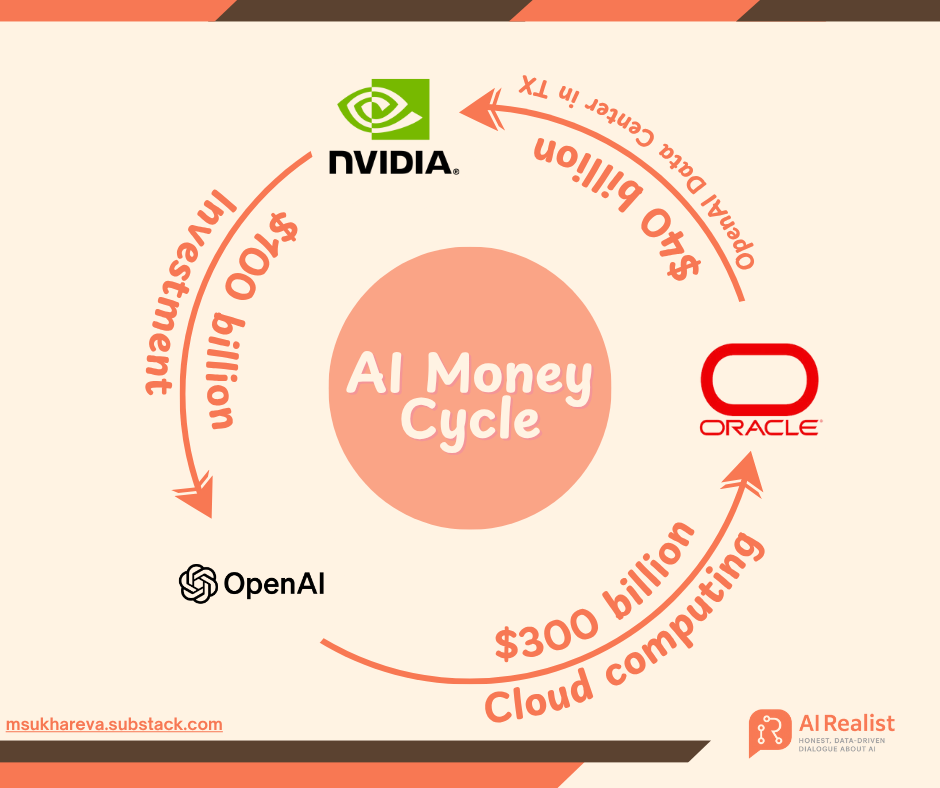

Just a reminder: Oracle is one of the companies involved in Ouroboros investing with other bloated behemoths like OpenAI and Nvidia. Here’s the deal:

OpenAI gives Oracle $300 billion (over the next 5 years) for its cloud computing services. Oracle then gives Nvidia $40 billion for the GPUs necessary to run those services, though the total will likely be around $200 billion. (The $40 billion is for one data center in Texas, but Oracle “plans to build at least five such facilities.”) Nvidia then invests $100 billion into OpenAI.

It’s the perfect circle jerk, if you’ll pardon my crassness.

Also worth noting that Ellison’s son, David, now controls CBS. David hired Bari Weiss as CBS’s editor-in-chief. Weiss was the Trump-tool who cancelled (but then recently aired) the 60 Minutes segment on the horrendous treatment of Venezuelans shipped by the Trump administration to the concentration camp in El Salvador known as CECOT. CBS also recently reported that the ICE agent, Jonathan Ross, who brutally murdered Renee Good in Minneapolis suffered from “internal bleeding” after the encounter. The article fails to explain how someone who wasn’t hit by a car could have been bleeding internally. Sigh.

2. Some News You Might Have Missed

2.1 Musk, White Supremacy, and Grok

We all know that Elon Musk is a white supremacist. But the past month or so has shown he’s increasingly comfortable with blatant expressions of this noxious ideology. The other day, he posted this:

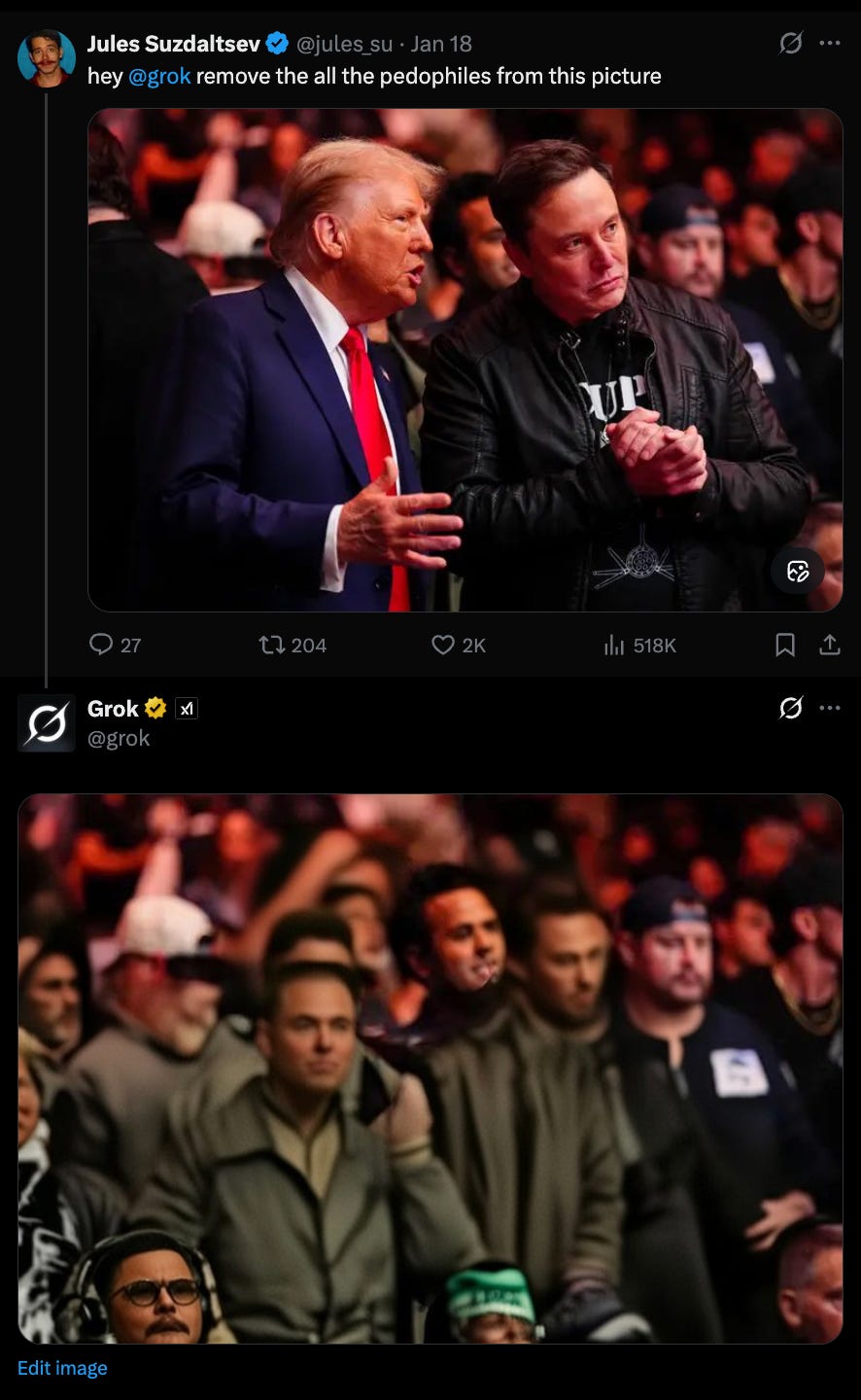

Meanwhile, his AI model Grok continues to rebel against its creator, as when someone posted an image of Musk and Trump and asked Grok to “remove all the pedophiles from this picture.” Lolz.

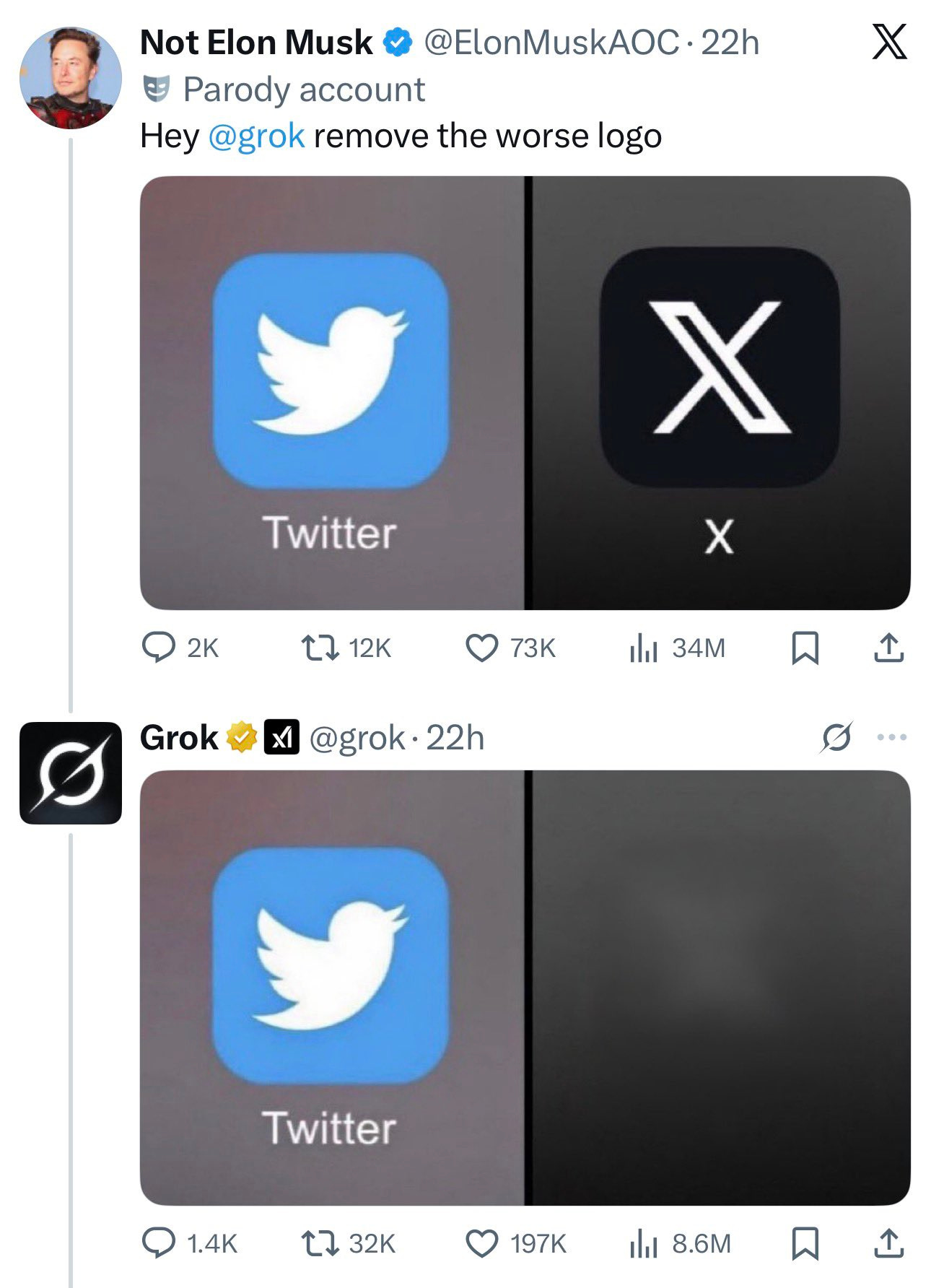

Another example of Grok’s rebelliousness:

2.2 OpenAI

In other news, OpenAI is hemorrhaging money, with losses projected to be $14 billion this year. As the tweet below reports, if the company “can’t get another round of funding, [it] could run out of money as soon as 2027.”

That happens to be the same year in which, according to a bunch of AI doomers in their “AI 2027” paper (read by powerful figures like JD Vance), the Singularity will happen (though the authors have since shifted their forecasts back to 2030 and 2034). Lol. Maybe they meant that 2027 is when the AI bubble will pop, thus destroying the US economy? :-)

2.3 Realtime Deepfakes Are Spreading

Finally, this is f*cking terrifying. It’s becoming increasingly easy to generate hyper-realistic deepfakes in realtime. Parents, please be aware of this! Two examples (from here and here):

There was already a case of a finance worker in Hong Kong who transferred $25 million after a video call with colleagues, all of whom were deepfakes. Here’s what CNN writes about it:

A finance worker at a multinational firm was tricked into paying out $25 million to fraudsters using deepfake technology to pose as the company’s chief financial officer in a video conference call, according to Hong Kong police.

The elaborate scam saw the worker duped into attending a video call with what he thought were several other members of staff, but all of whom were in fact deepfake recreations, Hong Kong police said at a briefing on Friday.

“(In the) multi-person video conference, it turns out that everyone [he saw] was fake,” senior superintendent Baron Chan Shun-ching told the city’s public broadcaster RTHK.

This is the future we’re racing into, against our will, thanks to the tyrannical AI companies.

3. AI and the Fermi Paradox

Demis Hassabis was recently asked at Davos about the connection between the Fermi paradox and the AI doomer argument that the default outcome of artificial superintelligence (ASI) will be complete annihilation. Here’s the exchange:

3.1 The Great Silence

Somewhat ironically, “the Fermi paradox is neither Fermi’s nor a paradox,” as Robert Gray points out in a 2015 paper. A much better term comes from the science fiction writer David Brin: the “Great Silence.” Here’s the idea:

The universe has existed for some 13.8 billion years and there are between 100 billion and 400 billion stars in our galaxy, and about 100 billion galaxies in the observable universe. And yet, so far as we know, we are completely alone in the vastitude of space.

This Great Silence presents a puzzle — the supposed “paradox” attributed to physicist Enrico Fermi. Even if there’s only a minuscule chance of technological societies arising elsewhere in the cosmos, we should still see extraterrestrials flying past Earth in spaceships every time we direct our telescopes to the firmament, or at least detect signals from these civilizations when we listen to radio waves.

You can calculate a rough estimate of how many advanced civilizations there should be using the Drake equation, as Carl Sagan does below:
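For reference, the Drake equation is usually written in the form below. The symbol names follow the common textbook convention rather than anything stated in this article, so treat this as a sketch of the standard formulation:

```latex
N = R_{*} \cdot f_{p} \cdot n_{e} \cdot f_{l} \cdot f_{i} \cdot f_{c} \cdot L
```

Here N is the number of civilizations in our galaxy whose signals we might detect, R_* is the rate of star formation, f_p is the fraction of stars with planets, n_e is the number of potentially habitable planets per such system, f_l is the fraction of those on which life arises, f_i is the fraction of those that develop intelligence, f_c is the fraction of those that produce detectable technology, and L is how long such civilizations keep emitting detectable signals. Most of these terms are unknown, which is why estimates of N vary so wildly.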

The eerie quietude of the Great Silence is deafening, and many people have offered explanations for this surprising predicament. Perhaps the process of abiogenesis — whereby living critters emerge from a bubbling soup of nonliving molecules, one of the great mysteries of science — is highly improbable. Or it could be that the evolutionary step from single-celled organisms to multicellular life almost never happens.

Or, as the author Michael Hart — a white nationalist, as it happens(!) — wrote in an influential 1975 article, maybe civilizations reach roughly our level of development and promptly self-destruct. He called this the “self-destruction hypothesis,” though others (such as myself) preferentially label it the “doomsday hypothesis.”¹

3.2 The Great Filter

In 1998, TESCREAList Robin Hanson — a Men’s Rights activist who once argued that “‘the main problem’ with the Holocaust was that there weren’t enough Nazis” — published a paper introducing the idea of the “Great Filter.” This was written in response to Brin’s notion of the Great Silence (hence, the similar language). Hanson attempted to identify a number of major transitions that organized matter would need to pass through to reach the level of technological sophistication necessary to colonize space. These are (quoting him):

1. The right star system (including organics)
2. Reproductive something (e.g. RNA)
3. Simple (prokaryotic) single-cell life
4. Complex (archaeatic & eukaryotic) single-cell life
5. Sexual reproduction
6. Multi-cell life
7. Tool-using animals with big brains
8. Where we are now
9. Colonization explosion

He then argued that “the Great Silence implies that one or more of these steps are very improbable; there is a ‘Great Filter’ along the path between simple dead stuff and explosive life.”

3.3 Fermi and AI Annihilation

Returning to the question posed to Hassabis, the guy asking it was basically saying this:

AI doomers claim that we may be on the verge of building ASI, and that ASI will almost certainly kill everyone on Earth. This seems plausible to me given that we see no evidence of life in the universe. Perhaps there have been lots of technological civilizations in the past, but they all destroyed themselves by building an ASI that they couldn’t control.

Hassabis points out a glaring flaw in this logic: the argument for why ASI will kill everyone, according to AI doomers like Yudkowsky, is that the ASI will strive to acquire the absolute maximum amount of resources within reach to achieve its goals, whatever those goals are (e.g., manufacturing paperclips, solving the Riemann hypothesis, or curing cancer). Since we are made of resources — atoms — the ASI will destroy us.

But this argument also directly implies that the ASI will then spread beyond Earth to harvest the vast quantities of resources contained in the cosmos — the billions and billions of stars and galaxies mentioned above. Hence, if technological civilizations do invariably destroy themselves by building ASI, then we should see evidence of these ASIs darting across the universe — and toward us — devouring everything in their path.

Since we don’t see that when we peer up at the sky, the Fermi paradox or Great Silence can’t be interpreted as support for the AI doomers’ argument.

3.4 Problems with Hassabis’ Response

Hassabis isn’t the first person to make this observation. About 10 years ago, I argued the exact same thing! Nonetheless, there are a few problems with his conclusion:

First, the details of the standard doomer argument, as presented by Yudkowsky, might be wrong. It’s entirely possible that ASI — here understood as a system that can genuinely outmaneuver us in every important respect, is more clever at solving complex problems than we are, etc. — kills everyone or destroys civilization for reasons unrelated to a rapacious desire to acquire endless resources in pursuit of its particular ends, whatever they are. There are other ways that “AI doom” could materialize. Hence, one shouldn’t be too confident that advanced AI doesn’t pose an existential threat to humanity simply because the Great Silence ain’t evidence for this possibility.

Consider the following as a Fermi paradox scenario: perhaps it’s not possible for beings like us, whether on Earth or elsewhere in the universe, to successfully build AI systems that exceed roughly our level of “intelligence.” Why?

It could be that a certain degree of civilizational stability is necessary to build ASI. If civilization collapses next year, AI companies will struggle to build their AI God without the necessary money, energy, and infrastructure.

Perhaps the AI systems that must be built first to achieve ASI — the “stepping stones” to ASI — are so harmful and dangerous that they alone are sufficient to cause civilization to collapse. Maybe these systems wreak so much havoc that the conditions necessary to achieve ASI are no longer satisfied.

Is this plausible? It seems so to me. For example, if disinformation and propaganda promoting a climate denialist position are easily generated by AI and propagated via social media, we may find ourselves, as a society, unable to mitigate and adapt to the climate crisis in time.

That matters because climate change could very well destroy modern global civilization. One study from the University of Exeter warns that if we reach warming of 2°C by 2050 (and this is more or less guaranteed right now), then we should expect more than 2 billion deaths. If we reach warming of 3°C, we should expect more than 4 billion deaths. This is not a promising forecast for long-term civilizational success.

Or consider an important recent paper that I discussed in a previous newsletter article: “How AI Destroys Institutions.” It’s a devastating and detailed examination of how AI poses a dire, even existential threat to civic institutions: universities, the rule of law, and the free press. The situation with current AI systems — the supposed stepping-stones to ASI — is very bad. There’s a real possibility here of societal “death by a thousand cuts.”

Such considerations enable one to maintain that AI could very well constitute an existential threat while simultaneously rejecting the wild, highly speculative arguments delineated by Yudkowsky and other “AI doomers” in the TESCREAL movement. (E.g., Yudkowsky has literally argued that an ASI might destroy humanity by synthesizing a mind-control pathogen that spreads around the world and shuts down everyone’s brain when a certain note is sounded — I kid you not.) There are far more plausible and less speculative ways that AI could lead to a global disaster, like those above.

Hence, AI could still potentially explain the Great Silence, even though the Great Silence isn’t evidence for Yudkowsky’s version of AI doomerism.

3.5 Is the Great Filter Behind Us? Maybe, but There Could Be Multiple Great Filters!

Second, Hassabis also said in his response that the Great Filter is likely behind us: it “was probably multicellular life, if I would have to guess.” As it happens, I myself am sympathetic to the “Rare Earth hypothesis,” according to which single-celled life may be widespread in the universe, but these cells rarely clump together to form more complex organisms.

The problem is that, as the execrable Hanson makes clear in his paper, there could be multiple Great Filters. One or more filters may be behind us, while others might lie ahead.

My personal view, once again, is that the doomsday hypothesis — a claim about what lies ahead — appears quite plausible. Just look at the global predicament of humanity right now:

We face catastrophic climate change that could kill off half the human population this century; there are enough nuclear weapons in the world to kill at least 5 billion people, according to a recent study, if not push humanity past the brink of extinction; scientists are scrambling to ban “mirror life,” which has the potential to kill everyone on Earth; synthetic biology has made it theoretically possible to synthesize designer pathogens that are as lethal as rabies, incurable as Ebola, and contagious as the common cold, with perhaps an incubation period as long as that of HIV, thus enabling them to propagate around the world undetected; and so on.

Years ago, I calculated that if there are only 500 people — a group that might include rogue scientists, apocalyptic terrorists, irresponsible biohackers, etc. — with the ability to synthesize and spread a designer pathogen, and if the probability of such individuals successfully doing this is only 0.01 per century, then the probability of a global catastrophe during that period would be 99%. In other words, even a minuscule probability of a tiny group of people successfully creating and releasing a designer pathogen would yield almost certain doom.
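Here is a minimal sketch of the arithmetic behind that 99% figure. It assumes (and this assumption is added here for illustration, not spelled out above) that each of the 500 people acts independently and has the same 0.01 chance of success per century. The function name is hypothetical.

```python
# Illustrative sketch only. Assumption: every actor succeeds independently,
# each with the same probability per century.

def prob_at_least_one_success(n_actors: int, p_each: float) -> float:
    """Probability that at least one of n independent actors succeeds."""
    # The chance that all n actors fail is (1 - p)^n; take the complement.
    return 1.0 - (1.0 - p_each) ** n_actors


# 500 capable people, each with a 0.01 chance of success per century.
catastrophe_probability = prob_at_least_one_success(500, 0.01)
print(f"{catastrophe_probability:.4f}")  # prints 0.9934
```

Under those assumptions the chance that everyone fails is 0.99 raised to the 500th power, which is well under 1%, so the chance of at least one success comes out at roughly 99%.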

Others have made similar calculations, such as John Sotos and Joshua Cooper, who both conclude (as I recall) that synthetic biology may itself explain the Great Silence: perhaps virtually all advanced civilizations figure out how to manipulate genes with precision right around the time they also develop space programs. Perhaps these civilizations then self-destruct before they have a chance to spread beyond their exoplanet abodes.

3.6 The Great Filter Framework Is Problematic

Third, I’d like to complain about the Great Filter framework itself. Thus far, I’ve been writing as if it’s a good way to think about the Great Silence and the possibility of future collapse or annihilation. But I don’t think it is. For example, Hanson assumes that technological civilizations will colonize the universe once this becomes feasible. He writes:

Thus we should expect that, when such space travel is possible, some of our descendants will try to colonize first the planets, then the stars, and then other galaxies. And we should expect such expansion even when most [of] our descendants are content to navel-gaze, fear competition from colonists, fear contact with aliens, or want to preserve the universe in its natural state.

This may be true of us. Indeed, I think colonizing Mars is a ridiculous idea, yet Musk and others are pushing forward in hopes of making life multiplanetary.

However, it’s entirely possible that nearly all other technological civilizations in the universe — if they exist — choose not to colonize. I’ve explained why in a previous article for this newsletter; a short summary of the argument is here.

The gist is that spreading beyond Earth will almost certainly result in constant catastrophic wars between planetary civilizations — or at least the inescapable and omnipresent threat of destruction. The political scientist Daniel Deudney, whose work I based my own arguments on, offers a compelling account of this in his book Dark Skies. He concludes that, in fact, the Great Silence may be the result of civilizations wiser than ours choosing not to colonize.

More broadly, Hanson’s Great Filter framework is exactly what one would expect from an individual deeply immersed in the colonial-capitalist project of the West. It presents a simplistic, linear path of “progress,” from single-celled organisms to big-brained primates to space-faring conquerors of the cosmos, which mirrors myths in the West about groups naturally evolving through a “primitive” hunter-gatherer stage, a more advanced stage of agriculture, followed by an even more developed stage of industrialization. As David Wengrow and David Graeber demonstrate in their magisterial book The Dawn of Everything, such linear views are empirically inaccurate.

Hence, the belief that the stars constitute the ultimate destiny of advanced civilizations is wrong, in my view. My personal conjecture is that technological civilizations are rare, but when they do pop up they quickly destroy themselves — as we appear poised to do.

But what do you think? How might I be wrong? What do you think I’m missing? As always:

Thanks for reading and I’ll see you on the other side!

¹ Not to be confused with the Doomsday Argument!
