Is the Hype Just Hyperbole? Could AI Actually Destroy the World?

Another lighthearted conversation about a cheerful topic!

I’ve had a number of people ask me recently about how my views on the “existential risks of AGI” have changed over time. Here’s a brief overview of my current thinking, which offers — perhaps — a somewhat unique perspective on the topic. I think I’m right about what follows, but as I tell my students: most people who are wrong think they’re right! Hence, I’d very much welcome feedback: what do you think I’m missing? What am I getting wrong? What are your own views on the matter? I’ll open up the comments section to everyone, including non-subscribers, so you can share your thoughts. Perhaps I’ll update my views and publish another piece in the coming weeks! :-)

The Framing Is All Wrong

As many of you know, I was a very active member of the TESCREAL movement for roughly a decade (~2009 to ~2019). Most of what I wrote about centered around “existential risks,” and I’m listed as the sixth most prolific contributor to the existential risk literature (a fact that makes me chuckle heartily).

However, I now wholly reject the “existential risk” framing of the question about AGI. I’ve alarmed a few people by saying that “I literally couldn’t care less about existential risks,” but this isn’t a radical view once you understand what it means. Let me explain:

The concept of existential risk was introduced within the explicit context of transhumanism, the ideological backbone of what Dr. Timnit Gebru and I call the “TESCREAL bundle of ideologies.” (It’s the “T” in that acronym.) Transhumanism is the view that we should radically reengineer humanity to become a new “posthuman” species.

Other ideologies in the TESCREAL bundle, such as longtermism, expand the transhumanist futurology in claiming that once we become posthuman, we must fulfill our cosmic destiny by spreading beyond Earth, conquering the universe, subjugating all natural processes, plundering the untapped resources of space, and establishing a multi-galactic civilization full of unfathomable numbers of digital people, all of whom would be living in vast computer simulations. The Father of Longtermism, Nick Bostrom, estimates that there could be at least 10^58 digital people. Since bringing these people into existence is of great moral importance to longtermists, one could thus sloganize the core idea as: Won’t someone think of all the digital unborn? (Lolz.) Elon Musk has called longtermism “a close match for my philosophy,” to give you a sense of how influential this vision has become in Silicon Valley. It wouldn’t be worth talking about if it didn’t matter.

So, existential risk is the most central idea in longtermism — indeed, within the TESCREAL bundle more generally. It essentially denotes any event that would prevent us from creating this “techno-utopian” world of the future; from fulfilling our cosmic destiny in the universe.

The most obvious existential risk is human extinction (a term with many possible definitions), but it’s important to note that there are plenty of survivable existential risks as well. For example, on the standard definition, the following scenario would literally count as an existential catastrophe:

We develop advanced technology and cure all diseases, radically ameliorate the human condition, redistribute wealth so that no one is poor, eliminate world hunger, establish perpetual peace on Earth, fully restore the many ecosystems we’ve obliterated, live in perfect harmony with nature, and so on. But, our descendants also choose not to become posthuman or colonize the cosmos and plunder its vast resources — our so-called “cosmic endowment” of negentropy, or “negative entropy.”

By never doing these things, humanity will have failed to realize a huge fraction of our “long-term potential” in the universe. The whole reason that Bostrom invented the existential risk concept was to pick out scenarios in which we fulfill only a tiny part of our potential. This is why the scenario above would be an existential catastrophe.

If this sounds ridiculous to you, then you and I are same-paging it! You understand why I don’t care one bit about “existential risks” (and neither should anyone else). Since I don’t buy into these bizarre fantasies of digital posthumans living in virtual reality worlds running on literally “planet-sized” computers powered by Dyson spheres strewn across the literal heavens, I don’t care one bit about any event that prevents us from realizing this future.

This does not mean that I don’t care about global catastrophes or human extinction! I very much do, but not because they could foreclose this oddball sci-fi “utopia.” Rather, I care about (averting) them because of the harm they would inflict on actual living, breathing, real human beings like you and me. This is why I preferentially employ the term “existential threat” instead of “existential risk” — to detach the possibility of global-scale harms to civilization or humanity from the techno-fantasies of TESCREALism. I care about existential threats, but not existential risks. The terminological switcheroo here might look rather trivial, but it reflects a deep divergence in ethical perspectives on what really matters.[1]

A Merely Hypothetical Future Technology

Returning to the original question about AGI, my points above suggest a very easy fix: simply reframe the question as: “Does AGI pose any existential threats to civilization or humanity?” Yet problems remain with the question itself. (This is one of the annoying things about asking philosophers anything: we often get hung up on the details, sometimes driving our interlocutors to consider autodefenestration as a last-resort means of extricating themselves from the insufferable conversation!)

First, I have no idea what “AGI” means because no one does. As a bunch of Google DeepMind engineers wrote in a paper published last year, “if you were to ask 100 AI experts to define what they mean by ‘AGI,’ you would likely get 100 related but different definitions.” If we can’t define what “AGI” means, then asking whether it poses a dire threat to humanity is meaningless. By analogy: Do you think that globeddygirk poses an existential threat to civilization or humanity? What’s your answer? Hmm? Stop being such a philosopher and just answer the damn question!

Fortunately, I think there’s a relatively easy fix for this, too: let’s just avoid the obfuscatory term “AGI” altogether, since what the question is really getting at is the following:

Imagine we build an AI system of some sort that can out-maneuver us in every important way, is more clever than us, can solve problems faster than we can, and so on. (Might as well call this system “globeddygirk”! After all, “What’s in a name?”) Could this system pose an existential threat?

My answer would be: Yes, of course. I see absolutely no reason to think that we could control such a system in every way at all times. Hence, I see no reason to think that building this system wouldn’t have potentially catastrophic consequences — which is why we should never try to build it. Here I actually agree with people like Roman Yampolskiy who say that a system like this would inevitably lead to catastrophe.

Could this catastrophe entail human extinction? I’ll elaborate on the reasons in another post, but my considered view as someone who’s been publishing on global catastrophic risks since 2009 is that the probability of human extinction is generally quite low this century. That’s the good news. The bad news is that I think the probability of civilizational collapse is extremely high, as explored more below.[2]

Focusing specifically on extinction for now, the fact is that humans are incredibly adaptable. Back in 2009, the academic journal Futures published a special issue in which contributors were tasked with devising scenarios in which everyone on Earth dies. The take-away from this issue was that it’s really hard to figure out how to kill every last person! Think of the uncontacted tribes in the Amazon, the small communities in northern Siberia, etc. Even a full-on exchange of thermonuclear weapons between the US and Russia would “only” kill some 5 billion people — that’s horrific beyond human comprehension, but nowhere close to human extinction.

Still, if we build an AI system that really is more clever, better at problem solving, capable of out-maneuvering us, etc., perhaps it could find some surprising way to expel all humans from the theater of existence, including the aforementioned uncontacted tribes and Siberian communities. Recall that in 2022, a team of scientists got curious and tasked an AI-based system called “MegaSyn” with generating “a compendium of toxic molecules that were similar to VX,” one of the most lethal nerve agents known to science. As Scientific American reports:

The team ran MegaSyn overnight and came up with 40,000 substances, including not only VX but other known chemical weapons, as well as many completely new potentially toxic substances. All it took was a bit of programming, open-source data, a 2015 Mac computer and less than six hours of machine time.

Perhaps a very capable AI system could figure out a way to synthesize and disperse even more lethal toxins around the world. Maybe it wouldn’t be toxins but designer pathogens created using CRISPR-Cas9. I don’t know, but the risk seems real enough for me to reiterate my previous statement: no one should ever try to build a system with such capabilities, because the laws of nature clearly state that if you fuck around, you’re going to find out. The lesson of the MegaSyn experiment isn’t that an advanced AI did that, but rather: “Holy hell, I didn’t know that an AI system could do that.” Even the authors of the study were startled and frightened by the results. What else, then, might an advanced AI system be able to do that we can’t yet anticipate?

This is one reason I’m sympathetic to the StopAI movement. Their position, as I understand it, is that we should immediately and permanently halt the development of AI. This contrasts with groups that I’m not a big fan of, such as PauseAI, which more closely align with TESCREAL doomers like Eliezer Yudkowsky in maintaining that we should build super-powerful, super-advanced AI systems, but only once we know how to effectively control them (hence, “pause” rather than “stop”). This is based on the myth, in my view, that it could be possible to control such systems, which I see no good reasons for accepting. At least that’s my current position.

The Scariest Futures Are Far Away

Here comes a huge qualification: we’re nowhere close to building the sort of advanced systems with the general capabilities described above, and scaling-up large language models (LLMs) isn’t going to get us there. As the recent release of GPT-5 shows, even the most advanced frontier AI models are shockingly dumb, and progress appears to be hitting a brick wall (we’ve picked most of the low-hanging fruit since 2022, when ChatGPT was released). As Gary Marcus writes, “LLMs are built, essentially, to autocomplete.” Or to quote Emily Bender, Timnit Gebru, and other scholars, they’re nothing more than “stochastic parrots.”

LLMs don’t “think,” they have no world models, they can’t reason, and they hallucinate constantly. Indeed, hallucinating is all they do, which is precisely why their outputs are nothing more than pure “bullshit,” in the technical sense of that term. Increasing the compute, parameters, and training data of a stochastic parrot isn’t going to get you something qualitatively distinct — a “God-like AI,” as they say — it’s only going to get you a parrot that squawks a little different.

This is why I’m not worried about AI posing any near-term existential threats to the survival of our species as a whole. The AI bubble is going to burst, perhaps taking down much of the entire US economy with it, and those who claimed that “AGI” — whatever that is exactly — is right around the temporal corner are going to look rather silly. In sum, there’s no immediate danger of human extinction from AI, although there might be at some point in the more distant future. That’s precisely why we should, you know, StopAI right now.

The Present Is Still Very Scary

But there’s a twist in the narrative I’m spinning: I don’t think we need to build super-advanced God-like AI to pose serious threats to human civilization. The systems we have right now could nontrivially contribute to, or help catalyze, the collapse of our modern global civilization in the near term, i.e., within our lifetimes.

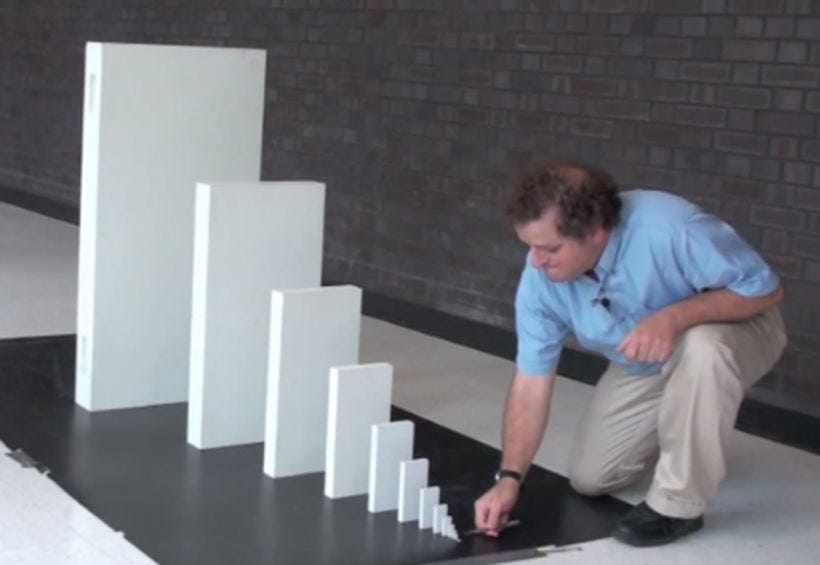

It’s worth noting here that many civilizations have in fact collapsed throughout history. Not every doomsayer who screamed “The end is nigh!” was wrong. What’s different between then and now is that societies, nations, states, communities, countries, and empires aren’t isolated from each other the way they once were, but have instead become massively interconnected through complex economic, agricultural, political, and other networks. One domino falling could easily trigger amplifying cascades that bring everyone else down, too. This is just another way of saying that we live in a global village.

Now consider the case of climate change. Current AI systems — LLMs — could significantly exacerbate this civilization-level threat in two main ways. First, running LLMs comes with a huge carbon price tag. The emissions of Microsoft, which has a close relationship with OpenAI, have increased by 23.4% due to AI, while the emissions of Google, which owns DeepMind, are up a whopping 51%. This is despite Google’s pledge “to achieve net-zero emissions across all of our operations and value chain by 2030” and Microsoft’s claim that it will be “carbon negative” by 2030.

Second, LLMs enable the generation of highly compelling (to most people) disinformation about climate change. About 15 years ago, I wrote an article (which I never published) arguing that the effects of climate change will make climate denialism impossible by ~2030. People will look around at a world unraveling and find that denying the reality of anthropogenic global warming is no longer tenable. As the saying goes, “climate change will manifest as a series of disasters viewed through phones with footage that gets closer and closer to where you live until you’re the one filming it.”

I now think that denialism might actually increase, for the same reason that the most committed members of The Seekers became more convinced of the truth of their prophecies after those prophecies failed. Backstory: some psychologists infiltrated a 1950s UFO cult to study it. When its prophesied doomsday never materialized, many cult members stuck to their beliefs, holding that it was precisely because of their fervent conviction in the prophecies that the passing UFO did not destroy the world. One of these psychologists, Leon Festinger, coined the term “cognitive dissonance” to describe this phenomenon.

In the same vein, I now suspect that we’ll see all sorts of increasingly bizarre conspiracy theories to explain away the effects of climate change. Think of recent claims that the Democrats are controlling the weather, and Marjorie Taylor Greene’s banger about “Jewish space lasers.” The point is that LLMs will massively supercharge such disinformation and conspiracy theories. They will enable propagandists to rapidly generate a high volume of extremely plausible-sounding stories about “what’s really going on — what the government and woke liberals don’t want you to know,” which will then be mass disseminated on social media.

This could pose a truly profound obstacle to addressing the root causes of climate change because if half the US population thinks that Democrats are responsible for the most recent flurry of devastating wildfires, spate of flash floods, wave of hurricanes, etc., then there simply won’t be the political will necessary to obviate the worst-case outcomes.

I am very, very worried about this, and I don’t see how our modern global civilization can survive the century, or even make it much past the mid-century point, if climate change is left unchecked. According to a recent study from the University of Exeter, if we exceed 2 or 3 degrees C of warming by 2050, we should expect between 2 and 4 billion — with a “b” — people to die prematurely. I can’t imagine civilization surviving if “only just” 1 billion were to suddenly perish over the course of a few years, much less 2 to 4 billion. This is only one estimate, and the numbers are very uncertain, but what I’ve noticed is that, over time, leading climate scientists have made increasingly dire prognostications about how bad climate change will be. In other words, the more they understand the phenomenon, the bleaker our future appears. (It could very well have been the opposite: more research could have undermined prior warnings. But reality is cruel, and nature bats last.)

Hence, at precisely the moment when immediate action is needed to avert an unprecedented environmental catastrophe, companies like OpenAI have unilaterally forced upon society, without our consent, AI systems that are (a) nontrivially raising the concentration of atmospheric CO2, and (b) handing climate deniers and rightwing extremists a powerful new tool to generate propaganda to spread on social media platforms like X. This looks bad.

That’s What I Think, or At Least Think I Think

In conclusion, my take is that the “existential risk” framing is all wrong, which is why I only ever talk about “existential threats” these days. Existential threats include things like civilizational collapse and human extinction. Once again, the latter would entail the former, but not vice versa.

If we do succeed in building AI systems that can out-maneuver us in every way, I see no reason to believe that we’d survive for long, but I don’t think we’re anywhere close to building such systems (*takes deep breath*). Nonetheless, the systems we currently have are enough to jeopardize, in nontrivial ways, the survival and flourishing of our global village (*starts hyperventilating again*).

Those are my current thoughts on the risks of AI, at a very high level. I have said nothing about worker exploitation, intellectual property theft, algorithmic bias, and so on, because I’m focusing exclusively on existential-type threats in this post.

What do you think? I haven’t really heard anyone articulate exactly the same view as above — do you think I’m right? Am I one of those people who thinks they’re right but is actually quite wrong?[3] :-)

Thanks so much for reading and I’ll see you on the other side!

Word of the day: lalochezia, meaning “the use of vulgar or foul language to relieve stress or pain.”

[1] To be clear, I take “existential threat” to encompass two broad possibilities: human extinction and civilizational collapse. Human extinction would entail civilizational collapse, but civilizational collapse need not entail human extinction. Keep this in mind as you read further.

[2] In his 2003 book Our Final Hour, Lord Martin Rees — a friend who wrote the foreword to my 2017 book — argues that there’s a 50/50 chance of civilizational collapse by 2100. I currently think this is wildly optimistic, although I tentatively agree with the futurist Bruce Tonn that the probability of human extinction “is probably fairly low, maybe one chance in tens of millions to tens of billions, given humans' abilities to adapt and survive.”

[3] Note that I borrowed the title of this post from a report I wrote last year on quantum computing.

I made a similar observation recently: I don't think that this instance of AI, or to be more precise GenAI, is anywhere close to being a superintelligent system that could have a "want" or "need" to wipe us off the Earth. Rather, it is just one massive cut among many others that will contribute to environmental collapse.

Published here on Substack if anyone is interested in reading.

I am very, very close to the position you articulate.

To make this more explicit, here are a bunch of intuitions/opinions I have about AI and the corresponding risks:

A) There is _in principle_ nothing ruling out _any_ development in AI. (Gotthard Günther makes ONE caveat to this, but since it would invite more misunderstandings than it would resolve, I'll bracket it for the here and now.)

B) If A holds true, then your intuitions about StopAI are also true, insofar as this would mean we couldn't possibly stop any behavior that is undesirable from our POV.

C) A and B - and this is kind of important - are absolutely moot points insofar as we are not only not close to building such AI but are not even on any trajectory that seems promising in this regard.

D) I am not entirely sure how high on their own supply these people are - reading prior posts of yours, it seems that they pretty much are - but there seems to be a decent to good chance that not only will we produce bogus climate science, but we will also sacrifice any real chance to stop climate change in order to hunt for an entirely unrealistic AI savior that will tell us how to solve the issue.

E) As highly alarming as D already is, the more imminent danger is mass support for authoritarian movements, fueled by increasing amounts of targeted propaganda, that might propel us into a global war. The role of AI in this regard seems to be not only the production of the propaganda in question but also serving as a lightning rod to shield oneself from responsibility. Given the common belief in AI, it seems that a significant percentage of people WILL be okay with technically mediated war crimes that our Superbrain at GPT6 or GrokWhateverVersionNumber will have worked out for our superior firepower.

So, in short, yes, I am VERY VERY down with what you say. And given that libertarian and rightwing ghouls will be handling public education for the foreseeable future, I _do_ think that we need to put A LOT OF energy into countereducation right now, because when you say "This looks bad" - hell, it fucking does.

We can still swing this thing around. But we need to do it STAT.