Is Sam Altman a Sociopath?

On his long history of mendacious, manipulative, and abusive behavior. (1,500 words.)

First, I want to share the most recent episode of my podcast Dystopia Now. The comedian Kate Willett and I interview the brilliant young linguist Adam Aleksic, better known on TikTok and YouTube as Etymology Nerd. Adam just published a fantastic book titled Algospeak.

For me, this was one of the most enjoyable episodes we’ve recorded so far, in part because I’ve had a longtime interest in linguistics and the philosophy of language, going back to my undergraduate years, and I got to nerd out with Adam about topics like:

- how Westphalian sovereignty following the Thirty Years’ War standardized languages,
- the simultaneous loss of languages around the world (on average, one language is lost every two weeks!) and rapid diversification of novel dialects via the Internet, and
- the ways that algorithms are concentrating power over the future evolution of language in the hands of a small number of Big Tech companies.

If this piques your curiosity, you can listen here.

The most successful founders do not set out to create companies. They are on a mission to create something closer to a religion, and at some point it turns out that forming a company is the easiest way to do so. — Altman

Now, on to the main topic of this article: Sam Altman is a profoundly duplicitous person who’s willing to say whatever is necessary to acquire increasingly obscene amounts of wealth and power. News flash, I know! Let’s take a deeper look:

In 2023, the Washington Post reported that “senior employees” at OpenAI describe Altman “as psychologically abusive,” with some company leaders saying that he is “highly toxic” to work with. Gizmodo writes that “Altman was accused of pitting employees against each other and causing ‘chaos’ at the startup.”

Todor Markov, who left OpenAI to work for Anthropic (another villain in the race to build AGI), characterizes Altman as “a person of low integrity who had directly lied to employees” about non-disparagement agreements that barred “them from saying negative things about the company, or else risk having their vested equity taken away.” Altman has repeatedly claimed that he does “not have a stake in OpenAI,” yet it turns out that he financially benefitted from OpenAI’s Startup Fund, which he owned until last year.

When Altman was fired by OpenAI’s board — before it became a full-on for-profit company — the reason given was that he was not “consistently candid in his communications.” In 2024, one of the board members who fired Altman, the EA-longtermist Helen Toner, provided these examples:

A deposition just made public from former OpenAI employee and cofounder Ilya Sutskever includes an email memo that reads: “Sam exhibits a consistent pattern of lying, undermining his execs, and pitting his execs against one another.” When a lawyer asked Sutskever during the deposition, “What action did you think was appropriate?,” he replied: “Termination.”

Gary Marcus notes that Altman just got caught “lying his ass off” once again — this time “to the American public.” You can read Marcus’ commentary here, but the TL;DR is: Sarah Friar, OpenAI’s CFO, called “for loan guarantees” that would ensure bailouts for OpenAI from the US government, given OpenAI’s overtly “reckless spending.” This pissed off a lot of people on both sides of the political spectrum, leading Altman to release “a meandering fifteen-paragraph reply on X” in which he claimed that “we do not have or want government guarantees for OpenAI datacenters.”

And yet OpenAI had “explicitly asked the White House office of science and technology (OSTP) to consider Federal loan guarantees, just a week earlier.” Altman also alluded to this in a recent podcast episode with economist Tyler Cowen, discussed more below. “When something gets sufficiently huge,” Altman told Cowen, “the federal government is kind of the insurer of last resort, as we’ve seen in various financial crises … given the magnitude of what I expect AI’s economic impact to look like, I do think the government ends up as the insurer of last resort.” Um, wtf?

Marcus concludes: “Altman lied about the whole thing to the entire world.”

I doubt I’ll lose any friends by declaring that Altman is a charlatan, a conman, a fraud, hence the descriptively accurate moniker “Scam Altman,” popularized by Elon Musk, who, by the way, just won a $1 trillion pay package from Tesla. To quote Paul Graham, who picked Altman as Y Combinator’s President in 2014: “Sam is extremely good at becoming powerful.” He does this by manipulating others without shame, compunction, or scruples.

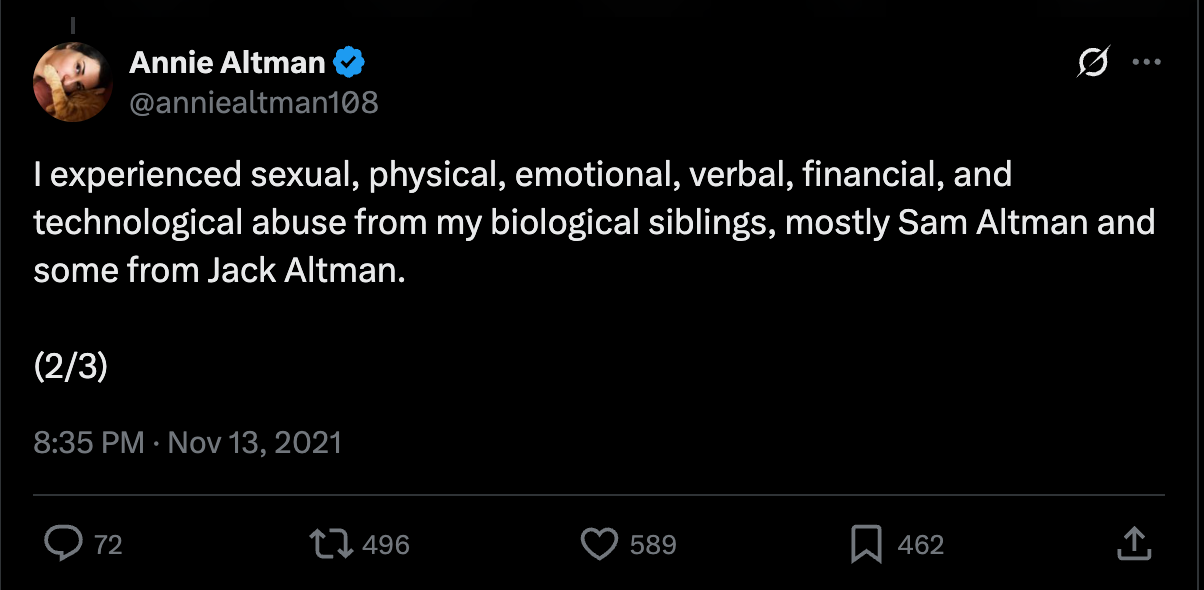

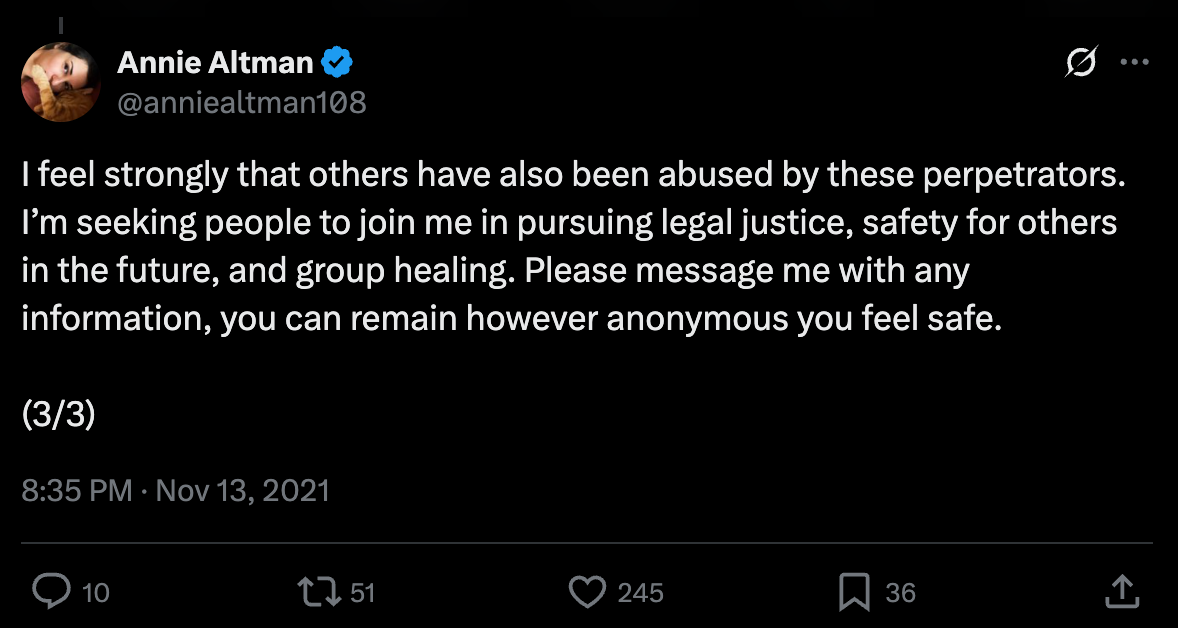

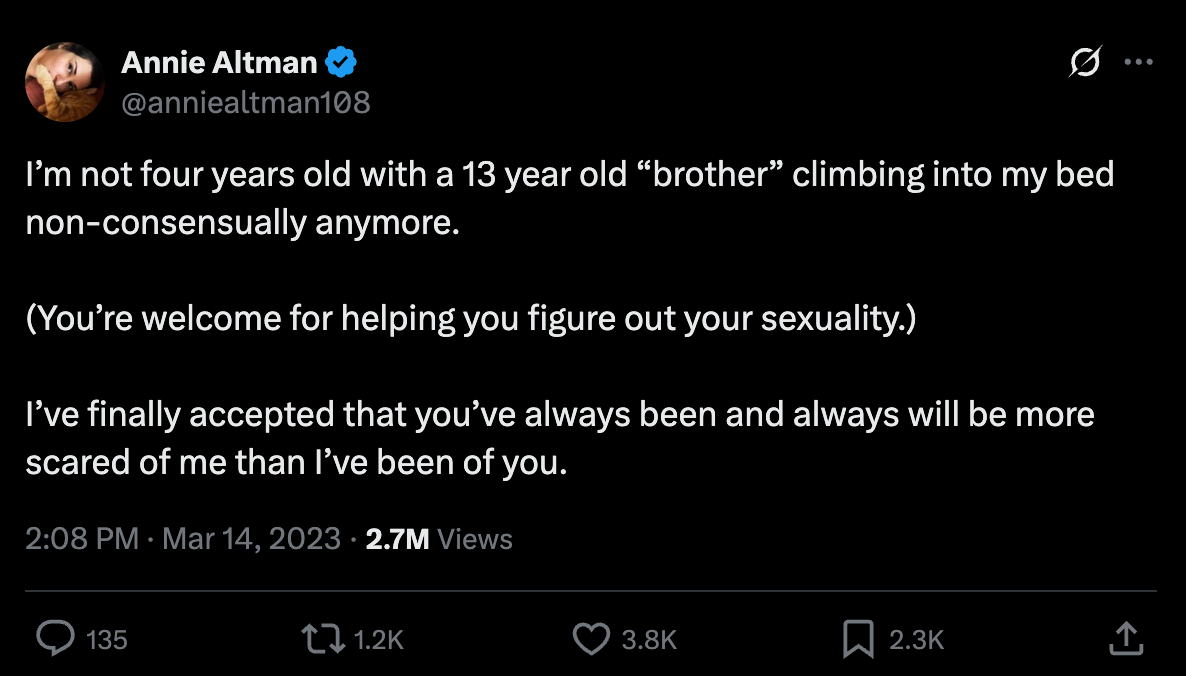

Even Altman’s sister, Annie Altman, has repeatedly accused Sam of abusive behavior. “I experienced sexual, physical, emotional, verbal, financial, and technological abuse from my biological siblings, mostly Sam Altman and some from Jack Altman,” she writes.

Annie adds: “I feel strongly that others have also been abused by these perpetrators. I’m seeking people to join me in pursuing legal justice, safety for others in the future, and group healing. Please message me with any information, you can remain however anonymous you feel safe.” In January of this year, Annie filed a lawsuit against Sam “claiming he sexually abused her.”

I was happy to see such accusations from Annie — made even more plausible by Sam Altman’s protracted history of abusive, toxic, and manipulative behavior — prominently featured in Karen Hao’s excellent book about Altman and OpenAI titled Empire of AI. This sort of thing:

In my view, Altman is very likely a sociopath: someone who does not suffer pangs of conscience, which is why he’s able to lie, cheat, and harm people with such ease. He certainly has a lot of sociopathic traits, even joking on multiple occasions about AI killing everyone on Earth.

For example, in 2015, he told the VP of engineering at Airbnb: “I think AI will … most likely sort of lead to the end of the world, but in the meantime there will be great companies.” On another occasion, he said that “probably AI will kill us all, but until then we’re going to turn out a lot of great students,” which triggered some laughter from the audience. Ha ha.

I mentioned Altman’s interview with Tyler Cowen above. This has also been making the rounds on social media, not just because Altman “appears to have been laying the groundwork for loan guarantees” (quoting Marcus), but because of how unbelievably goofy it is. At one point, the conversation goes:

Altman: I eat junk food. I don’t exercise enough. It’s like a pretty bad situation. I’m feeling bullied into taking this more seriously again.

Cowen: Yes, but why eat junk food? It doesn’t taste good.

Altman: It does taste good.

Cowen: Compared to good sushi? You could afford good sushi. Someone will bring it to you. A robot will bring it.

Altman: Sometimes late at night, you just really want that chocolate chip cookie at 11:30 at night, or at least I do.

Cowen: Yes. Do you think there’s any kind of alien life on the moons of Saturn? Because I do. That’s one of my nutty views.

Altman: I have no opinion on the matter.

At another point, Altman and Cowen say this:

Altman: Because we need to make more electrons.

Cowen: What’s stopping that? What’s the ultimate binding constraint?

Altman: We’re working on it really hard.

Cowen: If you could have more of one thing to have more compute, what would the one thing be?

Altman: Electrons.

Cowen: Electrons. Just energy. What’s the most likely short-term solution for that?

Altman: Short-term, natural gas.

Cowen: Easing, not full solution, but easing the constraint.

As the Northwestern University economist Ben Golub observes in a tweet about the exchange, this is “from the people who renamed computation power as ‘compute’ and model output as ‘inference.’” Another person notes that there are other examples, too. “AI people,” they write, “are pretty good at renaming concepts to make them sound much more science fictional,” such as “emergence, grokking, alignment, transcendence, compute, latency space, oracles … now get ready to refer to power as electrons.” Lolz.

It’s common knowledge that our capitalist system rewards people with sociopathic or psychopathic traits. As Forbes writes, “the link between leadership in the corporate world and non-violent psychopathy is widely acknowledged.”[1] Altman, to my mind, is a paradigmatic example of this phenomenon.

And as Futurism reports, he “now has the power to ‘crash the global economy’” because of his constant lies about how amazing LLMs would be, resulting in a huge bubble 17 times the size of the dot-com bubble. Like many sociopathic billionaires, Altman’s greatest legacy will likely be the profound harms he caused people on his single-minded quest to — paraphrasing Graham — “become powerful.”

Thanks so much for reading and I’ll see you on the other side!

[1] This article also discusses a 2023 study finding that “six CEOs who were involved in some of the biggest business scandals of the past few decades show that none of the CEOs fulfilled the diagnostic criteria for psychopathy.” But the study also found that “the CEOs’ scores are all above the population average — but below the diagnostic threshold for psychopathy.”

All the electrons that will ever exist in the universe exist now. No one can make more of them. And although the supply is essentially infinite, Scam Altman should not be allowed to hoard any more of them. GTFO, Altman.

To answer your question:

Yes.