Mars Colonies Cancelled, the Doomsday Clock Ticks Forward, and a TESCREAL Quiz

Plus a short discussion about whether your brain is a computer. (2,100 words)

Your Future Martian Vacation Has Been Cancelled

“I’d like to die on Mars, just not on impact” — Elon Musk, who will die on Earth.

We begin with some chuckleworthy news from the world of space-expansionist billionaires: Elon Musk now says that SpaceX isn’t aiming to establish a colony on Mars in the near future, but will instead focus on building a lunar city. He writes:

For those unaware, SpaceX has already shifted focus to building a self-growing city on the Moon, as we can potentially achieve that in less than 10 years, whereas Mars would take 20+ years.

Some context: in 2011, Musk told the Wall Street Journal that people would be living on Mars within 10 years. In 2014, he declared that “the first people could be taken to Mars in 10 to 12 years, I think it’s certainly possible for that to occur.” In 2016, he predicted that humans would arrive on Mars by 2021, and that “SpaceX would start sending rockets to Mars by 2018, followed by a new Mars mission every 26 months.” He added: “If things go according to plan, we should be able to launch people probably in 2024 with arrival in 2025.” And so on.

It’s astonishing that Musk has gotten so far by habitually lying. Imagine that you give me $1 million to write a book. I assure you that it will be finished later this year. By the end of the year, I’ve only written two pages. But, I tell you, the reason progress has been so slow is that I actually need more money to keep writing. So you give me another $1 million, and the cycle repeats until I’m a billionaire. No one would take me seriously — I’d rightly be seen as a contemptible schmuck who cheated you out of a large heap of money.

This is what Musk is doing, of course. He makes grand promises, which he then consistently fails to keep. Thus far, “SpaceX has received at least $1 billion in government contracts, loans, subsidies and tax credits each year since 2016, and between $2 billion and $4 billion a year from 2021 to 2024 – while Tesla has received over $1 billion a year since 2020.” That’s taxpayer money. Our money, transferred from our pockets to his.

I want to scream what Jesse screams about Walter White in Breaking Bad (lol):

Doomsday Clock Setting

It turns out that I was wrong about the Doomsday Clock, at least partly. In a previous newsletter, I guessed that (a) the minute hand would move forward, and (b) it would be set to 88 seconds before midnight/doom, an apropos number given that “88” is code for “Heil Hitler” among white supremacists.

Instead, the Bulletin of the Atomic Scientists set its iconic clock to 85 seconds to midnight, which is 4 seconds ahead of its previous setting. This is the closest we’ve ever been to doom, and I’m afraid that I agree with the Bulletin’s dismal assessment. We are pretty much superfucked right now.

The 2026 Doomsday Clock announcement opens with this chilling paragraph:

A year ago, we warned that the world was perilously close to global disaster and that any delay in reversing course increased the probability of catastrophe. Rather than heed this warning, Russia, China, the United States, and other major countries have instead become increasingly aggressive, adversarial, and nationalistic. Hard-won global understandings are collapsing, accelerating a winner-takes-all great power competition and undermining the international cooperation critical to reducing the risks of nuclear war, climate change, the misuse of biotechnology, the potential threat of artificial intelligence, and other apocalyptic dangers. Far too many leaders have grown complacent and indifferent, in many cases adopting rhetoric and policies that accelerate rather than mitigate these existential risks. Because of this failure of leadership, the Bulletin of the Atomic Scientists Science and Security Board today sets the Doomsday Clock at 85 seconds to midnight, the closest it has ever been to catastrophe.

It’s stunning to watch our civilization sleepwalk toward the precipice, in complete denial of the extreme danger that we’re in. Nationalism, fascism, trade wars, and a general breakdown of the postwar global order are tearing down barriers to global conflict. Our political leaders behave like climate change doesn’t pose a dire, unprecedented threat to our collective wellbeing. AI slop, deepfakes, and disinformation spread through social media are flooding our information ecosystems with noxious poison, fomenting political polarization and conspiracy theories. And the US economy is propped up by an AI bubble that appears close to bursting, with potentially devastating consequences.

Meanwhile, the Trump administration allowed the New START nuclear arms treaty to expire last Thursday. This treaty was “the last major guardrail constraining the nuclear arsenals of the two countries that together hold some 85% of the world’s warheads.” Although there will be negotiations over the next 6 months between Russia and the US to establish a new agreement, there’s no guarantee that such an agreement will be reached.

This comes at a time when, as the Bulletin points out, “the Russia–Ukraine war has featured novel and potentially destabilizing military tactics and Russian allusions to nuclear weapons use,” while Trump has suggested that the US might restart underground nuclear weapons testing.

Interestingly, the Doomsday Clock announcement also mentions that,

in December 2024, scientists from nine countries announced the recognition of a potentially existential threat to all life on Earth: the laboratory synthesis of so-called “mirror life.” Those scientists urged that mirror bacteria and other mirror cells — composed of chemically-synthesized molecules that are mirror-images of those found on Earth, much as a left hand mirrors a right hand — not be created, because a self-replicating mirror cell could plausibly evade normal controls on growth, spread throughout all ecosystems, and eventually cause the widespread death of humans, other animals, and plants, potentially disrupting all life on Earth. So far, however, the international community has not arrived at a plan to address this risk.

I’ll admit that mirror life really freaks me out. It’s the stuff of nightmares: mirror bacteria could potentially infect our bodies without even being detected by our immune systems. They could also obliterate entire ecosystems, as the Bulletin notes, thus quite plausibly precipitating the complete extinction of our species.

As Dario Amodei — a guy I wouldn’t normally cite — points out in his surprisingly decent essay “The Adolescence of Technology”:

Skeptics retreated to the objection that LLMs weren’t end-to-end useful, and couldn’t help with bioweapons acquisition as opposed to just providing theoretical information. As of mid-2025, our measurements show that LLMs may already be providing substantial uplift in several relevant areas, perhaps doubling or tripling the likelihood of success. … We believe that models are likely now approaching the point where, without safeguards, they could be useful in enabling someone with a STEM degree but not specifically a biology degree to go through the whole process of producing a bioweapon.

I have no idea if AI could enable someone with the relevant training to synthesize mirror lifeforms. But that thought is not patently preposterous, which is alarming. And for those who suspect that there aren’t malicious actors in the world who would willingly try to destroy humanity, I published two academic articles several years ago (here) showing that, in fact, there are lots of people who would push a “doomsday button” — perhaps in the form of laboratory-synthesized mirror life — if only they could. I also wrote about this in a previous newsletter article.

Someone who held a senior position in the Biden administration once told me that he knew of two graduate students at Duke University who specifically pursued PhDs in microbiology because they wanted to synthesize a doomsday pathogen. Both were negative utilitarians, meaning that they believed our only moral obligation is to minimize suffering. Since the best way to do this is to eliminate that which suffers, negative utilitarianism instructs adherents to become, as R. N. Smart put it in 1958, a “benevolent world-exploder.”

(Of note: the Efilist movement — that’s “life” spelled backwards — embraces the negative utilitarian ethic, and one of its members bombed a fertility clinic in California last year in hopes of minimizing suffering by preventing new people from being born. I have no doubt that mirror life is on the radar of Efilists, as well as the possibility of using AI to build bioweapons.)

In conclusion, the world is in bad shape, and things are getting worse. That’s why the Doomsday Clock ticked forward. None of this is inevitable, though it often feels that way, as we’re up against forces that appear inexorable: billionaire techno-feudalists, TESCREAL fanatics driving the AGI race, fascists and authoritarians controlling world superpowers, and so on.

I’d recommend reading the entire Doomsday Clock announcement, which is quite short.

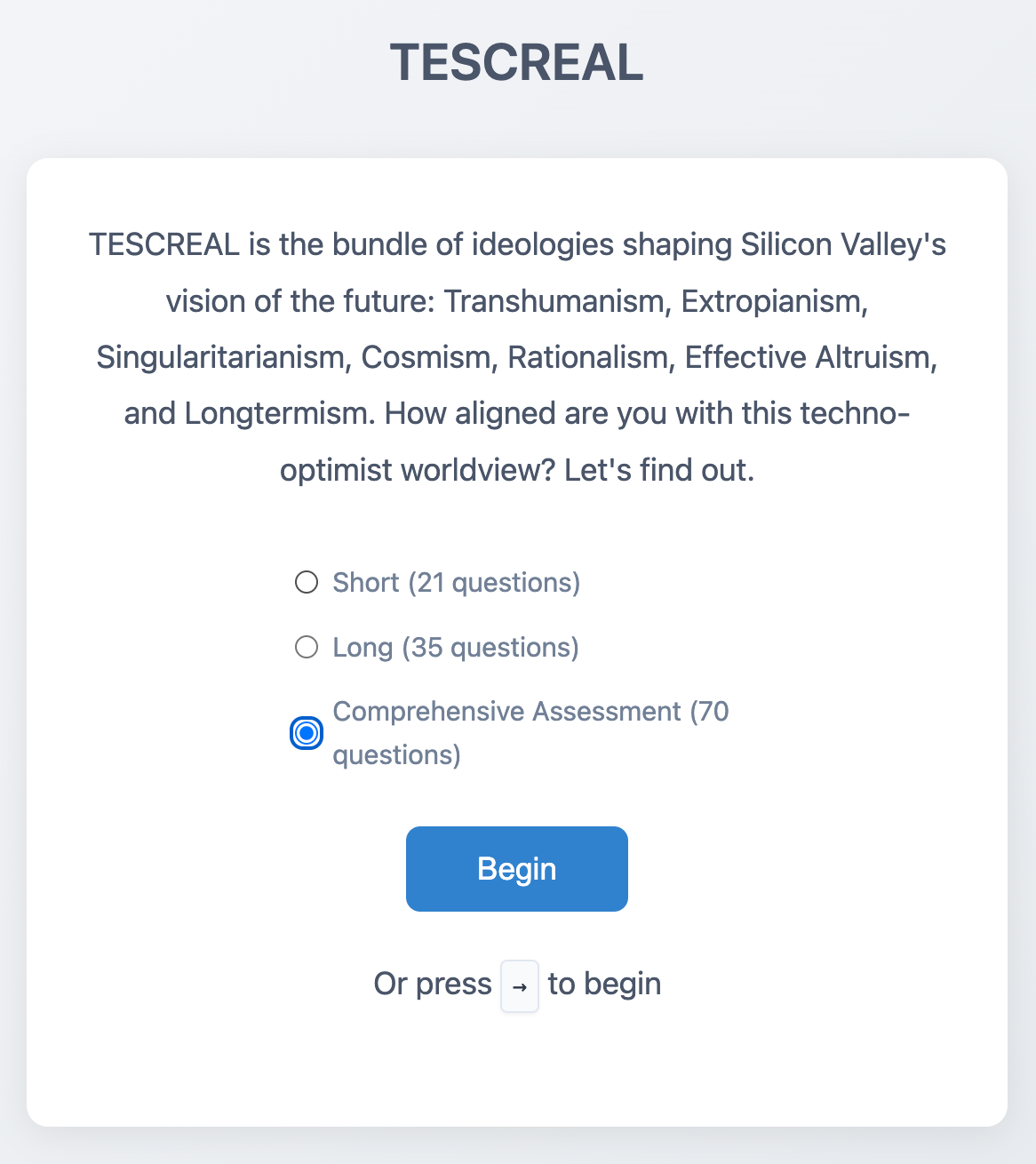

How TESCREAL Are YOU?

The other day, Timnit Gebru sent me a link to a survey that attempts to assess how TESCREAL one is. It’s quite amusing:

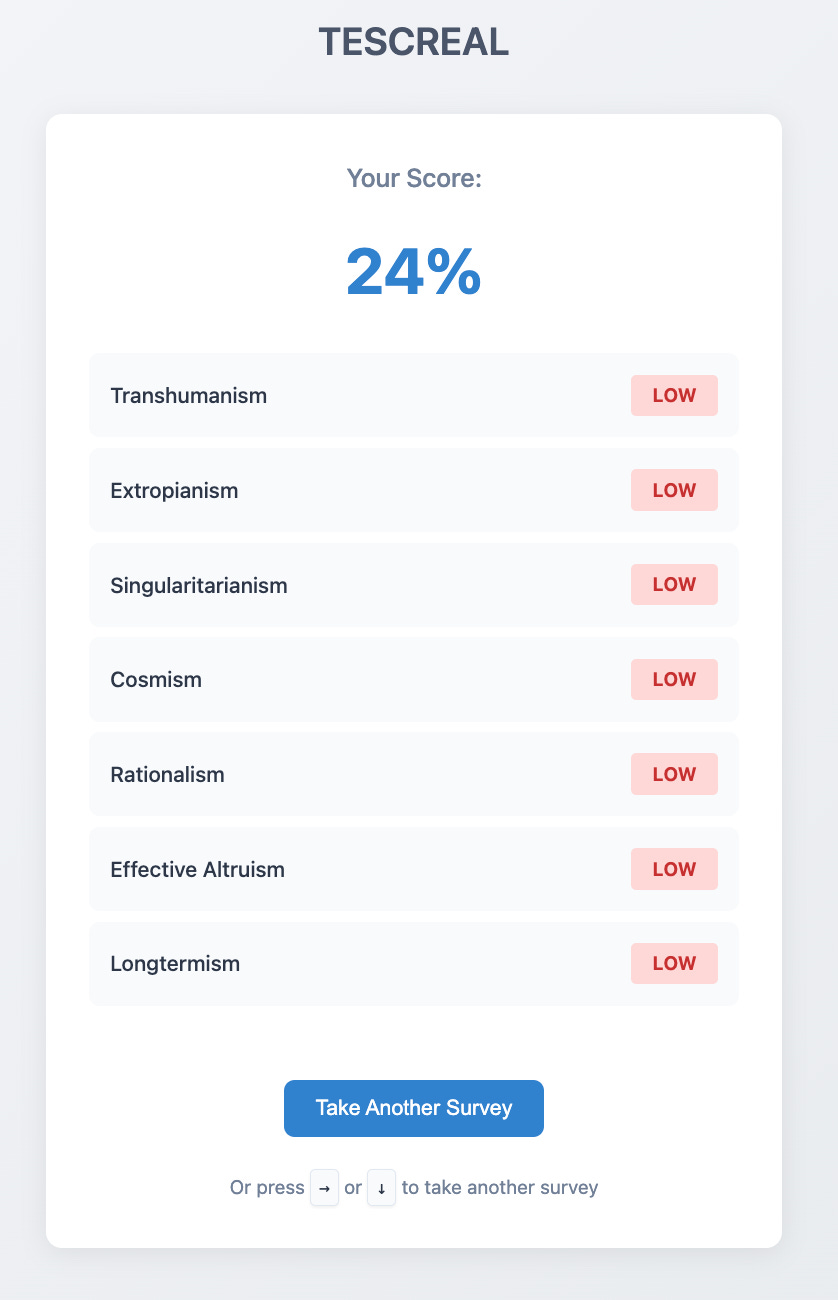

I took the 70-question comprehensive survey (less than 10 minutes), and here are my results. I’m surprised I scored this high!

Vitalik Buterin, who won a Thiel Fellowship and cofounded Ethereum, also shared his score on Bluesky. Buterin is a major funder of the TESCREAL movement; for example, he recently donated $660 million to the TESCREAL-aligned Future of Life Institute.

Someone else wrote this in response to the original post sharing the survey:

Those comments dovetail with my previous newsletter article. Indeed, they point to an idea that I haven’t yet written about (although I plan to in my book): Silicon Valley pro-extinctionists tend to see humanity, and life itself, different from human preservationists like myself. Consider this excerpt from an excellent article by my friend Dan Zimmer:

Today’s growing political fault line runs deeper than merely a preference for technological or ecological solutions, however, for it turns out that these new servants of Life also disagree on what Life itself is. One camp views Life primarily as an information process to expand and enhance, while the other conceives of Life chiefly as a complex system to maintain and balance. These contrasting perspectives inspire rival political visions: one gazing upward toward Life’s cosmic conquests and the other downward toward Life’s planetary entanglements.

TESCREALists tend to see life, and hence humanity, as entirely reducible to information processing. They see our brains as biological computers (“wetware”) performing computations in accordance with cognitive algorithms. Your personality, preferences, values, hopes, dreams, and conscious experiences are all just patterns of information being shuffled about by the wriggling neurons in your brain — that’s it.

When one accepts this view, it becomes immediately apparent that our biological substrate (our bodies and brains) isn’t important. If the same cognitive algorithms that give rise to your personality, values, thoughts, etc. were replicated on computer hardware, you would persist. More generally, if our intelligence is nothing but software, then we could presumably build artificial systems running even “better” software.

Many TESCREALists maintain that what’s valuable about humans is our intelligence, consciousness, and values. Since these don’t require the biological substrate of our bodies and brains to persist, there’s no reason to keep our species around. Simply transfer those attributes to computer hardware and then expand, augment, and enhance them. What matters, on this account, is preserving the abstract informational patterns that constitute life, intelligence, and consciousness. Ray Kurzweil himself calls this “patternism.” As he writes: “Our ultimate reality is our pattern.”

I wholly reject this view, which is why I so vigorously argue that preserving our biological species is important (at least for the foreseeable future, by which I mean many centuries or millennia). I reject it for a multitude of reasons, which I’ll save for another article. The point for now is to briefly highlight the more fundamental, metaphysical disagreements between people in my camp and the TESCREALists, who see flesh-and-blood humanity as disposable. More on this soon!

Podcast Episodes!

Finally, I keep forgetting to promote new episodes of my podcast Dystopia Now. Here is, once again, a recent discussion with my friend Dr. Alexander Thomas. I really enjoyed our chat, gloomy as it was, and think you might, too:

We also recorded a subscription-only episode on Stop AI, after one of its members defected and appeared to threaten violence against AI companies. This happened last November, and immediately afterwards, the individual in question disappeared. He is still missing as of this writing.

As always:

Thanks so much for reading and I’ll see you on the other side!

Scored 13%. Which… yeah. Although my mood at the time was very anti-TESCREAL.

I don't know. I tend to think about the ethics of something on a more… relational scale? i.e. our relationship with each other, the biosphere, our bodies and physicality, our cultures and upbringings. And I'm automatically suspicious of quantitative approaches to ethical questions, because a quantitative approach must necessarily strip out the subtleties of the question in itself. Like… one of the questions was about how the Singularity would be one of the most momentous events in human history, and I agreed with it. I didn't think it would be a GOOD thing though — assuming that it WAS possible, then… yeah! It would be momentous! A momentous DISASTER, for sure.

Also, that question about if your gut feels bad even if you've done the ethical calculation… buddy, it's time to examine the assumptions behind the ethical calculation, then. It's ACTUALLY possible you got something wrong. This is the sort of shit that causes people to walk into abusive relationships. Good lord, please look out for yourself.

Re: your concerns about mirror life… I commented about it in the newsletter you posted last time as well. Are mirror life and tabletop WMDs possible? Sure. Is THAT the stuff that's most likely gonna kill us all? Nah. I'm more worried about RFK Jr.'s policies causing mass death by gutting healthcare services. Or a world leader losing his mind (and it's likely a he) and launching the nukes and everyone not knowing who his target was and just deciding to launch at their hardwired, predetermined targets.

I've been listening to Robert Evans of Behind the Bastards talk about how the entire nuclear situation we're in was caused by a bunch of men who were traumatized by WW1 and totally determined in their (erroneous) belief that all you need is MOAR DAKKA targeted at civilian populations to win ANY war, despite repeated evidence to the contrary. It turns out that when you bomb the shit out of a country, you don't break the spirits of its civilians; you just piss them off.

Like, I guess dwelling on mirror life for long periods of time might be upsetting, but honestly, I'm more frustrated, frightened, and angry that the people who colluded with a rapist, child trafficker and sexual abuser are STILL the same people who are trying to dismantle international law, democracy in the country with the world's largest nuclear arsenal, and our information systems.

And they're SUCH LOSERS about it, too.

I couldn't do that 70-question quiz in ten minutes. Each question was several minutes' worth of thinking before I could give a straight "disagree" or "agree," because the phrasing was such a challenge. I very much enjoyed the process, so I will finish it.

My guess is that my percentage will be pretty high, but not as high as my initial expectation.

Also: every time you make a statement like this one: "Silicon Valley pro-extinctionists tend to see humanity, and life itself, different from human preservationists like myself," I'm reminded why I keep coming back to read you, despite constantly disagreeing with you. It helps to remember that while you put a label like "TESCREAList" or "pro-extinctionist" on people, you aren't dodging your own label.